Resources:

- An Introduction to Probabilistic Graphical Models: Conditional Independence and Factorization (M Jordan)

- An Intro to PGMs: The Elimination Algorithm (M Jordan)

- An Intro to PGMs: Probability Propagation and Factor Graphs

- An Intro to PGMs: The EM algorithm

- An Intro to PGMs: Hidden Markov Models

- A Brief Introduction to Graphical Models and Bayesian Networks (Paper!)

- Deep Learning vs Probabilistic Graphical Models vs Logic (Blog!)

- Probabilistic Graphical Models CS-708 (CMU!)

- Graphical Models, Exponential Families, and Variational Inference (M. Jordan)

Graphical Models

-

Motivation:

Machine learning algorithms often involve probability distributions over a very large number of random variables. Often, these probability distributions involve direct interactions between relatively few variables. Using a single function to describe the entire joint probability distribution can be very inefficient (both computationally and statistically).A description of a probability distribution is exponential in the number of variables it models.

- Graphical Model:

A graphical model or probabilistic graphical model (PGM) or structured probabilistic model is a probabilistic model for which a graph expresses the conditional dependence structure (factorization of a probability distribution) between random variables.Generally, this is one of the most common statistical models

Properties:

- Factorization

- Independence

Graph Structure:

A PGM uses a graph \(\mathcal{G}\) in which each node in the graph corresponds to a random variable, and an edge connecting two r.vs means that the probability distribution is able to represent interactions between those two r.v.s.Types:

- Directed:

Directed models use graphs with directed edges, and they represent factorizations into conditional probability distributions.

They contain one factor for every random variable \(x_i\) in the distribution, and that factor consists of the conditional distribution over \(x_i\) given the parents of \(x_i\). - Undirected:

Undirected models use graphs with undirected edges, and they represent factorizations into a set of functions; unlike in the directed case, these functions are usually not probability distributions of any kind.

Core Idea of Graphical Models:

The probability distribution factorizes according to the cliques in the graph, with the potentials usually being of the exponential family (and a graph expresses the conditional dependence structure between random variables).

- Neural Networks and Graphical Models:

Deep NNs as PGMs:

You can view a deep neural network as a graphical model, but here, the CPDs are not probabilistic but are deterministic. Consider for example that the input to a neuron is \(\vec{x}\) and the output of the neuron is \(y .\) In the CPD for this neuron we have, \(p(\vec{x}, y)=1,\) and \(p(\vec{x}, \hat{y})=0\) for \(\hat{y} \neq y .\) Refer to the section 10.2 .3 of Deep Learning Book for more details.

Bayesian Network

- Bayesian Network:

A Bayesian network, Bayes network, belief network, or probabilistic directed acyclic graphical model is a probabilistic graphical model that represents a set of variables and their conditional dependencies via a directed acyclic graph (DAG).E.g. a Bayesian network could represent the probabilistic relationships between diseases and symptoms.

Bayes Nets (big picture):

Bayes Nets: a technique for describing complex joint distributions (models) using simple, local distributions (conditional probabilities).In other words, they are a device for describing a complex distribution, over a large number of variables, that is built up of small pieces (local interactions); with the assumptions necessary to conclude that the product of those local interactions describe the whole domain.

Formally, a Bayes Net consists of:

- A directed acyclic graph of nodes, one per variable \(X\)

- A conditional distribution for each node \(P(X\vert A_1\ldots A_n)\), where \(A_i\) is the \(i\)th parent of \(X\), stored as a conditional probability table or CPT.

Each CPT has \(n+2\) columns: one for the values of each of the \(n\) parent variables \(A_1 \ldots A_n\), one for the values of \(X\), and one for the conditional probability of \(X\).

Each node in the graph represents a single random variable and each directed edge represents one of the conditional probability distributions we choose to store (i.e. an edge from node \(A\) to node \(B\) indicates that we store the probability table for \(P(B\vert A)\)).

Each node is conditionally independent of all its ancestor nodes in the graph, given all of its parents. Thus, if we have a node representing variable \(X\), we store \(P(X\vert A_1,A_2,...,A_N)\), where \(A_1,\ldots,A_N\) are the parents of \(X\).The local probability tables (of conditional distributions) and the DAG together encode enough information to compute any probability distribution that we could have otherwise computed given the entire joint distribution.

Motivation:

There are problems with using full join distribution tables as our probabilistic models:- Unless there are only a few variables, the joint is WAY too big to represent explicitly

- Hard to learn (estimate) anything empirically about more than a few variables at a time

Examples of Bayes Nets:

- Coin Flips:

img1 - Traffic:

img2 - Traffic II:

img3 - Alarm Network:

img4

Probabilities in BNs:

- Bayes Nets implicitly encode joint distributions:

Encoded as a product of local conditional distributions:$$p(x_1, x_2, \ldots, x_n) = \prod_{i=1}^{n} p(x_i \vert \text{parents}(X_i)) \tag{1.1}$$

- We are guaranteed that \(1.1\) results in a proper joint distribution:

- Chain Rule is valid for all distributions:

$$p(x_1, x_2, \ldots, x_n) = \prod_{i=1}^{n} p(x_i \vert x_1, \ldots, x_{i-1})$$

- Conditional Independences Assumption:

$$p(x_1, x_2, \ldots, x_{i-1}) = \prod_{i=1}^{n} p(x_i \vert \text{parents}(X_i))$$

\(\implies\)

$$p(x_1, x_2, \ldots, x_n) = \prod_{i=1}^{n} p(x_i \vert \text{parents}(X_i)) \tag{1.1}$$

- Chain Rule is valid for all distributions:

Thus (from above) Not every BN can represent every joint distribution.

The topology enforces certain conditional independencies that need to be met.e.g. Only distributions whose variables are absolutely independent can be represented by a Bayes Net with no arcs

Causality:

Although the structure of the BN might be in a way that encodes causality, it is not necessary to define the joint distribution graphically. The two definitions below are the same:

img5

To summarize:- When BNs reflect the true causal patterns:

- Often simpler (nodes have fewer parents)

- Often easier to think about

- Often easier to elicit from experts

- BNs need NOT be causal:

- Sometimes no causal net exists over the domain (especially if variables are missing)

- e.g. consider the variables \(\text{Traffic}\) and \(\text{Drips}\)

- Results in arrows that reflect correlation, not causation

- Sometimes no causal net exists over the domain (especially if variables are missing)

- The meaning of the arrows:

- The topology may happen to encode causal structure

- But, the topology really encodes conditional independence

$$p(x_1, x_2, \ldots, x_{i-1}) = \prod_{i=1}^{n} p(x_i \vert \text{parents}(X_i))$$

Questions we can ask

Since a BN encodes a joint distribution we can ask any questions a joint distribution can answer:- Inference: given a fixed BN, what is \(P(X \vert \text { e)? }\)

- Representation: given a BN graph, what kinds of distributions can it encode?

- Modeling: what BN is most appropriate for a given domain?

Size of a BN:

- Size of a full joint distribution over \(N\) (boolean) variables: \(2^N\)

- Size of an \(N\)-node net with nodes having up to \(k\) parents: \(\mathcal{O}(N \times 2^{k+1}\)

Advantages of BN over full joint distribution:

- Both will model (calculate) \(p(X_1, X_2, \ldots, X_N)\)

- BNs give you huge space savings

- It is easier to elicit local CPTs

- Is faster to answer queries

Notes:

- The acyclicity gives an order to the (order-less) chain-rule of conditional probabilities

- Think of the conditional distribution for each node as a description of a noisy “causal” process

- The graph of the BN represents certain independencies directly, but also contains extra independence assumptions that can be “inferred” from the shape of the graph

- There could be extra independence relationships in the distribution that are not represented by the BN graph structure but can be read in the CPT. This happens when the structure of the BN is not “optimal” for the assumptions between the variables.

- Independence / D-Separation:

Goal is to find a graph algorithm that can show independencies between variables in BNs. Steps:- Study independence properties for triples

- Analyze complex cases (configurations) in terms of member triples

- D-separation: a condition / algorithm for answering such queries

Causal Chains:

A Causal Chain is a configuration of three nodes that expresses the following representation of the joint distribution over \(X\), \(Y\) and \(Z\):$$P(x, y, z)=P(z \vert y) P(y \vert x) P(x)$$

Let’s try to see if we can guarantee independence between \(X\) and \(Z\):

- No Observations:

\(X\) and \(Z\) are not guaranteed independent.- Proof:

By Counterexample:$$P(y \vert x)=\left\{\begin{array}{ll}{1} & {\text { if } x=y} \\ {0} & {\text { else }}\end{array} \quad P(z \vert y)=\left\{\begin{array}{ll}{1} & {\text { if } z=y} \\ {0} & {\text { else }}\end{array}\right.\right.$$

\(\text { In this case, } P(z \vert x)=1 \text { if } x=z \text { and } 0 \text { otherwise, so } X \text { and } Z \text { are not independent.}\)

- Proof:

- \(Y\) Observed:

\(X\) and \(Z\) are independent given \(Y\). i.e. \(P(X \vert Z, Y)=P(X \vert Y)\)- Proof:

$$\begin{aligned} P(X \vert Z, y) &=\frac{P(X, Z, y)}{P(Z, y)}=\frac{P(Z \vert y) P(y \vert X) P(X)}{\sum_{x} P(X, y, Z)}=\frac{P(Z \vert y) P(y \vert X) P(X)}{P(Z \vert y) \sum_{x} P(y \vert x) P(x)} \\ &=\frac{P(y \vert X) P(X)}{\sum_{x} P(y \vert x) P(x)}=\frac{P(y \vert X) P(X)}{P(y)}=P(X \vert y) \end{aligned}$$

An analogous proof can be used to show the same thing for the case where X has multiple parents.

- Proof:

To summarize, in the causal chain chain configuration, \(X \perp Z \vert Y\) .

Evidence along the chain “blocks” the influence.

Common Cause:

Common Cause is another configuration for a triple. It expresses the following representation:$$P(x, y, z)=P(x \vert y) P(z \vert y) P(y)$$

Let’s try to see if we can guarantee independence between \(X\) and \(Z\):

- No Observations:

\(X\) and \(Z\) are not guaranteed independent.- Proof:

By Counterexample:$$P(x \vert y)=\left\{\begin{array}{ll}{1} & {\text { if } x=y} \\ {0} & {\text { else }}\end{array} \quad P(z \vert y)=\left\{\begin{array}{ll}{1} & {\text { if } z=y} \\ {0} & {\text { else }}\end{array}\right.\right.$$

\text { Then } P(x \vert z)=1 \text { if } x=z \text { and } 0 \text { otherwise, so } X \text { and } Z \text { are not independent. }

- Proof:

- \(Y\) Observed:

\(X\) and \(Z\) are independent given \(Y\). i.e. \(P(X \vert Z, Y)=P(X \vert Y)\)- Proof:

$$P(X \vert Z, y)=\frac{P(X, Z, y)}{P(Z, y)}=\frac{P(X \vert y) P(Z \vert y) P(y)}{P(Z \vert y) P(y)}=P(X \vert y)$$

- Proof:

Observing the cause blocks the influence.

Common Effect:

Common Effect is the last configuration for a triplet. Expressing the representation:$$P(x, y, z)=P(y \vert x, z) P(x) P(z)$$

Let’s try to see if we can guarantee independence between \(X\) and \(Z\):

-

No Observations:

\(X\) and \(Z\) are, readily, guaranteed to be independent: \(X \perp Z\). -

\(Y\) Observed:

\(X\) and \(Z\) are not necessarily independent given \(Y\). i.e. \(P(X \vert Z, Y)\neq P(X \vert Y)\)- Proof:

By Counterexample:

\(\text { Suppose all three are binary variables. } X \text { and } Z \text { are true and false with equal probability: }\)$$\begin{array}{l}{P(X=\text {true})=P(X=\text { false })=0.5} \\ {P(Z=\text {true})=P(Z=\text { false })=0.5}\end{array}$$

\(\text { and } Y \text { is determined by whether } X \text { and } Z \text { have the same value: }\)

$$P(Y \vert X, Z)=\left\{\begin{array}{ll}{1} & {\text { if } X=Z \text { and } Y=\text { true }} \\ {1} & {\text { if } X \neq Z \text { and } Y=\text { false }} \\ {0} & {\text { else }}\end{array}\right.$$

\(\text { Then } X \text { and } Z \text { are independent if } Y \text { is unobserved. But if } Y \text { is observed, then knowing } X \text { will }\\ \text { tell us the value } {\text { of } Z, \text { and vice-versa. } \text{So } X \text { and } Z \text { are } \text {not} \text { conditionally independent given } Y \text {. }}\)

- Proof:

Common Effect can be viewed as “opposite” to Causal Chains and Common Cause-\(X\) and \(Z\) are guaranteed to be independent if \(Y\) is not conditioned on. But when conditioned on \(Y, X\) and \(Z\) may be dependent depending on the specific probability values for \(P(Y \vert X, Z)\)).

This same logic applies when conditioning on descendants of \(Y\) in the graph. If one of \(Y\) ‘s descendent nodes is observed, as in Figure \(7, X\) and \(Z\) are not guaranteed to be independent.

Observing an effect activates influence between possible causes.

General Case, and D-Separation:

We can use the previous three cases as building blocks to help us answer conditional independence questions on an arbitrary Bayes’ Net with more than three nodes and two edges. We formulate the problem as follows:

Given a Bayes Net \(G,\) two nodes \(X\) and \(Y,\) and a (possibly empty) set of nodes \(\left\{Z_{1}, \ldots Z_{k}\right\}\) that represent observed variables, must the following statement be true: \(X \perp Y |\left\{Z_{1}, \ldots Z_{k}\right\} ?\)D-Separation: (directed separation) is a property of the structure of the Bayes Net graph that implies this conditional independence relationship, and generalizes the cases we’ve seen above. If a set of variables \(Z_{1}, \cdots Z_{k} d-\) -separates \(X\) and \(Y,\) then \(X \perp Y \vert\left\{Z_{1}, \cdots Z_{k}\right\}\) in all possibutions that can be encoded by the Bayes net.

D-Separation Algorithm:

- Shade all observed nodes \(\left\{Z_{1}, \ldots, Z_{k}\right\}\) in the graph.

- Enumerate all undirected paths from \(X\) to \(Y\) .

- For each path:

- Decompose the path into triples (segments of 3 nodes).

- If all triples are active, this path is active and \(d\) -connects \(X\) to \(Y\).

- If no path d-connects \(X\) and \(Y\) and \(Y\) are d-separated, so they are conditionally independent given \(\left\{Z_{1}, \ldots, Z_{k}\right\}\)

Any path in a graph from \(X\) to \(Y\) can be decomposed into a set of 3 consecutive nodes and 2 edges - each of which is called a triple. A triple is active or inactive depending on whether or not the middle node is observed. If all triples in a path are active, then the path is active and \(d\) -connects \(X\) to \(Y,\) meaning \(X\) is not guaranteed to be conditionally independent of \(Y\) given the observed nodes. If all paths from \(X\) to \(Y\) are inactive, then \(X\) and \(Y\) are conditionally independent given the observed nodes.

Active Triples: We can enumerate all possibilities of active and inactive triples using the three canonical graphs we presented above in Figure 8 and 9.

Examples:

Structure Implications:

Given a Bayes net structure, can run d-separation algorithm to build a complete list of conditional independences that are necessarily true of the form.$$X_{i} \perp X_{j} |\left\{X_{k_{1}}, \ldots, X_{k_{n}}\right\}$$

This list determines the set of probability distributions that can be represented.

Topology Limits Distributions:

- Given some graph topology \(G\), only certain joint distributions can be encoded

- The graph structure guarantees certain (conditional) independences

- (There might be more independence)

- Adding arcs increases the set of distributions, but has several costs

- Full conditioning can encode any distribution

- The more assumptions you make the fewer the number of distributions you can represent

Bayes Nets Representation Summary:

- Bayes nets compactly encode joint distributions

- Guaranteed independencies of distributions can be deduced from BN graph structure

- D-separation gives precise conditional independence guarantees from graph alone

- A Bayes nets joint distribution may have further (conditional) independence that is not detectable until you inspect its specific distribution

-

Inference:

Lecture (188)Inference is the process of calculating the joint PDF for some set of query variables based on some set of observed variables.

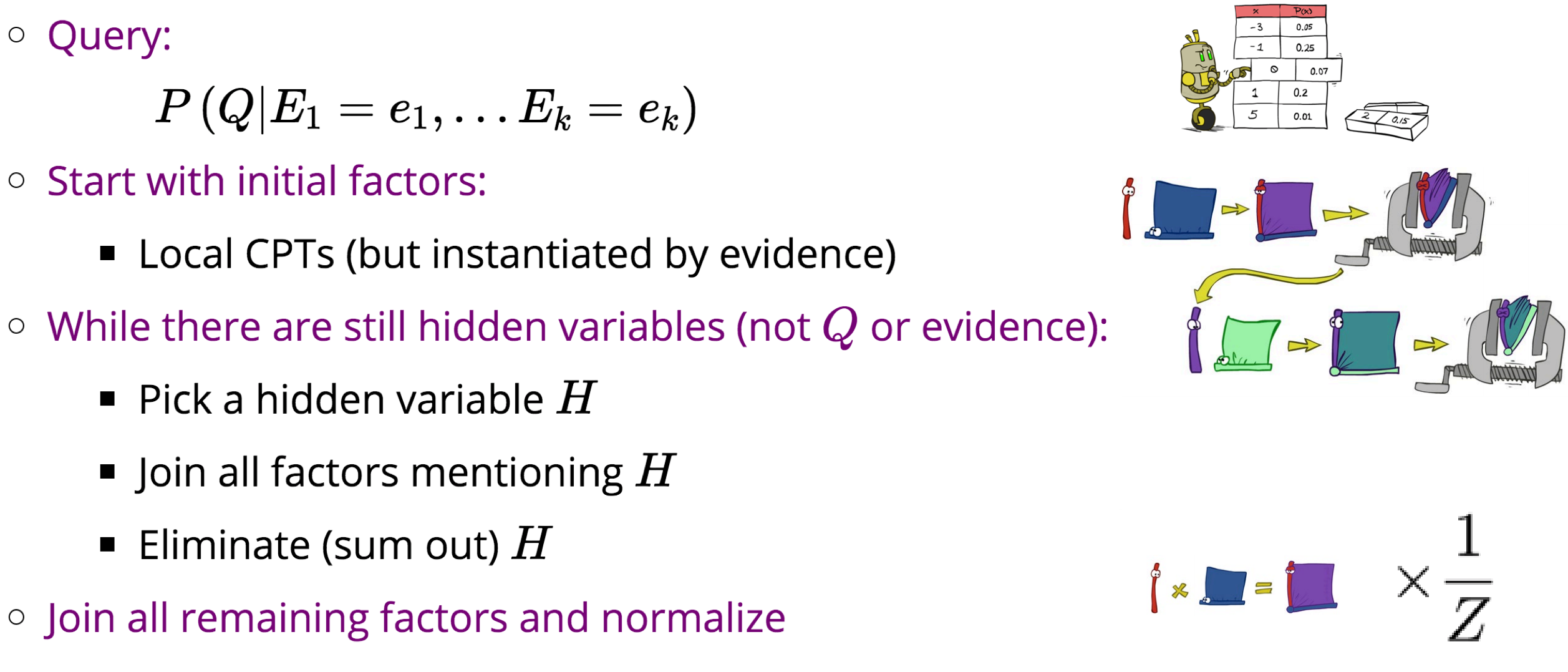

For example:- Posterior Probability inference:

$$P\left(Q \vert E_{1}=e_{1}, \ldots E_{k}=e_{k}\right)$$

- Most Likely Explanation inference:

$$\operatorname{argmax}_{q} P\left(Q=q \vert E_{1}=e_{1} \ldots\right)$$

Notation - General Case:

$$ \left.\begin{array}{ll}{\textbf { Evidence variables: }} & {E_{1} \ldots E_{k}=e_{1} \ldots e_{k}} \\ {\textbf { Query}^{* } \textbf { variable: }} & {Q} \\ {\textbf { Hidden variables: }} & {H_{1} \ldots H_{r}}\end{array}\right\} \begin{array}{l}{X_{1}, X_{2}, \ldots X_{n}} \\ {\text { All variables }}\end{array} $$

Inference by Enumeration:

We can solve this problem naively by forming the joint PDF and using inference by enumeration as described above. This requires the creation of and iteration over an exponentially large table.

Algorithm:- Select the entries consistent with the evidence

- Sum out \(\mathrm{H}\) to get join of Query and evidence

- Normalize: \(\times \dfrac{1}{Z} = \dfrac{1}{\text{sum of entries}}\)

- Inference by Enumeration (188)

Variable Elimination:

Alternatively, we can use Variable Elimination: eliminate variables one by one.

To eliminate a variable \(X\), we:- Join (multiply together) all factors involving \(X\).

- Sum out \(X\).

A factor is an unnormalized probability; represented as a multidimensional array:

- Joint Distributions: \(P(X,Y) \in \mathbb{R}^2\)

- Entries \(P(x, y)\) for all \(x, y\)

- Sums to \(1\)

- Selected Joint: \(P(x,Y) \in \mathbb{R}\)

- A slice of the joint distribution

- Entries \({P}({x}, {y})\) for fixed \({x},\) all \({y}\)

- Sums to \(P(x)\)

- Single Conditional: \(P(Y \vert x)\)

- Entries \({P}({y} \vert {x})\) for fixed \({x},\) all \({y}\)

- Sums to \(1\)

- Family of Conditionals: \(P(Y \vert X)\)

- Multiple Conditionals

- Entries \({P}({y} \vert {x})\) for all \({x}, {y}\)

- Sums to \(\vert X\vert\)

- Specified family: \(P(y \vert X)\)

- Entries \(P(y \vert x)\) for fixed \(y\) but for all \(x\)

- Sums to random number; not a distribution

For Joint Distributions, the # capital variables dictates the “dimensionality” of the array.

At all points during variable elimination, each factor will be proportional to the probability it corresponds to but the underlying distribution for each factor won’t necessarily sum to \(1\) as a probability distribution should.

Inference by Enumeration vs. Variable Elimination:

Inference by Enumeration is very slow: You must join up the whole joint distribution before you sum out the hidden variables.

Variable Elimination: Interleave joining and marginalization.

Still NP-hard, but usually much faster.

Notice that \(\sum_r P(r) P(t \vert r) = P(t)\), thus, in VE, you end up with \(\sum_{t} P(L \vert t) P(t)\).General Variable Elimination - Algorithm:

VE - Computational and Space Complexity:

- The computational and space complexity of variable elimination is determined by the largest factor.

- The elimination ordering can greatly affect the size of the largest factor.

- There does NOT always exist an ordering that only results in small factors.

- VE is NP-Hard:

- Proof:

We can reduce 3-Sat to a BN-Inference problem.

We can encode a Constrained Satisfiability Problem (CSP) in a BN and use it to give a solution to the CSP; if the CSP consists of 3 clauses, then finding a solution for the CSP via BN-Inference is equivalent to solving 3-Sat.

- Proof:

- Thus, inference in Bayes’ nets is NP-hard.

No known efficient probabilistic inference in general.

Polytrees:

A Polytree is a directed graph with no undirected cycles.

For polytrees we can always find an ordering that is efficient.- Cut-set conditioning for Bayes’ net inference: Choose set of variables such that if removed only a polytree remains.

- Posterior Probability inference:

-

Sampling:

Sampling is the process of generating observations/samples from a distribution.

- Sampling is like doing repeated (probabilistic) simulation.

- Sampling could be used for learning (e.g. RL). But in the context of BNs, it is used for Inference.

- Sampling provides a way to do efficient inference, by presenting us with a tradeoff between accuracy and computation (time).

- The Goal is to prove that as the number of samples you generate \(N\) goes to \(\infty\), the approximation converges to the true probability you are trying to compute.

- Using sampling in a BN from the entire network is necessary, because listing all the outcomes is too expensive even if we can create them given infinite time.Idea/Algorithm for Inference:

- Draw \(N\) samples from a sampling distribution \(S\)

- Compute an approximate posterior probability

- Show this converges to the true probability \(P\)

Sampling from a given distribution:

- Get sample \(u\) from uniform distribution over \([0,1)\)

- Convert this sample \(u\) into an outcome for the given distribution by having each target outcome associated with a sub-interval of \([0,1)\) with sub-interval size equal to probability of the outcome

Sampling Algorithms in BNs:

- Prior Sampling

- Rejection Sampling

- Likelihood Weighting

- Gibbs Sampling

Prior Sampling:

Algorithm:

- For \(i=1,2, \ldots, n\)

- Sample \(x_{i}\) from \(P\left(X_{i} \vert \text { Parents }\left(X_{i}\right)\right)\)

- Return \(\left(x_{1}, x_{2}, \ldots, x_{n}\right)\)

Notes:

- This process generates samples with probability:

$$ \begin{aligned} S_{P S}\left(x_{1} \ldots x_{n}\right)=\prod_{i=1}^{n} P\left(x_{i} \vert \text { Parents }\left(X_{i}\right)\right)=P\left(x_{1} \ldots x_{n}\right) \\ \text { ...i.e. the BN's joint probability } \end{aligned} $$

- Let the number of samples of an event be \(N_{P S}\left(x_{1} \cdots x_{n}\right)\)

- Thus,

$$\begin{aligned} \lim _{N \rightarrow \infty} \widehat{P}\left(x_{1}, \ldots, x_{n}\right) &=\lim _{N \rightarrow \infty} N_{P S}\left(x_{1}, \ldots, x_{n}\right) / N \\ &=S_{P S}\left(x_{1}, \ldots, x_{n}\right) \\ &=P\left(x_{1} \ldots x_{n}\right) \end{aligned}$$

- I.e., the sampling procedure is consistent

Rejection Sampling:

Rejection SamplingIt is also consistent.

Idea:

Same as Prior Sampling, but no point in keeping all of the samples. We just tally the outcomes that match our evidence and reject the rest.

Algorithm:

- Input: evidence instantiation

- For \(i=1,2, \ldots, n\)

- Sample \(x_{i}\) from \(P\left(X_{i} \vert \text { Parents }\left(X_{i}\right)\right)\)

- If $x_{i}$ not consistent with evidence

- Reject: return - no sample is generated in this cycle

- Return \(\left(x_{1}, x_{2}, \ldots, x_{n}\right)\)

Likelihood Weighting:

Likelihood WeightingKey Ideas:

Fixes a problem with Rejection Sampling:

- If evidence is unlikely, rejects lots of samples

- Evidence not exploited as you sample

Idea: fix evidence variables and sample the rest. - Problem: sample distribution not consistent!

- Solution: weight by probability of evidence given parents.

Algorithm:

- Input: evidence instantiation

- \[w=1.0\]

- for \(i=1,2, \dots, n\)

- if \(\mathrm{x}_ {\mathrm{i}}\) is an evidence variable

- \(\mathrm{n} \mathrm{x} _ {\mathrm{i}}=\) observation \(\mathrm{x}_ {\mathrm{i}}\) for \(\mathrm{x}_ {\mathrm{i}}\)

- \[\operatorname{set} \mathrm{w}=\mathrm{w} * \mathrm{P}\left(\mathrm{x}_ {\mathrm{i}} \vert \text { Parents(X.) }\right.\]

- else

- Sample \(x_ i\) from \(P\left(X _ {i} \vert \text { Parents }\left(X _ {i}\right)\right)\)

- if \(\mathrm{x}_ {\mathrm{i}}\) is an evidence variable

- Return \(\left(\mathrm{x}_ {1}, \mathrm{x}_ {2}, \ldots, \mathrm{x}_ {\mathrm{n}}\right), \mathrm{w}\)

Notes:

- Sampling distribution if \(z\) sampled and \(e\) fixed evidence

$$S_{W S}(\mathbf{z}, \mathbf{e})=\prod_{i=1}^{l} P\left(z_{i} \vert \text { Parents }\left(Z_{i}\right)\right)$$

- Now, samples have weights

$$w(\mathbf{z}, \mathbf{e})=\prod_{i=1}^{m} P\left(e_{i} \vert \text { Parents }\left(E_{i}\right)\right)$$

- Together, weighted sampling distribution is consistent

$$\begin{aligned} S_{\mathrm{WS}}(z, e) \cdot w(z, e) &=\prod_{i=1}^{l} P\left(z_{i} \vert \text { Parents }\left(z_{i}\right)\right) \prod_{i=1}^{m} P\left(e_{i} \vert \text { Parents }\left(e_{i}\right)\right) \\ &=P(\mathrm{z}, \mathrm{e}) \end{aligned}$$

- Likelihood weighting is good

- We have taken evidence into account as we generate the sample

- E.g. here, \(W\)’s value will get picked based on the evidence values of \(S\), \(R\)

- More of our samples will reflect the state of the world suggested by the evidence

- Likelihood weighting doesn’t solve all our problems

- Evidence influences the choice of downstream variables, but not upstream ones (C isn’t more likely to get a value matching the evidence)

- We would like to consider evidence when we sample every variable (leads to Gibbs sampling)

Gibbs Sampling:

Gibbs Sampling- Procedure: keep track of a full instantiation \(x_1, x_2, \ldots, x_n\). Start with an arbitrary instantiation consistent with the evidence. Sample one variable at a time, conditioned on all the rest, but keep evidence fixed. Keep repeating this for a long time.

- Property: in the limit of repeating this infinitely many times the resulting samples come from the correct distribution (i.e. conditioned on evidence).

- Rationale: both upstream and downstream variables condition on evidence.

-

In contrast: likelihood weighting only conditions on upstream evidence, and hence weights obtained in likelihood weighting can sometimes be very small. Sum of weights over all samples is indicative of how many “effective” samples were obtained, so we want high weight.

- Gibbs sampling produces sample from the query distribution \(P(Q \vert \text { e })\) in limit of re-sampling infinitely often

- Gibbs sampling is a special case of more general methods called Markov chain Monte Carlo (MCMC) methods

- Metropolis-Hastings is one of the more famous MCMC methods (in fact, Gibbs sampling is a special case of Metropolis-Hastings)

- Monte Carlo Methods are just sampling

Algorithm by Example:

Efficient Resampling of One Variable:

- Sample from \(\mathrm{P}(\mathrm{S} \vert+\mathrm{c},+\mathrm{r},-\mathrm{w})\):

$$\begin{aligned} P(S \vert+c,+r,-w) &=\frac{P(S,+c,+r,-w)}{P(+c,+r,-w)} \\ &=\frac{P(S,+c,+r,-w)}{\sum_{s} P(s,+c,+r,-w)} \\ &=\frac{P(+c) P(S \vert+c) P(+r \vert+c) P(-w \vert S,+r)}{\sum_{s} P(+c) P(s \vert+c) P(+r \vert+c) P(-w \vert s,+r)} \\ &=\frac{P(+c) P(S \vert+c) P(+r \vert+c) P(-w \vert S,+r)}{P(+c) P(+r \vert+c) \sum_{s} P(s \vert+c)} \\ &=\frac{P(S \vert+c) P(-w \vert S,+r)}{\sum_{s} P(s \vert+c) P(-w \vert s,+r)} \end{aligned}$$

- Many things cancel out – only CPTs with \(S\) remain!

- More generally: only CPTs that have resampled variable need to be considered, and joined together

Bayes’ Net Sampling Summary:

- Decision Networks / VPI (Value of Perfect Information):

Decision Networks / VPI (188)

Random Field Techniques

- An Introduction to Conditional Random Fields & graphical models (Thesis!)

- Classical Probabilistic Models and Conditional Random Fields (Technical Report!)

- HMM, MEMM, and CRF: A Comparative Analysis of Statistical Modeling Methods (Blog)

- Intro to Conditional Random Field (blog!)

-

Random Field:

A Random Field is a random function over an arbitrary domain (usually a multi-dimensional space such as \(\mathbb{R}^{n}\) ). That is, it is a function \(f(x)\) that takes on a random value at each point \(x \in \mathbb{R}^{n}\) (or some other domain). It is also sometimes thought of as a synonym for a stochastic process with some restriction on its index set. That is, by modern definitions, a random field is a generalization of a stochastic process where the underlying parameter need no longer be real or integer valued “but can instead take values that are multidimensional vectors on some manifold.Formally

Given a probability space \((\Omega, \mathcal{F}, P),\) an \(X\) -valued random field is a collection of \(X\) -valued random variables indexed by elements in a topological space \(T\). That is, a random field \(F\) is a collection$$\left\{F_{t} : t \in T\right\}$$

where each \(F_{t}\) is an \(X\)-valued random variable.

Notes:

- Random Field (wiki)

- Generative vs Discriminative Models for Sequence Labeling Tasks:

Generative model makes more restrictive assumption about the distribution of \(x\).

“Unlike traditional generative random fields, CRFs only model the conditional distribution \(p(t | x)\) and do not explicitly model the marginal \(p(x)\). Note that the labels \(t i\) are globally conditioned on the whole observation \(x\) in CRF. Thus, we do not assume that the observed data \(x\) are conditionally independent as in a generative random field.” - Minka