Introduction

- NLP:

- Natural Language Processing (NLP) is a field at the intersection of computer science, artificial intelligence, and linguistics. It is concerned with the interactions between computers and human (natural) languages and, in particular, with programming computers to usefully process large amounts of natural language data.

- Problems in NLP:

- Question Answering (QA): a system that provides answers to natural language questions

- Information Extraction (IE): the task of automatically extracting structured information from unstructured or semi-structured data

- Semantic Annotation: semantically enhanced information extraction (also known as semantic annotation) couples extracted entities with their semantic descriptions and connections from a knowledge graph. By adding metadata to the extracted concepts, it addresses many challenges in enterprise content management and knowledge discovery.

- Sentiment Analysis: the task of determining the underlying sentiment/emotion associated with a piece of text.

- Machine Translation (MT): the task of automatically translating text from one language to another

- Spam Detection: the task of detecting possible spam/irrelevant input from a set of inputs

- Parts-of-Speech (POS) Tagging: the task of assigning a part-of-speech tag (e.g. noun, verb, adjective) to each word in a text.

- Named Entity Recognition (NER): the task of locating and classifying named entities (e.g. people, organizations, locations) in a document; the entity types of interest depend on the domain.

- Coreference Resolution: the task of determining which expressions in a text refer to the same entity (e.g. working out what an ambiguous pronoun refers to).

- Word Sense Disambiguation (WSD): the task of determining the appropriate definition of ambiguous words based on the context they occur in.

- Parsing: also called syntax analysis or syntactic analysis; the process of analyzing a string of symbols (in natural language, computer languages, or data structures) according to the rules of a formal grammar.

- Paraphrasing: the task of rewording (transforming/translating) a document to a more suitable form while retaining the original information conveyed in the input.

- Summarization: the task of distilling the most important/relevant information in a document in a short and clear form.

- Dialog: a chatbot-like system that is capable of conversing using natural language.

- Language Modeling: the problem of inferring a probability distribution that describes a particular language.

- Text Classification: the problem of classifying text inputs into pre-determined classes/categories.

- Topic Modeling:

- Text Similarity:
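To make the Language Modeling entry above more concrete, here is a minimal sketch of a bigram language model estimated by maximum likelihood. The toy corpus, the `<s>`/`</s>` boundary markers, and the function names are assumptions made for illustration; real language models are trained on far larger corpora and use smoothing.

```python
from collections import Counter

def train_bigram_model(sentences):
    """Estimate P(next_word | word) by maximum likelihood from whitespace-tokenized sentences."""
    context_counts = Counter()  # how often each word appears as a context (left word)
    bigram_counts = Counter()   # how often each (left word, right word) pair appears
    for sentence in sentences:
        tokens = ["<s>"] + sentence.lower().split() + ["</s>"]
        for prev, nxt in zip(tokens, tokens[1:]):
            context_counts[prev] += 1
            bigram_counts[(prev, nxt)] += 1

    def prob(prev, nxt):
        # Unseen contexts get probability 0.0 (no smoothing in this sketch).
        if context_counts[prev] == 0:
            return 0.0
        return bigram_counts[(prev, nxt)] / context_counts[prev]

    return prob

corpus = ["the cat sat", "the dog sat", "the cat ran"]
prob = train_bigram_model(corpus)
print(prob("the", "cat"))  # 2 of the 3 occurrences of "the" are followed by "cat"
```

The returned `prob` function is the "probability distribution that describes the language" in miniature: for every context word it defines a distribution over the next word.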

Fully understanding and representing the meaning of language (or even defining it) is a difficult goal.

- Perfect language understanding is AI-complete

- (mostly) Solved Problems in NLP:

- Spam Detection

- Parts-of-Speech (POS) Tagging

- Named Entity Recognition (NER)

- Within-Reach Problems:

- Sentiment Analysis

- Coreference Resolution

- Word Sense Disambiguation (WSD)

- Parsing

- Machine Translation (MT)

- Information Extraction (IE)

- Dialog

- Question Answering (QA)

- Open Problems in NLP:

- Paraphrasing

- Summarization

- Issues in NLP (why is NLP hard?):

- Non-Standard English: “Great Job @ahmed_badary! I luv u 2!! were SOO PROUD of dis.”

- Segmentation Issues: “New York-New Haven” vs “New-York New-Haven”

- Idioms: “dark horse”, “getting cold feet”, “losing face”

- Neologisms: “unfriend”, “retweet”, “google”, “bromance”

- World Knowledge: “Ahmed and Zach are brothers”, “Ahmed and Zach are fathers”

- Tricky Entity Names: “Where is Life of Pie playing tonight?”, “Let it be was a hit song!”

- Tools we need for NLP:

- Knowledge about Language.

- Knowledge about the World.

- A way to combine knowledge sources.

- Methods:

- In general we need to construct Probabilistic Models built from language data.

- We do so by using rough text features.

All the models, methods, and tools named above will be introduced later as you progress through the text.
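As one illustration of a probabilistic model built from language data using rough text features, here is a minimal sketch of a Naive Bayes classifier over bag-of-words counts, applied to spam detection. Naive Bayes is chosen here only as a simple example; the notes do not name a specific model at this point. The tiny training set, the `spam`/`ham` labels, and the function names are assumptions made for illustration.

```python
import math
from collections import Counter

def train_naive_bayes(examples):
    """examples: list of (text, label). Returns class counts, per-class word counts, vocabulary."""
    class_counts = Counter()
    word_counts = {}
    vocab = set()
    for text, label in examples:
        class_counts[label] += 1
        counts = word_counts.setdefault(label, Counter())
        for word in text.lower().split():  # rough feature: lowercase whitespace tokens
            counts[word] += 1
            vocab.add(word)
    return class_counts, word_counts, vocab

def predict(text, class_counts, word_counts, vocab):
    """Return the label with the highest log-probability under the model."""
    total = sum(class_counts.values())
    best_label, best_score = None, -math.inf
    for label in class_counts:
        score = math.log(class_counts[label] / total)  # log prior
        denom = sum(word_counts[label].values()) + len(vocab)
        for word in text.lower().split():
            if word in vocab:  # ignore words never seen in training
                # add-one (Laplace) smoothed log likelihood
                score += math.log((word_counts[label][word] + 1) / denom)
        if score > best_score:
            best_label, best_score = label, score
    return best_label

training = [
    ("win money now", "spam"),
    ("free money offer", "spam"),
    ("meeting at noon", "ham"),
    ("lunch meeting tomorrow", "ham"),
]
model = train_naive_bayes(training)
print(predict("free money", *model))        # spam
print(predict("meeting tomorrow", *model))  # ham
```

The features here are deliberately crude (word counts, no word order), which is the sense in which such models rely on "rough" text features.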

- NLP in the Industry:

- Search

- Online ad Matching

- Automated/Assisted Translation

- Sentiment analysis for marketing or finance/trading

- Speech recognition

- Chatbots/Dialog Agents

- Automatic Customer Support

- Controlling Devices

- Ordering Goods

NLP and Deep Learning

- What is Special about Human Language:

- Human Language is a system specifically constructed to convey the speaker’s/writer’s meaning.

It is deliberate communication, not just an environmental signal.

- Human Language is a discrete/symbolic/categorical signaling system

- The categorical symbols of a language can be encoded as a signal for communication in several ways:

- Sound

- Gesture

- Images (Writing)

Yet, the symbol is invariant across different encodings!

- However, a brain encoding appears to be a continuous pattern of activation, and the symbols are transmitted via continuous signals of sound/vision.

- Issues of NLP in Machine Learning:

- As noted above, although human language is largely symbolic, it is still interpreted by the brain as a continuous signal.

This means that we cannot encode this information in a purely discrete manner, but rather must learn it in a sequential, continuous way.

- The large vocabulary and symbolic encoding of words create a problem for machine learning: sparsity!
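The sparsity problem can be illustrated with one-hot vectors, a common purely symbolic encoding of words. The sketch below is an assumption-laden toy (a four-word vocabulary and hypothetical helper names), but it shows the two symptoms: each vector is almost entirely zeros, and any two different words look equally unrelated.

```python
def one_hot(word, vocabulary):
    """Return a vector with a single 1 at the word's index in the vocabulary."""
    vector = [0] * len(vocabulary)
    vector[vocabulary.index(word)] = 1
    return vector

def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))

vocabulary = ["hotel", "motel", "banana", "walk"]
hotel = one_hot("hotel", vocabulary)
motel = one_hot("motel", vocabulary)

print(hotel)              # [1, 0, 0, 0]
print(dot(hotel, motel))  # 0: related words share nothing in this encoding
print(dot(hotel, hotel))  # 1
```

With a realistic vocabulary of hundreds of thousands of words, each vector has a single nonzero entry, and the encoding carries no notion of similarity between words.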

- Machine Learning vs Deep Learning:

- Most Machine Learning methods work well because of human-designed representations and input features.

Thus, the learning here is done, mostly, by the people/scientists/engineers who are designing the features and not by the machines.

This reduces Machine Learning to little more than a numerical optimization method: optimizing weights to best make a final prediction.

- How does that differ from Deep Learning (DL)?

- Representation learning attempts to automatically learn good features or representations

- Deep learning algorithms attempt to learn (multiple levels of) representation and an output

- Raw Inputs: DL can deal directly with raw inputs (e.g. sound, characters, words)

- Why Deep-Learning?

- Manually designed features are often over-specified, incomplete and take a long time to design and validate

- Deep learning provides a very flexible, (almost?) universal, learnable framework for representing world, visual and linguistic information.

- Deep learning can learn unsupervised (from raw text) and supervised (with specific labels like positive/negative)

- In ~2010 deep learning techniques started outperforming other machine learning techniques.

- Why is NLP Hard (revisited):

- Complexity in representing, learning and using linguistic/situational/world/visual knowledge

- Human languages are ambiguous (unlike programming and other formal languages)

- Human language interpretation depends on real world, common sense, and contextual knowledge

- Improvements in Recent Years in NLP:

- These improvements span different:

- Levels: speech, words, syntax, semantics

- Tools: POS, entities, parsing

- Applications: MT, sentiment analysis, dialogue agents, QA

Representations of NLP Levels

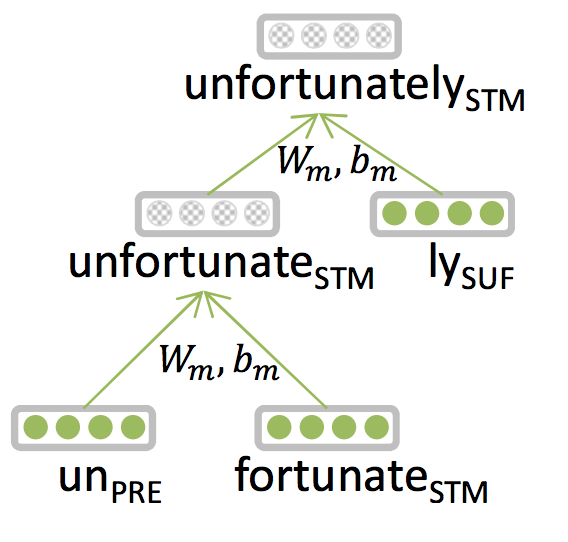

- Morphology:

- Traditionally: Words are made of morphemes.

- uninterested -> un (prefix) + interest (stem) + ed (suffix)

- DL:

- Every morpheme is a vector

- A Neural Network combines two vectors into one vector

- Luong et al. 2013
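The idea that "a neural network combines two vectors into one vector" can be sketched as a single layer applied to the concatenation of the two inputs, `tanh(W [left; right] + b)`. This is a minimal illustration, not the exact model of the cited paper: the dimension, the randomly initialized (untrained) embeddings and weights, and the order in which morphemes are combined are all assumptions.

```python
import math
import random

def compose(left, right, weights, bias):
    """Combine two d-dimensional vectors into one: tanh(W [left; right] + b)."""
    concatenated = left + right  # list concatenation, length 2d
    return [
        math.tanh(sum(w * x for w, x in zip(row, concatenated)) + b)
        for row, b in zip(weights, bias)
    ]

random.seed(0)
d = 3  # hypothetical embedding dimension

# Hypothetical, randomly initialized parameters; in a real model these are learned.
embeddings = {m: [random.uniform(-1, 1) for _ in range(d)] for m in ["un", "interest", "ed"]}
weights = [[random.uniform(-1, 1) for _ in range(2 * d)] for _ in range(d)]
bias = [0.0] * d

# Build "uninterested" bottom-up: (interest + ed), then (un + result).
stem_suffix = compose(embeddings["interest"], embeddings["ed"], weights, bias)
word = compose(embeddings["un"], stem_suffix, weights, bias)
print(len(word))  # 3: the composed word vector has the same size as a morpheme vector
```

Because the output has the same dimension as each input, the same function can be applied recursively, which is what allows the same kind of model to be reused for phrases and sentences.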

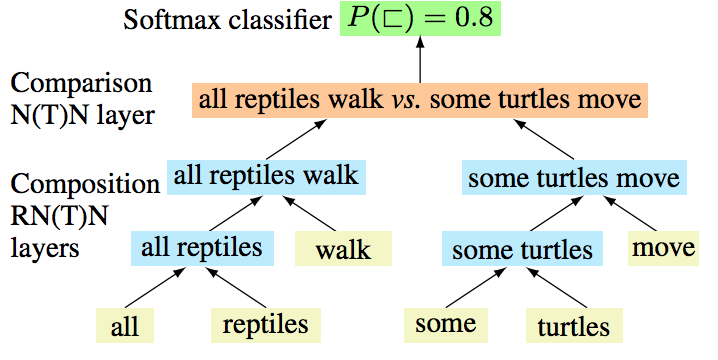

- Semantics:

- Traditionally: Lambda Calculus

- Carefully engineered functions

- Take as inputs specific other functions

- No notion of similarity or fuzziness of language

- DL:

- Every word and every phrase and every logical expression is a vector

- A Neural Network combines two vectors into one vector

- Bowman et al. 2014

NLP Tools

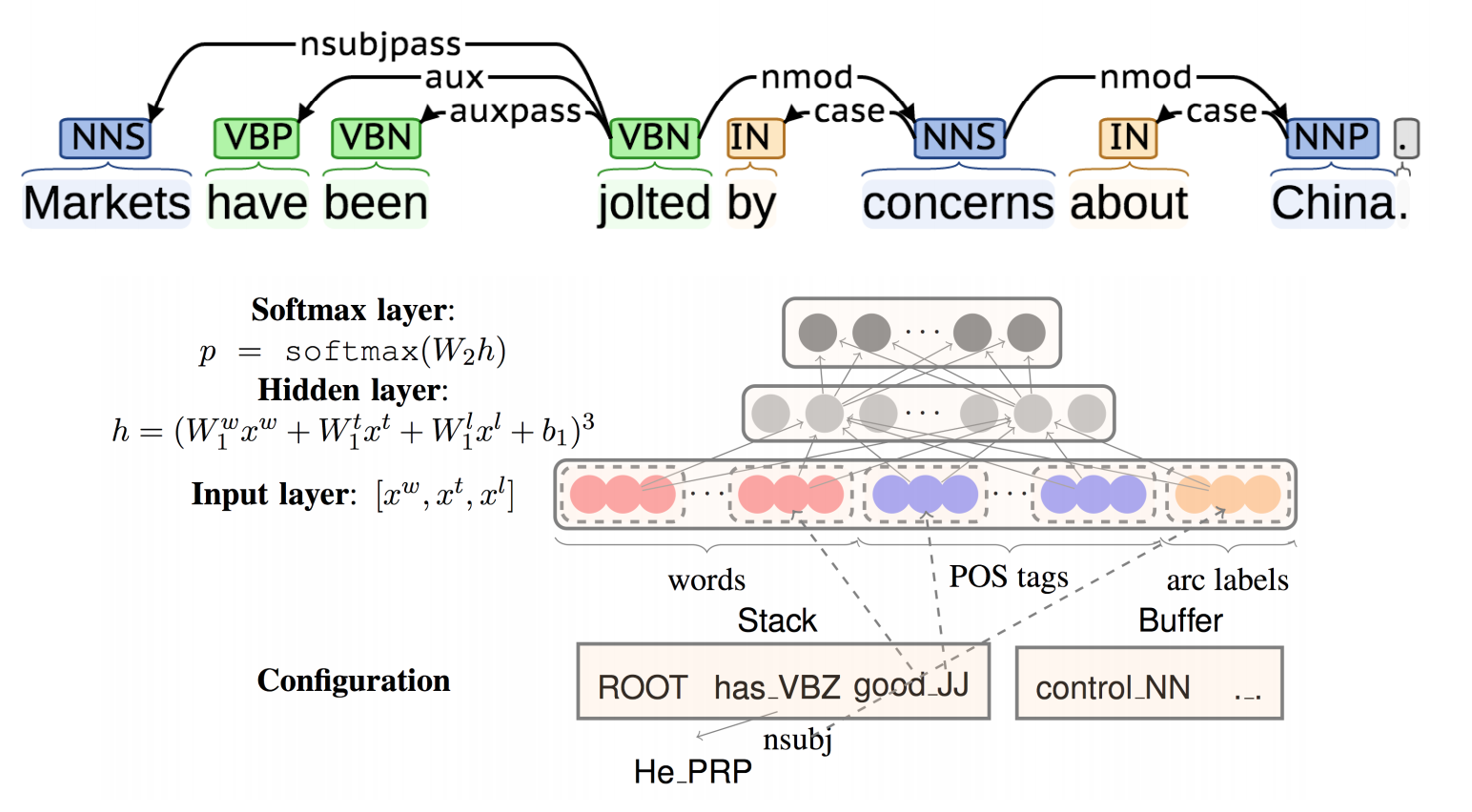

- Parsing for Sentence Structure:

- Neural networks can accurately determine the structure of sentences, supporting interpretation.

NLP Applications

- Sentiment Analysis:

- Traditional: Curated sentiment dictionaries combined with either bag-of-words representations (which ignore word order) or hand-designed negation features (which cannot capture everything)

- DL: The same deep learning model that was used for morphology, syntax, and logical semantics can be used: the Recursive Neural Network (RecursiveNN).
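The limitation of the traditional approach can be shown with a minimal sketch of dictionary-based scoring over a bag of words. The miniature lexicon and the example sentences are hypothetical; the point is only that once word order is discarded, sentences with opposite meanings become indistinguishable.

```python
from collections import Counter

# Hypothetical miniature sentiment dictionary (word -> polarity).
SENTIMENT_LEXICON = {"good": 1, "great": 1, "bad": -1, "terrible": -1}

def bag_of_words(text):
    """Represent a text as word counts, discarding word order."""
    return Counter(text.lower().split())

def lexicon_score(text):
    """Sum the polarities of all words found in the sentiment dictionary."""
    return sum(
        SENTIMENT_LEXICON.get(word, 0) * count
        for word, count in bag_of_words(text).items()
    )

a = "the plot was good not bad"
b = "the plot was bad not good"
print(bag_of_words(a) == bag_of_words(b))  # True: identical representations
print(lexicon_score(a), lexicon_score(b))  # 0 0
print(lexicon_score("not good"))           # 1: the negation is ignored
```

Hand-written negation rules can patch individual cases like "not good", but models that compose meaning along the sentence structure aim to handle such cases without enumerating them.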

- Question Answering:

- Traditional: A lot of feature engineering to capture world and other knowledge, e.g., regular expressions, Berant et al. (2014)

- DL: Facts are stored in vectors.

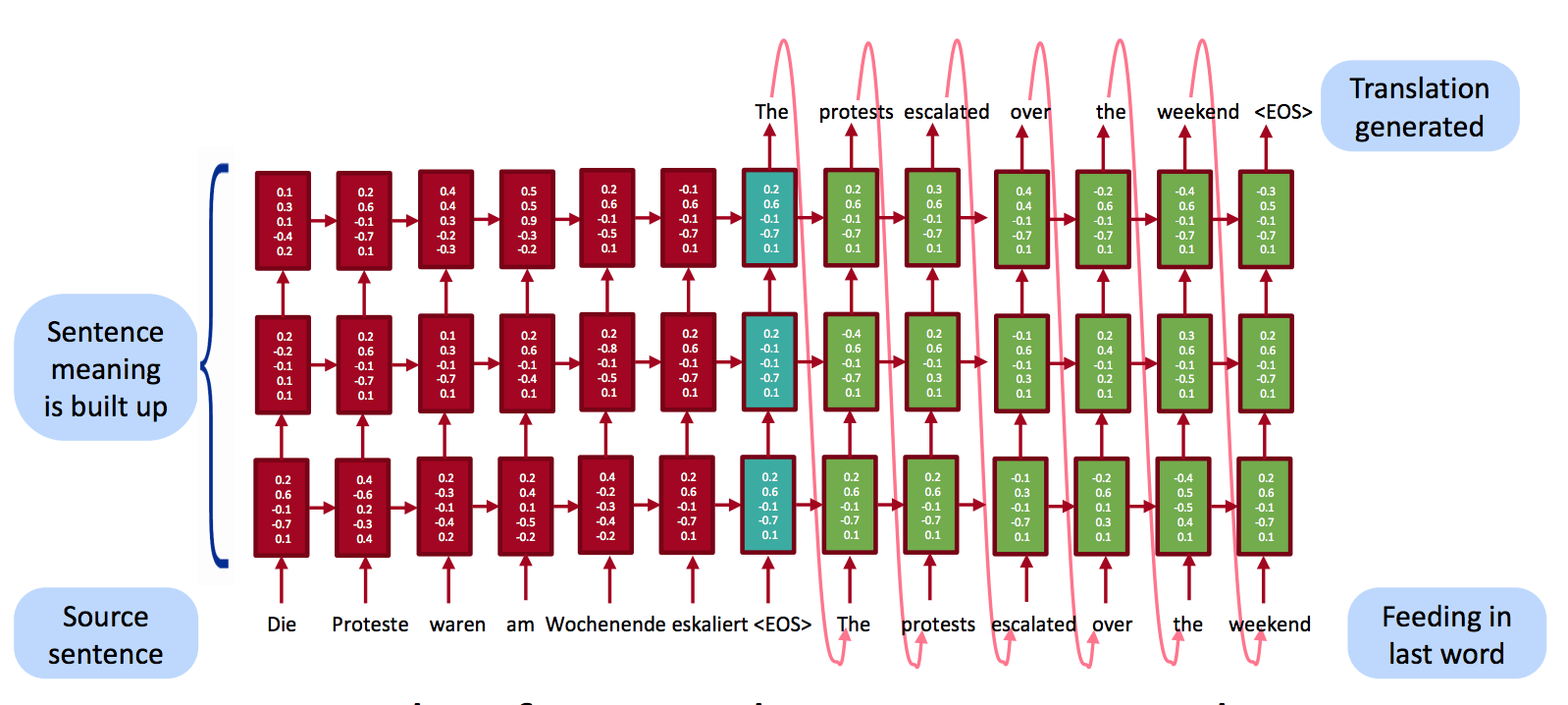

- Machine Translation:

- Traditional: Complex approaches with very high error rates.

- DL: Neural Machine Translation.

The source sentence is mapped to a vector, from which the output sentence is then generated.

[Sutskever et al. 2014, Bahdanau et al. 2014, Luong and Manning 2016]
