Introduction and Definitions


- Computational Graph:

- Main Components:

- Graph Nodes:

- are Operations, which can have any number of Inputs and Outputs.

- They take in tensors and produce tensors.

- Graph Edges:

- are Tensors (values) which flow between nodes.

- Components of the Graph:

- Variables: are stateful nodes which output their current value.

- State is retained across multiple executions of a graph.

- It is easy to restore saved values to variables

- They can be saved to disk during and after training

- By default, gradient updates (e.g. from optimizer.minimize) apply to all trainable variables in the graph

- Variables are still, by definition, operations

- They mostly hold Parameters (weights and biases)

- Placeholders: are nodes whose value is fed in at execution time.

- They do not have initial values

- They are assigned:

- a data type

- a tensor shape

- They mostly hold Inputs and labels

- Mathematical Operations:

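To make the node and edge vocabulary concrete, here is a toy graph in plain Python. It is not TensorFlow's API: Node, Variable, Placeholder, Op and run are hypothetical names chosen for this sketch. Operations are nodes, the values passed from one node to the next play the role of edges, a Variable keeps its value between runs, and a Placeholder has no value until one is fed in.

```python
from typing import Callable, Dict, List, Optional


class Node:
    """Toy graph node; `inputs` are the nodes whose outputs flow into it."""

    def __init__(self, inputs: Optional[List["Node"]] = None):
        self.inputs = inputs or []

    def evaluate(self, values: List[float], feed: Dict["Node", float]) -> float:
        raise NotImplementedError


class Variable(Node):
    """Stateful node: outputs its current value, which persists between runs."""

    def __init__(self, initial_value: float):
        super().__init__()
        self.value = initial_value

    def evaluate(self, values, feed):
        return self.value


class Placeholder(Node):
    """Node with no initial value; it must be fed when the graph is run."""

    def evaluate(self, values, feed):
        if self not in feed:
            raise ValueError("placeholder was not fed a value")
        return feed[self]


class Op(Node):
    """Mathematical operation: combines the values arriving on its inputs."""

    def __init__(self, fn: Callable[..., float], *inputs: Node):
        super().__init__(list(inputs))
        self.fn = fn

    def evaluate(self, values, feed):
        return self.fn(*values)


def run(node: Node, feed: Dict[Node, float]) -> float:
    """Evaluate `node` by first evaluating every node that feeds into it."""
    input_values = [run(parent, feed) for parent in node.inputs]
    return node.evaluate(input_values, feed)


# y = w * x + b, with w and b as Variables and x as a Placeholder.
w = Variable(2.0)
b = Variable(1.0)
x = Placeholder()
y = Op(lambda p, q: p + q, Op(lambda p, q: p * q, w, x), b)
print(run(y, {x: 3.0}))  # 7.0
w.value = 5.0            # the new state is kept for the next run
print(run(y, {x: 3.0}))  # 16.0
```

Note how the Variable is just another node in the graph, which mirrors the point that variables are still operations.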

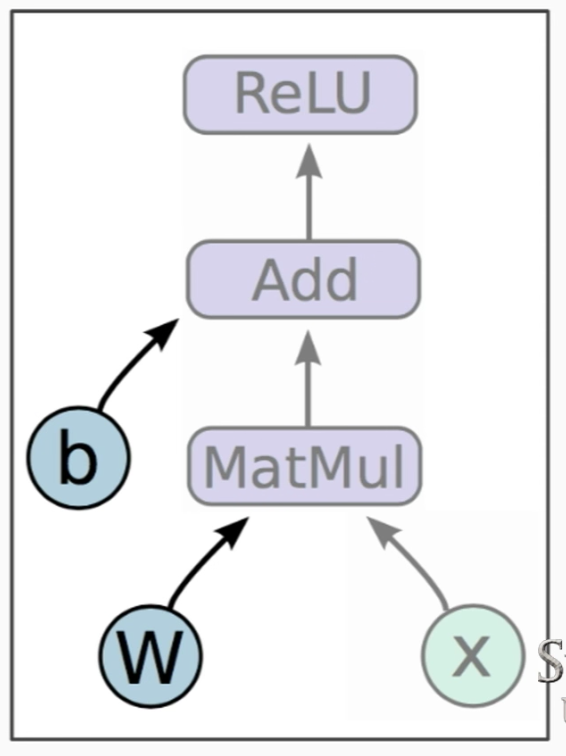

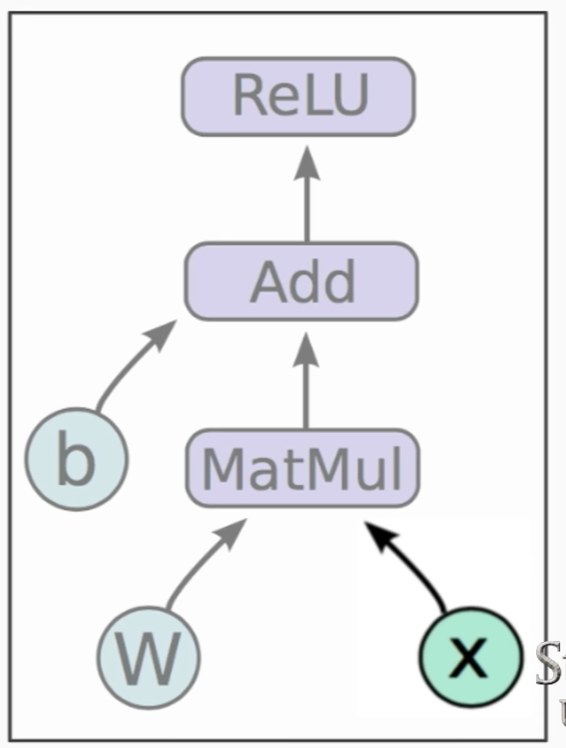

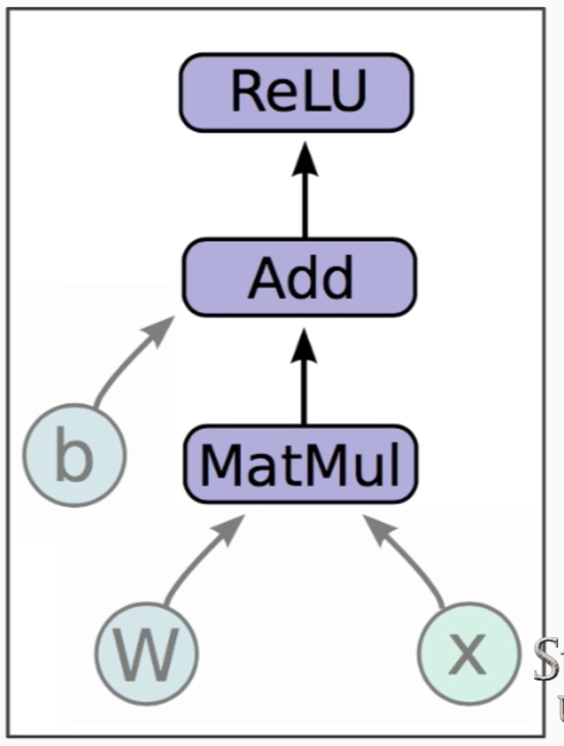

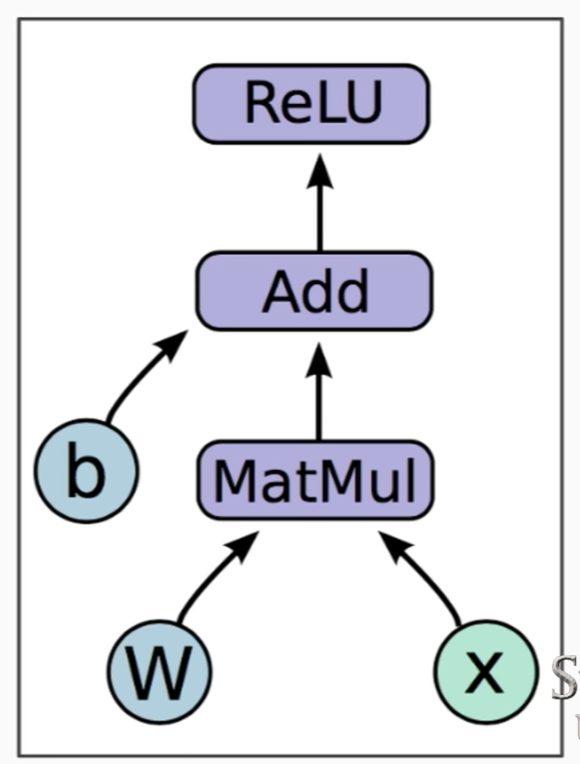

- Example:

- \(h = \text{ReLU}(Wx + b)\)
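The arithmetic behind this example can be checked in plain Python, without TensorFlow. In a TensorFlow graph, W and b would typically be Variables, x a Placeholder, and the matrix multiply, add and ReLU would be operation nodes. The shapes and numbers below are made up for illustration.

```python
from typing import List

Matrix = List[List[float]]
Vector = List[float]


def relu(v: Vector) -> Vector:
    """Element-wise ReLU: max(0, v_i)."""
    return [max(0.0, vi) for vi in v]


def affine(W: Matrix, x: Vector, b: Vector) -> Vector:
    """Compute W x + b for a row-major matrix W."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) + b_i
            for row, b_i in zip(W, b)]


# Hypothetical values: one unit stays positive, the other is clipped to 0.
W = [[1.0, -1.0, 0.5],
     [-2.0, 0.0, 1.0]]
x = [2.0, 1.0, 2.0]
b = [0.5, -1.0]
h = relu(affine(W, x, b))
print(h)  # [2.5, 0.0]
```

The first row gives 2 - 1 + 1 + 0.5 = 2.5; the second gives -4 + 0 + 2 - 1 = -3, which ReLU clips to 0.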

Estimators


- Input Function:

- An input function is a function that returns a tf.data.Dataset object which outputs the following two-element tuple:

- features - A Python dict in which:

- Each key is the name of a feature

- Each value is an array containing all of that feature's values

- label - An array containing the values of the label for every example.

    import tensorflow as tf

    def train_input_fn(features, labels, batch_size):
        """An input function for training."""
        # Convert the inputs to a Dataset.
        dataset = tf.data.Dataset.from_tensor_slices((dict(features), labels))
        # Shuffle, repeat, and batch the examples.
        return dataset.shuffle(1000).repeat().batch(batch_size)

- The job of the input function is to create the TF operations that generate data for the model
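A rough plain-Python stand-in for the pipeline in train_input_fn, meant to show the shape of the data rather than how tf.data works internally. The helper names (from_slices, shuffle, batch) and the toy feature columns are hypothetical; shuffle imitates a buffered shuffle, and repeat() is left out because it would make the stream infinite.

```python
import random
from typing import Dict, Iterator, List, Sequence, Tuple

Example = Tuple[Dict[str, object], object]


def from_slices(features: Dict[str, Sequence], labels: Sequence) -> Iterator[Example]:
    """Yield one (features, label) pair per example, slicing each column."""
    for i in range(len(labels)):
        yield {name: column[i] for name, column in features.items()}, labels[i]


def shuffle(stream: Iterator[Example], buffer_size: int,
            rng: random.Random) -> Iterator[Example]:
    """Buffered shuffle: hold up to buffer_size examples, emit a random one."""
    buffer: List[Example] = []
    for example in stream:
        buffer.append(example)
        if len(buffer) >= buffer_size:
            yield buffer.pop(rng.randrange(len(buffer)))
    while buffer:
        yield buffer.pop(rng.randrange(len(buffer)))


def batch(stream: Iterator[Example],
          batch_size: int) -> Iterator[Tuple[Dict[str, list], list]]:
    """Group examples into (dict of per-feature lists, list of labels)."""
    feats: Dict[str, list] = {}
    labs: list = []
    for example_features, label in stream:
        for name, value in example_features.items():
            feats.setdefault(name, []).append(value)
        labs.append(label)
        if len(labs) == batch_size:
            yield feats, labs
            feats, labs = {}, []
    if labs:  # final, partial batch
        yield feats, labs


# Hypothetical toy data: two feature columns and one label per example.
features = {"length": [5.1, 4.9, 6.3], "width": [3.5, 3.0, 2.5]}
labels = [0, 0, 1]
pipeline = batch(shuffle(from_slices(features, labels), 1000, random.Random(0)), 2)
for batch_features, batch_labels in pipeline:
    print(batch_features, batch_labels)
```

Each batch keeps the two-element (features, label) structure described above: the features part is still a dict keyed by feature name, now holding one value per example in the batch.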


Model Function:


Commands and Notes


- TensorBoard:

file_writer = tf.summary.FileWriter('log_simple_graph', sess.graph)

tensorboard --logdir="path"


- Testing if GPU works:

    import tensorflow as tf

    # A small matmul: (2 x 3) times (3 x 2).
    a = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[2, 3], name='a')
    b = tf.constant([1.0, 2.0, 3.0, 4.0, 5.0, 6.0], shape=[3, 2], name='b')
    c = tf.matmul(a, b)
    # log_device_placement=True logs the device (CPU or GPU) each op runs on.
    sess = tf.Session(config=tf.ConfigProto(log_device_placement=True))
    print(sess.run(c))
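For reference, the product the GPU test prints can be computed by hand. This plain-Python sketch assumes, as tf.constant does, that the six values fill each shape in row-major order; matmul and reshape here are local helpers, not TensorFlow functions.

```python
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product: (n x k) times (k x m) gives (n x m)."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def reshape(flat: List[float], rows: int, cols: int) -> Matrix:
    """Row-major reshape of a flat list."""
    return [flat[r * cols:(r + 1) * cols] for r in range(rows)]


values = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]
a = reshape(values, 2, 3)  # [[1, 2, 3], [4, 5, 6]]
b = reshape(values, 3, 2)  # [[1, 2], [3, 4], [5, 6]]
print(matmul(a, b))        # [[22.0, 28.0], [49.0, 64.0]]
```

If the device log shows the MatMul op placed on a GPU and the printed values match this result, the GPU setup is working.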

- GPU Usage:

!nvidia-smi



- Session:

- To evaluate tensors, instantiate a tf.Session object, informally known as a session.

- A session encapsulates the state of the TensorFlow runtime and runs TensorFlow operations.

- First, create a session:

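sess = tf.Session()

- Run the session:

sess.run(fetches, feed_dict=None)

- fetches is a single tensor or operation, or a (possibly nested) list, tuple, or dict of them

- feed_dict optionally maps tensors, typically placeholders, to the values fed in for this run

- It evaluates the fetched tensors and returns their values in the same structure as fetches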

- Some TensorFlow functions return tf.Operations instead of tf.Tensors. The result of calling run on an Operation is None. You run an operation to cause a side effect, not to retrieve a value. Examples include the initialization and training ops.
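The fetch and feed behaviour of sess.run can be mimicked with a small toy in plain Python. ToySession, Tensor and Operation are hypothetical stand-ins, not TensorFlow classes. The sketch only illustrates three points from the notes: results come back in the same nested structure as the fetches, fed values replace computed ones, and running an operation returns None.

```python
from typing import Any, Callable, Dict, Optional


class Tensor:
    """Hypothetical toy tensor: computes its value from the feed when fetched."""

    def __init__(self, compute: Callable[[Dict["Tensor", Any]], Any]):
        self.compute = compute


class Operation:
    """Hypothetical toy op: running it only causes a side effect."""

    def __init__(self, effect: Callable[[], None]):
        self.effect = effect


class ToySession:
    def run(self, fetches: Any, feed_dict: Optional[Dict[Tensor, Any]] = None) -> Any:
        """Evaluate fetches, returning results in the same nested structure."""
        feed = feed_dict or {}
        if isinstance(fetches, Operation):
            fetches.effect()
            return None  # running an Operation yields None
        if isinstance(fetches, Tensor):
            # A fed value takes the place of the tensor's computed value.
            return feed[fetches] if fetches in feed else fetches.compute(feed)
        if isinstance(fetches, dict):
            return {key: self.run(value, feed) for key, value in fetches.items()}
        if isinstance(fetches, (list, tuple)):
            return type(fetches)(self.run(item, feed) for item in fetches)
        raise TypeError(f"cannot fetch {fetches!r}")


def need_feed(feed: Dict[Tensor, Any]) -> Any:
    raise KeyError("placeholder must be fed")


state = {"counter": 0}
x = Tensor(need_feed)  # behaves like a placeholder
double_x = Tensor(lambda feed: 2 * feed[x])
counter = Tensor(lambda feed: state["counter"])
increment = Operation(lambda: state.update(counter=state["counter"] + 1))

sess = ToySession()
print(sess.run({"x2": double_x, "pair": (x, counter)}, feed_dict={x: 3}))
# {'x2': 6, 'pair': (3, 0)}
print(sess.run(increment))  # None
print(sess.run(counter))    # 1
```

The increment operation is only useful for its side effect, which the following fetch of counter makes visible.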


Tips and Tricks

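- Saving the model:

- When the model is trained again with the same model directory, it resumes from the most recent checkpoint

- Checkpoints only work with the architecture that wrote them; to run a model with a different architecture, use a different model directory (and keep each version's code, e.g. in its own git branch)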
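Here is a toy version of this checkpoint behaviour, written in plain Python with JSON files. The model.ckpt-<step> naming echoes the files TensorFlow writes, but save_checkpoint, latest_checkpoint and restore are hypothetical helpers. Comparing parameter names and lengths is only a crude stand-in for a real architecture check.

```python
import json
import os
import re
import tempfile
from typing import Dict, List, Optional

# Hypothetical file-name scheme: model.ckpt-<step>.json
CKPT = re.compile(r"model\.ckpt-(\d+)\.json$")


def save_checkpoint(model_dir: str, step: int, params: Dict[str, List[float]]) -> None:
    """Write the parameters for `step` to model_dir/model.ckpt-<step>.json."""
    os.makedirs(model_dir, exist_ok=True)
    with open(os.path.join(model_dir, f"model.ckpt-{step}.json"), "w") as f:
        json.dump(params, f)


def latest_checkpoint(model_dir: str) -> Optional[str]:
    """Return the checkpoint path with the highest step, or None if none exist."""
    if not os.path.isdir(model_dir):
        return None
    steps = [int(m.group(1)) for name in os.listdir(model_dir) if (m := CKPT.match(name))]
    if not steps:
        return None
    return os.path.join(model_dir, f"model.ckpt-{max(steps)}.json")


def restore(model_dir: str, params: Dict[str, List[float]]) -> int:
    """Load the latest checkpoint into `params` if shapes match; return its step."""
    path = latest_checkpoint(model_dir)
    if path is None:
        return 0  # nothing saved yet: start from step 0
    with open(path) as f:
        saved = json.load(f)
    if {k: len(v) for k, v in saved.items()} != {k: len(v) for k, v in params.items()}:
        raise ValueError(f"{path} was written by a different architecture")
    params.update(saved)
    return int(CKPT.search(path).group(1))


model_dir = os.path.join(tempfile.mkdtemp(), "run_a")
params = {"w": [0.0, 0.0], "b": [0.0]}
save_checkpoint(model_dir, 100, {"w": [0.1, 0.2], "b": [0.3]})
save_checkpoint(model_dir, 200, {"w": [0.4, 0.5], "b": [0.6]})
print(restore(model_dir, params), params)  # resumes from step 200

wider = {"w": [0.0, 0.0, 0.0], "b": [0.0]}  # changed architecture
try:
    restore(model_dir, wider)
except ValueError as err:
    print("use a new model directory:", err)
```

In this sketch, a new model directory starts cleanly at step 0.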
