
Introduction: Deep Feedforward Neural Networks

- (Deep) Feedforward Neural Networks:

The goal of an FNN is to approximate some function \(f^{\ast}\). For example, for a classifier, \(y=f^{\ast}(\boldsymbol{x})\) maps an input \(\boldsymbol{x}\) to a category \(y\).

An FNN defines a mapping \(\boldsymbol{y}=f(\boldsymbol{x} ; \boldsymbol{\theta})\) and learns the value of the parameters \(\boldsymbol{\theta}\) that result in the best function approximation.

- FNNs are called networks because they are typically represented by composing together many different functions.

- The model is associated with a DAG describing how the functions are composed together.

- A chain structure, in which each function is applied to the output of the previous one, is the most commonly used structure of neural networks.

E.g., we might have three functions \(f^{(1)}, f^{(2)},\) and \(f^{(3)}\) connected in a chain to form \(f(\boldsymbol{x})=f^{(3)}\left(f^{(2)}\left(f^{(1)}(\boldsymbol{x})\right)\right)\). In this case, \(f^{(1)}\) is called the first layer of the network, \(f^{(2)}\) the second layer, and so on.

- The overall length of the chain is the depth of the model.

- During training, we drive \(f(\boldsymbol{x})\) to match \(f^{\ast}(\boldsymbol{x})\).

The training data provides us with noisy, approximate examples of \(f^{\ast}(\boldsymbol{x})\) evaluated at different training points.
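A minimal sketch of the chain structure, assuming three toy scalar layers chosen purely for illustration. The functions `f1`, `f2`, `f3` and the helper `chain` are hypothetical names, not trained layers or a library API:

```python
import math

# Three hypothetical layers; in a real FNN each would carry learned parameters.
def f1(x: float) -> float:
    return 2.0 * x + 1.0   # first layer: an affine map

def f2(h: float) -> float:
    return max(0.0, h)     # second layer: a ReLU-style nonlinearity

def f3(h: float) -> float:
    return math.tanh(h)    # third (output) layer

def chain(*layers):
    """Compose layers in order: chain(f1, f2, f3)(x) == f3(f2(f1(x)))."""
    def f(x):
        for layer in layers:
            x = layer(x)
        return x
    f.depth = len(layers)  # length of the chain = depth of the model
    return f

f = chain(f1, f2, f3)
print(f(0.5), f.depth)
```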

- FNNs from Linear Models:

Consider the biggest limitation of linear models: their capacity is limited to linear functions.

To extend linear models to represent nonlinear functions of \(\boldsymbol{x}\), we can:

- Apply the linear model not to \(\boldsymbol{x}\) itself but to a transformed input \(\phi(\boldsymbol{x})\), where \(\phi\) is a nonlinear transformation.

- Equivalently, apply the kernel trick to obtain a nonlinear learning algorithm based on implicitly applying the \(\phi\) mapping.

We can think of \(\phi\) as providing a set of features describing \(\boldsymbol{x}\), or as providing a new representation for \(\boldsymbol{x}\).
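To make this concrete, here is a sketch using XOR, a standard example that no linear model on the raw inputs \((x_1, x_2)\) can represent exactly. The feature map `phi` (which appends the product \(x_1 x_2\)) and the weights `w` are hand-picked assumptions for illustration, not learned:

```python
# XOR truth table: inputs (x1, x2) and targets y
data = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

def phi(x):
    """Hand-picked nonlinear feature map: (x1, x2) -> (x1, x2, x1*x2)."""
    x1, x2 = x
    return (x1, x2, x1 * x2)

# A *linear* model applied to phi(x): y = w . phi(x)
w = (1, 1, -2)

def linear_on_features(x):
    return sum(wi * fi for wi, fi in zip(w, phi(x)))

for x, y in data:
    assert linear_on_features(x) == y   # exact fit on all four points
```

Fixing \(\phi\) by hand like this is a miniature version of manually engineering \(\phi\); the point of deep learning is to learn it instead.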

Choosing the mapping \(\phi\):

- Use a very generic \(\phi\), such as the infinite-dimensional \(\phi\) implicitly used by kernel machines based on the RBF kernel.

If \(\phi(\boldsymbol{x})\) is of high enough dimension, we can always have enough capacity to fit the training set, but generalization to the test set often remains poor.

Very generic feature mappings are usually based only on the principle of local smoothness and do not encode enough prior information to solve advanced problems.

- Manually engineer \(\phi\).

This requires decades of human effort for each separate task, and the results are often poor and hard to scale.

- The strategy of deep learning is to learn \(\phi\).

- We have a model:

$$y=f(\boldsymbol{x} ; \boldsymbol{\theta}, \boldsymbol{w})=\phi(\boldsymbol{x} ; \boldsymbol{\theta})^{\top} \boldsymbol{w}$$

We now have parameters \(\boldsymbol{\theta}\) that we use to learn \(\phi\) from a broad class of functions, and parameters \(\boldsymbol{w}\) that map from \(\phi(\boldsymbol{x})\) to the desired output.

This is an example of a deep FNN, with \(\phi\) defining a hidden layer.

- This approach is the only one of the three that gives up on the convexity of the training problem, but the benefits outweigh the harms.

- In this approach, we parametrize the representation as \(\phi(\boldsymbol{x}; \boldsymbol{\theta})\) and use the optimization algorithm to find the \(\boldsymbol{\theta}\) that corresponds to a good representation.

- Advantages:

- Capturing the benefit of the first approach:

Being highly generic. We do so by using a very broad family \(\phi(\boldsymbol{x};\boldsymbol{\theta})\).

- Capturing the benefit of the second approach:

Human practitioners can encode their knowledge to help generalization by designing families \(\phi(\boldsymbol{x}; \boldsymbol{\theta})\) that they expect will perform well.

The advantage is that the human designer only needs to find the right general function family rather than finding precisely the right function.
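A minimal sketch of the model \(y=\phi(\boldsymbol{x};\boldsymbol{\theta})^{\top}\boldsymbol{w}\), assuming \(\phi\) is one hidden layer of ReLU units with weights `theta_W` and biases `theta_c` (hypothetical names) followed by a linear readout `w`. The parameter values are one known solution to XOR, plugged in by hand; in practice both \(\boldsymbol{\theta}\) and \(\boldsymbol{w}\) would be found by gradient-based training:

```python
def relu(z):
    return max(0.0, z)

def phi(x, theta_W, theta_c):
    """Hidden layer: phi(x; theta) = max(0, W^T x + c), elementwise."""
    n_hidden = len(theta_c)
    return [
        relu(sum(theta_W[i][j] * x[i] for i in range(len(x))) + theta_c[j])
        for j in range(n_hidden)
    ]

def model(x, theta_W, theta_c, w):
    """y = phi(x; theta)^T w: a linear readout of the learned features."""
    return sum(h_j * w_j for h_j, w_j in zip(phi(x, theta_W, theta_c), w))

# Placeholder parameters (set by hand here, not the result of training).
theta_W = [[1.0, 1.0], [1.0, 1.0]]  # theta_W[i][j]: input i -> hidden unit j
theta_c = [0.0, -1.0]
w = [1.0, -2.0]

outputs = [model(x, theta_W, theta_c, w) for x in [(0, 0), (0, 1), (1, 0), (1, 1)]]
```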


Thus, we can motivate Deep NNs as a way to do automatic, non-linear feature extraction from the inputs.

This general principle of improving models by learning features extends beyond the feedforward networks to all models in deep learning.

FNNs are the application of this principle to learning deterministic mappings from \(\boldsymbol{x}\) to \(\boldsymbol{y}\) that lack feedback connections.

Other models apply these principles to learning stochastic mappings, functions with feedback, and probability distributions over a single vector.

Advantages and Comparison of Deep NNs:

- Linear classifier:
  - Negative: Limited representational power
  - Positive: Simple
- Shallow neural network (exactly one hidden layer):
  - Positive: Universal approximator (can approximate any continuous function on a bounded domain, given enough hidden units)
  - Negative: Sometimes prohibitively wide
- Deep neural network:
  - Positive: Universal approximator as well
  - Positive: Relatively small number of hidden units needed

- Interpretation of Neural Networks:

It is best to think of feedforward networks as function approximation machines that are designed to achieve statistical generalization, occasionally drawing some insights from what we know about the brain, rather than as models of brain function.

Gradient-Based Learning

- Stochastic Gradient Descent and FNNs:

Stochastic gradient descent applied to nonconvex loss functions has no convergence guarantees and is sensitive to the values of the initial parameters.

Thus, for FNNs (since they have nonconvex loss functions):

- Initialize all weights to small random values.

- The biases may be initialized to zero or to small positive values.
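A sketch of this initialization recipe for one fully connected layer. The Gaussian weight scale of 0.01 and the bias value of 0.1 are illustrative assumptions, not prescribed values, and `init_layer` is a hypothetical helper. Drawing the weights at random also gives different hidden units different starting points:

```python
import random

def init_layer(n_in, n_out, weight_scale=0.01, bias_value=0.0, seed=None):
    """Small random weights and constant (zero or small positive) biases.

    weight_scale and bias_value are illustrative defaults, not prescribed values.
    """
    rng = random.Random(seed)
    W = [[rng.gauss(0.0, weight_scale) for _ in range(n_out)] for _ in range(n_in)]
    b = [bias_value] * n_out
    return W, b

W, b = init_layer(4, 3, seed=0)                              # zero biases
W_pos, b_pos = init_layer(4, 3, bias_value=0.1, seed=0)      # small positive biases
```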

- Learning Conditional Distributions with Maximum Likelihood:

\[J(\boldsymbol{\theta})=-\mathbb{E}_{\mathbf{x}, \mathbf{y} \sim \hat{p}_{\text {data }}} \log p_{\text {model }}(\boldsymbol{y} | \boldsymbol{x}) \tag{6.12}\]

When training using maximum likelihood:

The cost function is simply the negative log-likelihood, or equivalently, the cross-entropy between the training data and the model distribution.

- The specific form of the cost function changes from model to model, depending on the specific form of \(\log p_{\text {model}}\).

- The expansion of the above equation typically yields some terms that do not depend on the model parameters and may be discarded.
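As a small numeric illustration of eq. 6.12, the sketch below assumes a hypothetical model whose output for each example is a categorical distribution \(p_{\text{model}}(y \mid \boldsymbol{x})\), given as a list of class probabilities. It estimates \(J(\boldsymbol{\theta})\) by averaging \(-\log p_{\text{model}}(y \mid \boldsymbol{x})\) over a toy training set, which plays the role of \(\hat{p}_{\text{data}}\):

```python
import math

def nll_cost(predicted_probs, labels):
    """Empirical estimate of J(theta) = -E_{x,y ~ p_hat_data} log p_model(y | x).

    predicted_probs[i] is the model's distribution over classes for example i;
    labels[i] is the observed class index y for that example.
    """
    total = 0.0
    for probs, y in zip(predicted_probs, labels):
        total += -math.log(probs[y])
    return total / len(labels)

# Toy outputs of a hypothetical 3-class model on two training examples.
probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
labels = [0, 1]
J = nll_cost(probs, labels)
```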

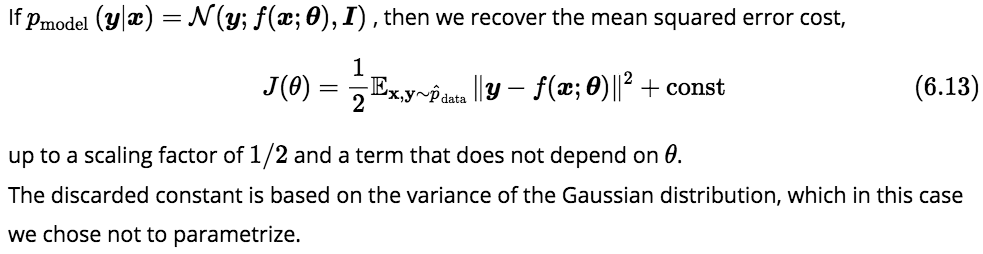

Maximum Likelihood and MSE:

The equivalence between maximum likelihood estimation with a Gaussian output distribution and minimization of mean squared error holds not just for a linear model but regardless of the \(f(\boldsymbol{x} ; \boldsymbol{\theta})\) used to predict the mean of the Gaussian.

If \(p_{\text {model }}(\boldsymbol{y} | \boldsymbol{x})=\mathcal{N}(\boldsymbol{y} ; f(\boldsymbol{x} ; \boldsymbol{\theta}), \boldsymbol{I})\), then we recover the mean squared error cost,

$$J(\boldsymbol{\theta})=\frac{1}{2} \mathbb{E}_{\mathbf{x}, \mathbf{y} \sim \hat{p}_{\text {data }}}\|\boldsymbol{y}-f(\boldsymbol{x} ; \boldsymbol{\theta})\|^{2}+\mathrm{const} \tag{6.13}$$

up to a scaling factor of \(1/2\) and a term that does not depend on \(\boldsymbol{\theta}\). The discarded constant is based on the variance of the Gaussian distribution, which in this case we chose not to parametrize.
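A quick scalar check of this equivalence, assuming unit variance as in the text: the per-example Gaussian negative log-likelihood and half the squared error differ only by the constant \(\tfrac{1}{2}\log 2\pi\), so they share the same minimizer in \(\boldsymbol{\theta}\). The prediction/target pairs are made up:

```python
import math

def gaussian_nll(y, mean):
    """-log N(y; mean, 1) for a scalar y with unit (fixed) variance."""
    return 0.5 * math.log(2 * math.pi) + 0.5 * (y - mean) ** 2

def half_squared_error(y, mean):
    return 0.5 * (y - mean) ** 2

# The difference is the same constant, 0.5*log(2*pi), whatever the prediction.
pairs = [(1.0, 0.0), (2.5, 2.0), (-3.0, 4.0)]
diffs = [gaussian_nll(y, m) - half_squared_error(y, m) for y, m in pairs]
```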

Why derive the cost function from Maximum Likelihood?

It removes the burden of designing cost functions for each model.

Specifying a model \(p(\boldsymbol{y} | \boldsymbol{x})\) automatically determines a cost function \(-\log p(\boldsymbol{y} | \boldsymbol{x})\).

Cost Function Design - Desirable Properties:

- The gradient of the cost function must be large and predictable enough to serve as a good guide.

Functions that saturate (become very flat) undermine this objective because they make the gradient become very small. In many cases this happens because the activation functions used to produce the output of the hidden units or the output units saturate.

The negative log-likelihood helps to avoid this problem for many models. Several output units involve an exp function that can saturate when its argument is very negative. The log function in the negative log-likelihood cost function undoes the exp of some output units.
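To see why the log matters, consider a sigmoid output \(\sigma(z)=1/(1+e^{-z})\) for a target \(y=1\) when the pre-activation \(z\) is very negative, i.e. the model is confidently wrong. This sketch (an illustration chosen here, not taken from the text) compares the gradient with respect to \(z\) of the squared error \(\tfrac{1}{2}(\sigma(z)-1)^2\) with that of the negative log-likelihood \(-\log\sigma(z)\):

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def grad_squared_error(z, y=1.0):
    # d/dz 0.5*(sigmoid(z) - y)^2 = (sigmoid(z) - y) * sigmoid(z) * (1 - sigmoid(z))
    s = sigmoid(z)
    return (s - y) * s * (1.0 - s)

def grad_nll(z):
    # d/dz -log(sigmoid(z)) = sigmoid(z) - 1; the log undoes the exp inside sigmoid
    return sigmoid(z) - 1.0

z = -10.0
g_mse = grad_squared_error(z)  # tiny: the sigmoid has saturated
g_nll = grad_nll(z)            # close to -1: still a strong learning signal
```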

- Learning Conditional Statistics:

Instead of learning a full probability distribution \(p(\boldsymbol{y} | \boldsymbol{x} ; \boldsymbol{\theta})\), we often want to learn just one conditional statistic of \(\boldsymbol{y}\) given \(\boldsymbol{x}\).

For example, we may have a predictor \(f(\boldsymbol{x} ; \boldsymbol{\theta})\) that we wish to employ to predict the mean of \(\boldsymbol{y}\).

The Cost Function as a Functional:

If we use a sufficiently powerful neural network, we can think of the neural network as being able to represent any function \(f\) from a wide class of functions, with this class being limited only by features such as continuity and boundedness rather than by having a specific parametric form. From this point of view, we can view the cost function as being a functional rather than just a function.

A Functional is a mapping from functions to real numbers.

We can thus think of learning as choosing a function rather than merely choosing a set of parameters.

We can design our cost functional to have its minimum occur at some specific function we desire.

For example, we can design the cost functional to have its minimum lie on the function that maps \(\boldsymbol{x}\) to the expected value of \(\boldsymbol{y}\) given \(\boldsymbol{x}\).
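A functional takes a whole function as its argument. As a sketch, assuming a small made-up dataset with \(x \in \{0, 1\}\), the empirical MSE below is a functional `J` that maps any predictor `f` to a real number; the predictor returning the per-\(x\) average of \(y\) scores lower than an arbitrary alternative that ignores \(x\):

```python
# Toy dataset of (x, y) pairs; x takes two values so conditional means are easy to read off.
data = [(0, 1.0), (0, 3.0), (1, 10.0), (1, 14.0)]

def J(f):
    """Empirical MSE functional: maps a function f to a real number."""
    return sum((y - f(x)) ** 2 for x, y in data) / len(data)

def conditional_mean(x):
    ys = [y for xi, y in data if xi == x]
    return sum(ys) / len(ys)

def constant_guess(x):
    return 7.0   # ignores x entirely

cost_mean = J(conditional_mean)
cost_const = J(constant_guess)
```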

Solving an optimization problem with respect to a function requires a mathematical tool called calculus of variations, described in section 19.4.2.

- Important Results in Optimization:

The calculus of variations can be used to derive the following two important results in optimization:

- Solving the optimization problem

$${\displaystyle f^{\ast}=\underset{f}{\arg \min } \: \mathbb{E}_{\mathbf{x}, \mathbf{y} \sim p_{\text {data }}}\|\boldsymbol{y}-f(\boldsymbol{x})\|^{2}} \tag{6.14}$$

yields

$$f^{\ast}(\boldsymbol{x})=\mathbb{E}_{\mathbf{y} \sim p_{\text {data }}(\boldsymbol{y} | \boldsymbol{x})}[\boldsymbol{y}] \tag{6.15}$$

so long as this function lies within the class we optimize over.
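A pointwise sketch of why eq. 6.15 holds. This is a simplification of the calculus-of-variations argument, valid when \(f\) may take any value independently at each \(\boldsymbol{x}\): condition on \(\boldsymbol{x}\), write \(\boldsymbol{c}=f(\boldsymbol{x})\), and set the gradient with respect to \(\boldsymbol{c}\) to zero. Because the inner objective is convex in \(\boldsymbol{c}\), the stationary point is its minimum.

$$
\begin{aligned}
\mathbb{E}_{\mathbf{x}, \mathbf{y} \sim p_{\text {data }}}\|\boldsymbol{y}-f(\boldsymbol{x})\|^{2} &= \mathbb{E}_{\mathbf{x}}\Big[\mathbb{E}_{\mathbf{y} \sim p_{\text {data }}(\boldsymbol{y} | \boldsymbol{x})}\|\boldsymbol{y}-\boldsymbol{c}\|^{2}\Big], \qquad \boldsymbol{c}=f(\boldsymbol{x}),\\
\nabla_{\boldsymbol{c}}\, \mathbb{E}_{\mathbf{y} \sim p_{\text {data }}(\boldsymbol{y} | \boldsymbol{x})}\|\boldsymbol{y}-\boldsymbol{c}\|^{2} &= -2\,\mathbb{E}_{\mathbf{y} \sim p_{\text {data }}(\boldsymbol{y} | \boldsymbol{x})}[\boldsymbol{y}-\boldsymbol{c}] = \boldsymbol{0}
\;\Longrightarrow\; f^{\ast}(\boldsymbol{x})=\boldsymbol{c}=\mathbb{E}_{\mathbf{y} \sim p_{\text {data }}(\boldsymbol{y} | \boldsymbol{x})}[\boldsymbol{y}].
\end{aligned}
$$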

In words: if we could train on infinitely many samples from the true data distribution, minimizing the MSE cost function would give a function that predicts the mean of \(\boldsymbol{y}\) for each value of \(\boldsymbol{x}\).

Different cost functions give different statistics.

- Solving the optimization problem with the mean absolute error (MAE) cost

$$f^{\ast}=\underset{f}{\arg \min } \: \underset{\mathbf{x}, \mathbf{y} \sim p_{\mathrm{data}}}{\mathbb{E}}\|\boldsymbol{y}-f(\boldsymbol{x})\|_ {1} \tag{6.16}$$

yields a function that predicts the median value of \(\boldsymbol{y}\) for each \(\boldsymbol{x}\), as long as such a function may be described by the family of functions we optimize over.
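An empirical sketch of both results, assuming a single fixed \(\boldsymbol{x}\) with a handful of made-up \(y\) samples: a brute-force search for the constant prediction that minimizes each cost recovers the mean for MSE and the median for MAE. The outlier (10.0) pulls the mean but not the median:

```python
# Samples of y for one fixed x (made-up values with an outlier).
ys = [1.0, 2.0, 3.0, 4.0, 10.0]

def mse(c):
    return sum((y - c) ** 2 for y in ys) / len(ys)

def mae(c):
    return sum(abs(y - c) for y in ys) / len(ys)

# Brute-force search over constant predictions c in [0, 12] with step 0.01.
grid = [i / 100 for i in range(0, 1201)]
best_mse = min(grid, key=mse)   # lands on the mean, 4.0
best_mae = min(grid, key=mae)   # lands on the median, 3.0
```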

Drawbacks of MSE and MAE (mean absolute error):

They often lead to poor results when used with gradient-based optimization.

Some output units that saturate produce very small gradients when combined with these cost functions.

This is one reason that the cross-entropy cost function is more popular than MSE or MAE, even when it is not necessary to estimate an entire distribution \(p(\boldsymbol{y} | \boldsymbol{x})\).

Output Units

- Introduction:

The choice of cost function is tightly coupled with the choice of output unit. Most of the time, we simply use the cross-entropy between the data distribution and the model distribution.

Thus, the choice of how to represent the output then determines the form of the cross-entropy function.

Throughout this analysis, we suppose that:

The FNN provides a set of hidden features defined by \(\boldsymbol{h}=f(\boldsymbol{x} ; \boldsymbol{\theta})\).

The role of the output layer, thus, is to provide some additional transformation from the features to complete the task that the network must perform.

- Linear Units:

Linear units are a simple kind of output unit, based on an affine transformation with no nonlinearity.

Mathematically, given features \(\boldsymbol{h}\), a layer of linear output units produces a vector \(\hat{\boldsymbol{y}}=\boldsymbol{W}^{\top} \boldsymbol{h}+\boldsymbol{b}\).

Application: Gaussian output distributions.

Linear output layers are often used to produce the mean of a conditional Gaussian distribution:

$$p(\boldsymbol{y} | \boldsymbol{x})=\mathcal{N}(\boldsymbol{y} ; \hat{\boldsymbol{y}}, \boldsymbol{I}) \tag{6.17}$$

In this case, maximizing the log-likelihood is equivalent to minimizing the MSE.
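A minimal sketch of a linear output layer \(\hat{\boldsymbol{y}}=\boldsymbol{W}^{\top}\boldsymbol{h}+\boldsymbol{b}\) on made-up features and parameters. It also shows that the gradient of \(\tfrac{1}{2}\|\boldsymbol{y}-\hat{\boldsymbol{y}}\|^2\) with respect to \(\hat{\boldsymbol{y}}\) is simply \(\hat{\boldsymbol{y}}-\boldsymbol{y}\), which never flattens out. The helper names are hypothetical:

```python
def linear_output(W, h, b):
    """y_hat = W^T h + b, with W[i][j] mapping feature i to output j."""
    n_out = len(b)
    return [sum(W[i][j] * h[i] for i in range(len(h))) + b[j] for j in range(n_out)]

def mse_grad_wrt_output(y, y_hat):
    """Gradient of 0.5*||y - y_hat||^2 w.r.t. y_hat: y_hat - y (no saturation)."""
    return [mi - yi for yi, mi in zip(y, y_hat)]

# Made-up hidden features and parameters for a 2-output linear layer.
h = [1.0, 2.0, -1.0]
W = [[0.5, 0.0], [0.0, 1.0], [1.0, 1.0]]
b = [0.1, -0.2]
y_hat = linear_output(W, h, b)           # mean of the conditional Gaussian
grad = mse_grad_wrt_output([0.0, 0.0], y_hat)
```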

Learning the Covariance of the Gaussian:

The MLE framework makes it straightforward to:

- Learn the covariance of the Gaussian too

- Make the covariance of the Gaussian be a function of the input

However, the covariance must be constrained to be a positive definite matrix for all inputs.

It is difficult to satisfy such constraints with a linear output layer, so typically other output units are used to parametrize the covariance.

Approaches to modeling the covariance are described shortly, in section 6.2.2.4.

Saturation:

Because linear units do not saturate, they pose little difficulty for gradient-based optimization algorithms and may be used with a wide variety of optimization algorithms.

- Sigmoid Units:

Binary classification is a classification problem over two classes: it requires predicting the value of a binary variable \(y\). Many other tasks also require predicting a binary variable.

The MLE approach is to define a Bernoulli distribution over \(y\) conditioned on \(\boldsymbol{x}\).

A Bernoulli distribution is defined by just a single number.

The neural network needs to predict only \(P(y=1 \vert \boldsymbol{x})\).
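The maximum-likelihood cost for one example therefore depends on that single number \(p=P(y=1 \mid \boldsymbol{x})\). A minimal sketch, assuming the network already outputs a valid probability; ensuring \(p\) lies in \([0,1]\) is the job of the output unit and is not shown. `bernoulli_nll` is a hypothetical helper, and it rejects \(p\) of exactly 0 or 1 to avoid taking \(\log 0\):

```python
import math

def bernoulli_nll(p, y):
    """-log P(y | x) for y in {0, 1}, where p = P(y = 1 | x) is the network output."""
    if not 0.0 < p < 1.0:
        raise ValueError("p must lie strictly between 0 and 1")
    return -(y * math.log(p) + (1 - y) * math.log(1.0 - p))

p = 0.9  # hypothetical network output P(y=1 | x)
loss_if_positive = bernoulli_nll(p, 1)   # -log 0.9: small, the prediction agrees
loss_if_negative = bernoulli_nll(p, 0)   # -log 0.1: large, the prediction disagrees
```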