- Learning (wiki)

- Hebbian Theory (wiki)

- Learning Exercises

- Neuronal Dynamics - From single neurons to networks and models of cognition

- Theoretical Impediments to ML With Seven Sparks from the Causal Revolution (J Pearl Paper!)

- ML Intro (Slides!)

- A Few Useful Things to Know About Machine Learning (Blog!)

- Introduction to Intelligence, Learning, and AI (Blog)

- What’s the difference between learning statistical properties of a dataset and understanding?

- Statistical properties tell you the correlations (associations) between things, which enables you to predict the future. Understanding is the ability to imagine counterfactuals (things that could happen) in order to plan for and reflect on the past, future and present.

- In short, learning statistical properties only lets you passively predict the external world (prediction). Understanding lets you actively interact with it by formulating symbolic plans that represent the world (including yourself) as objects, events and relations (reflection).

- Can we have real understanding if we confine ourselves to language? I think that even for humans, understanding language means knowing what each word refers to in the world (physical or non-physical). Right?

Learning

- Learning:

Learning is the process of acquiring new, or modifying existing, knowledge, behaviors, skills, values, or preferences.


Types of Learning

- Hebbian (Associative) Learning:

Hebbian/Associative Learning is the process by which a person or animal learns an association between two stimuli or events. At the cellular level, simultaneous activation of cells leads to pronounced increases in synaptic strength between those cells.

Hebbian Learning in Artificial Neural Networks:

From the point of view of ANNs, Hebb’s principle can be described as a method of determining how to alter the weights between model neurons.

- The weight between two neurons:

- Increases if the two neurons activate simultaneously,

- Reduces if they activate separately.

- Nodes that tend to be either both positive or both negative at the same time have strong positive weights, while those that tend to be opposite have strong negative weights.

Hebb’s Rule:

The change in the $i$-th synaptic weight $w_{i}$ is equal to a learning rate $\eta$ times the $i$-th input $x_{i}$ times the postsynaptic response $y$:

$$\Delta w_{i}=\eta x_{i} y$$

where in the case of a linear neuron:

$$y=\sum_{j} w_{j} x_{j}$$
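A minimal sketch of one Hebbian update for a linear neuron, following the two formulas above. The function name `hebbian_step`, the starting weights, the input, and the learning rate are illustrative assumptions, not values from the source.

```python
def hebbian_step(w, x, eta=0.1):
    """One Hebbian update for a linear neuron.

    y = sum_j w_j * x_j, then delta w_i = eta * x_i * y.
    """
    # Postsynaptic response of the linear neuron.
    y = sum(wj * xj for wj, xj in zip(w, x))
    # Each weight changes in proportion to its own input times the response.
    new_w = [wi + eta * xi * y for wi, xi in zip(w, x)]
    return new_w, y


# Hypothetical example values: only the first input is active.
w = [0.5, 0.5]
x = [1.0, 0.0]
w, y = hebbian_step(w, x)
print(w, y)
```

Only the weight whose input was active changes. Repeating the update with the same input keeps increasing that weight, since the plain rule has no decay or normalization term.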

Notes:

- It is regarded as the neuronal basis of unsupervised learning.


-

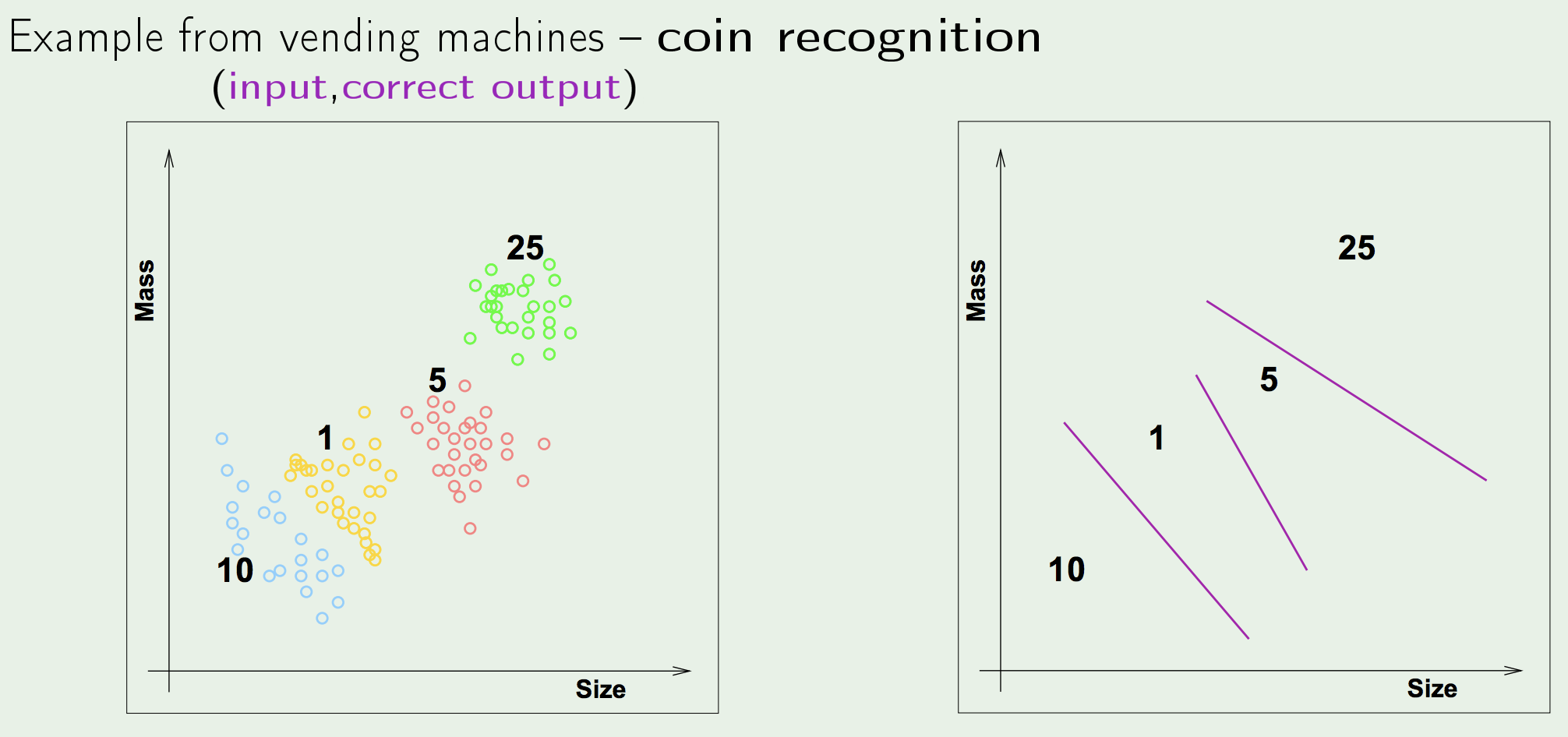

Supervised Learning: Supervised Learning: the task of learning a function that maps an input to an output based on example input-output pairs.

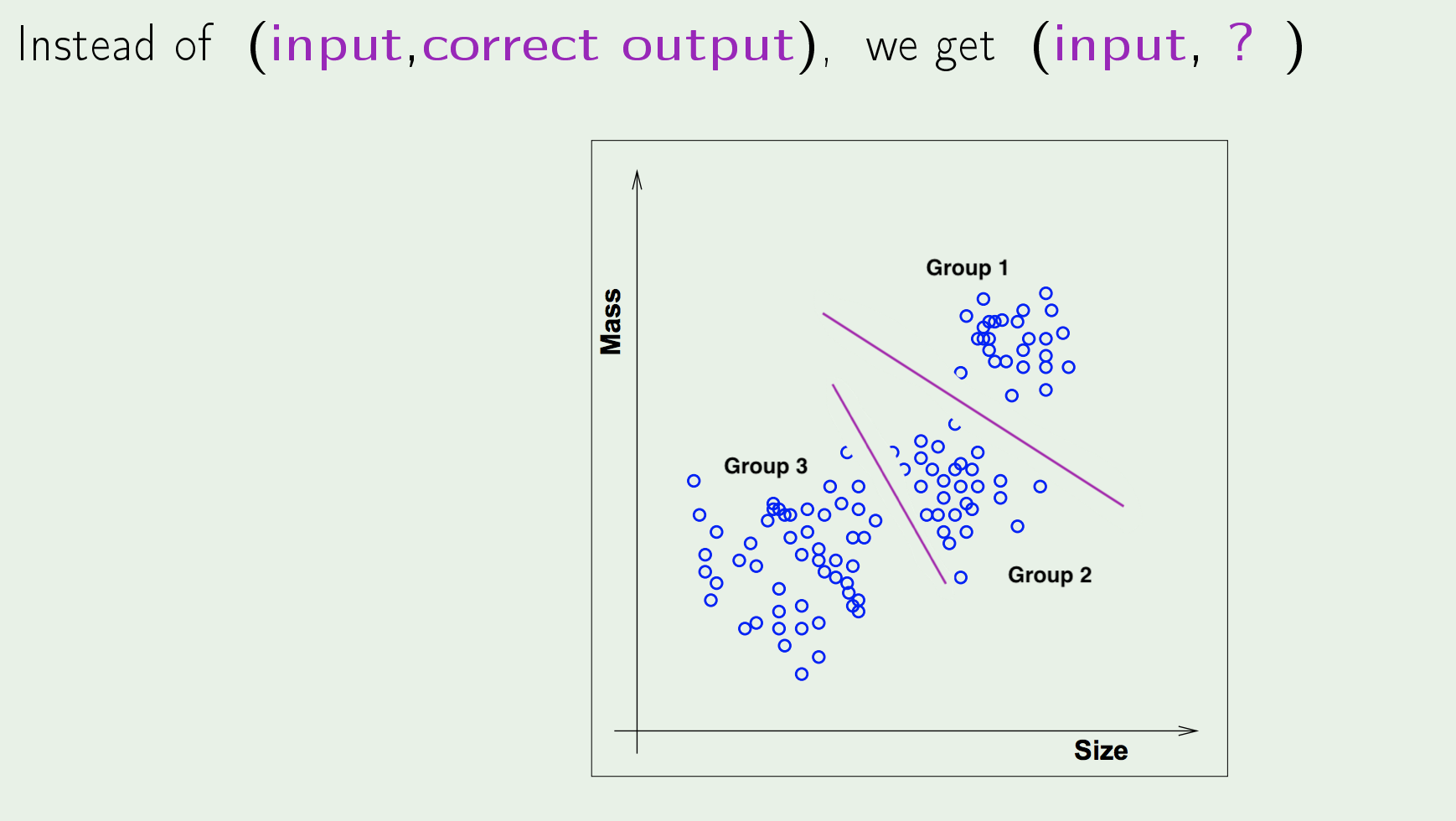
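As a small illustration of learning a function from example input-output pairs, the sketch below fits a straight line by ordinary least squares. The choice of a linear model, the helper name `fit_line`, and the toy data are assumptions made for the example only.

```python
def fit_line(pairs):
    """Fit y ~ a * x + b to (x, y) pairs by ordinary least squares."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    # Slope = covariance(x, y) / variance(x); intercept follows from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    var = sum((x - mean_x) ** 2 for x, _ in pairs)
    a = cov / var
    b = mean_y - a * mean_x
    return a, b


# Hypothetical labeled examples generated from y = 2x + 1.
train = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]
a, b = fit_line(train)


def predict(x):
    """The learned input-to-output mapping."""
    return a * x + b


print(a, b, predict(4.0))
```

The learned `predict` function can be applied to inputs that never appeared in the training pairs, which is the point of the supervised setting.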

- Unsupervised Learning:

Unsupervised Learning: the task of making inferences, by learning a better representation, from some datapoints that do not have any labels associated with them.

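Hebbian Learning is regarded as the neuronal basis of Unsupervised Learning. It is one unsupervised method among those listed here, not a synonym for the whole field.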

- Clustering

- hierarchical clustering

- k-means

- mixture models

- DBSCAN

- Anomaly Detection: Local Outlier Factor

- Neural Networks

- Autoencoders

- Deep Belief Nets

- Hebbian Learning

- Generative Adversarial Networks

- Self-organizing map

- Approaches for learning latent variable models, such as:

- Expectation–maximization algorithm (EM)

- Method of moments

- Blind signal separation techniques

- Principal component analysis

- Independent component analysis

- Non-negative matrix factorization

- Singular value decomposition
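To make one entry from the list above concrete, here is a minimal sketch of k-means on one-dimensional points. It alternates between assigning each point to its nearest center and moving each center to the mean of its points. The function name `kmeans_1d`, the fixed iteration count, the starting centers, and the data are all assumptions for illustration. Practical implementations work in higher dimensions and choose starting centers more carefully.

```python
def kmeans_1d(points, centers, iters=10):
    """Plain k-means on 1-D points with the given starting centers."""
    clusters = [[] for _ in centers]
    for _ in range(iters):
        # Assignment step: each point joins the cluster of its nearest center.
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda k: abs(p - centers[k]))
            clusters[nearest].append(p)
        # Update step: each center moves to the mean of its cluster
        # (an empty cluster keeps its previous center).
        centers = [
            sum(c) / len(c) if c else centers[k]
            for k, c in enumerate(clusters)
        ]
    return centers, clusters


# Hypothetical unlabeled data with two visible groups.
points = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
centers, clusters = kmeans_1d(points, centers=[0.0, 6.0])
print(centers, clusters)
```

No labels are used anywhere: the grouping comes only from the distances between the points.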


-

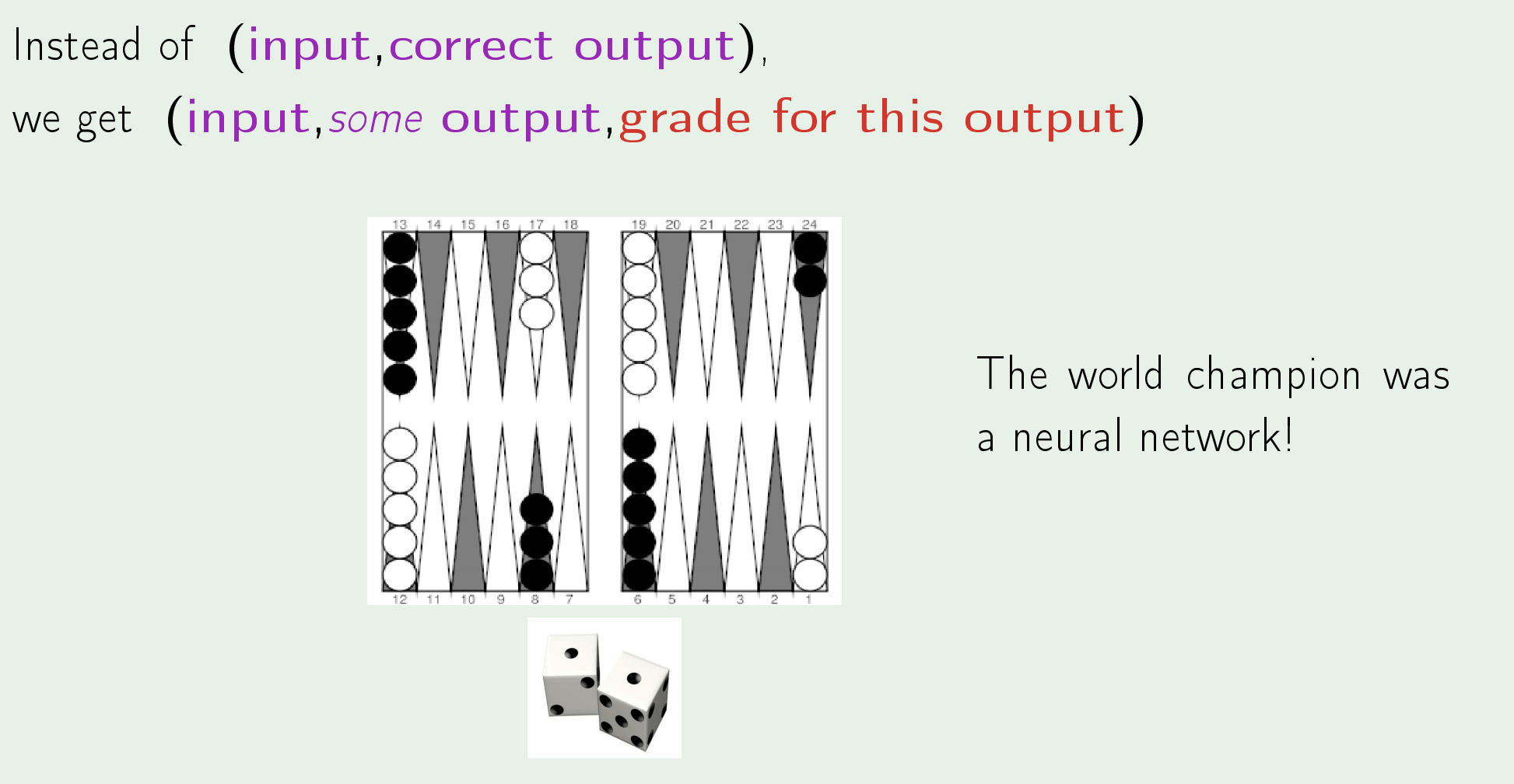

Reinforcement Learning:

Reinforcement Leaning: the task of learning how software agents ought to take actions in an environment so as to maximize some notion of cumulative reward.
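A minimal sketch of the idea in its simplest form, a multi-armed bandit with no state: the agent repeatedly picks an action, observes a reward, and updates its estimate of each action's value. The epsilon-greedy strategy, the function name `run_bandit`, the fixed rewards, and the parameter values are assumptions chosen for the example. Real environments usually have states and noisy rewards.

```python
import random


def run_bandit(rewards, steps=200, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a bandit whose arms pay fixed rewards."""
    rng = random.Random(seed)
    n_arms = len(rewards)
    q = [0.0] * n_arms      # estimated value of each arm
    counts = [0] * n_arms   # how often each arm was pulled
    total = 0.0             # cumulative reward collected
    for t in range(steps):
        if t < n_arms:
            arm = t                                    # try every arm once
        elif rng.random() < epsilon:
            arm = rng.randrange(n_arms)                # explore
        else:
            arm = max(range(n_arms), key=lambda a: q[a])  # exploit
        r = rewards[arm]
        counts[arm] += 1
        # Incremental sample-average update of the value estimate.
        q[arm] += (r - q[arm]) / counts[arm]
        total += r
    return q, total


# Hypothetical environment: arm 0 pays 0, arm 1 pays 1.
q, total = run_bandit(rewards=[0.0, 1.0])
print(q, total)
```

The agent is never told which arm is better. It finds out from the rewards it collects, and the greedy steps then favor the arm with the higher estimate.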

- Semi-supervised Learning:

- Zero-Shot Learning:

- Transfer Learning:

- Multitask Learning:

- Domain Adaptation:

Theories of Learning

Reasoning and Inference

- Logical Reasoning:

- Inductive Learning is the process of using observations to draw conclusions

- It is a method of reasoning in which the premises are viewed as supplying some evidence for the truth of the conclusion.

- It goes from specific to general (“bottom-up logic”).

- The truth of the conclusion of an inductive argument may be probable, based upon the evidence given.

- Deductive Learning is the process of using general premises (accepted conclusions) to derive specific observations.

- It is the process of reasoning from one or more statements (premises) to reach a logically certain conclusion.

- It goes from general to specific (“top-down logic”).

- The conclusions reached (“observations”) are necessarily true, provided the premises are true.

- Abductive Learning is a form of inductive learning where we use observations to draw the simplest and most likely conclusions.

It can be understood as “inference to the best explanation”.

It is used by Sherlock Holmes.

In Mathematical Modeling (ML):

In the context of Mathematical Modeling the three kinds of reasoning can be described as follows:

- The construction/creation of the structure of the model is abduction.

- Assigning values (or probability distributions) to the parameters of the model is induction.

- Executing/running the model is deduction.
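The three roles above can be sketched on a toy model. The proportional model structure, the observations, and the names `model` and `slope` are hypothetical choices made only to label each step.

```python
# Abduction: propose a model structure that could explain the data.
# Assumption for this example: the output is proportional to the input.
def model(x, slope):
    return slope * x


# Induction: assign a value to the model's parameter from observations
# (least-squares estimate for a line through the origin).
observations = [(1.0, 2.0), (2.0, 4.0), (3.0, 6.0)]
slope = sum(x * y for x, y in observations) / sum(x * x for x, _ in observations)

# Deduction: run the fitted model to derive what follows for a new input.
prediction = model(4.0, slope)
print(slope, prediction)
```

Only the deduction step is certain given its inputs. The structure and the parameter value remain open to revision if new observations contradict them.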


- Inference:

Inference has two definitions:

- A conclusion reached on the basis of evidence and reasoning.

- The process of reaching such a conclusion.

- Transductive Inference/Learning (Transduction):

The goal of transductive learning is to “simply” add labels to the unlabeled data by exploiting labeled samples.
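A minimal sketch of this setting: the output is a label for each of the given unlabeled points and nothing else. Using the nearest labeled point as the labeling rule is an assumption made for simplicity, as are the function name `transduce` and the data. Dedicated transductive methods typically also exploit the structure of the unlabeled points themselves.

```python
def transduce(labeled, unlabeled):
    """Label each given unlabeled point with the label of its nearest labeled point."""
    labels = {}
    for u in unlabeled:
        # Find the labeled sample closest to this particular point.
        _, nearest_label = min(labeled, key=lambda pair: abs(pair[0] - u))
        labels[u] = nearest_label
    return labels


# Hypothetical 1-D data: (position, label) pairs plus the points to be labeled.
labeled = [(0.0, "a"), (1.0, "a"), (9.0, "b"), (10.0, "b")]
unlabeled = [0.5, 2.0, 8.0]
labels = transduce(labeled, unlabeled)
print(labels)
```

The result covers only the points that were handed in. The sketch deliberately returns no reusable mapping for inputs outside that set.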

By contrast, the goal of inductive learning is to infer the correct mapping from \(X\) to \(Y\).

Transductive VS Semi-supervised Learning:

Transductive Learning is only concerned with the unlabeled data.

Transductive Learning:

Inductive Learning:

Notes: