Explanation

KMeans Full Treatment

KMeans and EM-Algorithms

K-Means (code, my github)

K-Means

K-Means:

It is a method for cluster analysis. It aims to partition \(n\) observations into \(k\) clusters in which each observation belongs to the cluster with the nearest mean. It results in a partitioning of the data space into Voronoi Cells.

IDEA:

- Minimizes the aggregate Intra-Cluster distance

- Equivalent to minimizing the Variance

- Thus, it finds k-clusters with minimum aggregate Variance.

Formal Description:

Given a set of observations \(\left(\mathbf{x}_{1}, \mathbf{x} _{2}, \ldots, \mathbf{x}_{n}\right)\), \(\mathbf{x}_ i \in \mathbb{R}^d\), the algorithm aims to partition the \(n\) observations into \(k\) sets \(\mathbf{S}=\left\{S_{1}, S_{2}, \ldots, S_{k}\right\}\) so as to minimize the intra-cluster Sum-of-Squares (i.e. variance).

The Objective:

$$\underset{\mathbf{S}}{\arg \min } \sum_{i=1}^{k} \sum_{\mathbf{x} \in S_{i}}\left\|\mathbf{x}-\boldsymbol{\mu}_{i}\right\|^{2}=\underset{\mathbf{S}}{\arg \min } \sum_{i=1}^{k}\left|S_{i}\right| \operatorname{Var} S_{i}$$

where \(\boldsymbol{\mu}_i\) is the mean of points in \(S_i\).

Algorithm:

- Choose two random points, call them “Centroids”

- Assign the closest \(N/2\) points (Euclidean-wise) to each of the Centroids

- Compute the mean of each “group”/class of points

- Re-Assign the centroids to the newly computed Means ↑

- REPEAT!

The “assignment” step is referred to as the “expectation step”, while the “update step” is a maximization step, making this algorithm a variant of the generalized expectation-maximization algorithm.

Complexity:

The original formulation of the problem is NP-Hard; however, EM algorithms (specifically, Coordinate-Descent) can be used as efficient heuristic algorithms that converge quickly to good local minima.

Lloyds algorithm (and variants) have \({\displaystyle \mathcal{O}(nkdi)}\) runtime.

Convergence:

Guaranteed to converge after a finite number of iterations

-

Proof:

The Algorithm Minimizes a monotonically decreasing, Non-Negative Energy function on a finite Domain:

By Monotone Convergence Theorem the objective Value Converges.

Optimality:

- Locally optimal: due to convergence property

- Non-Globally optimal:

- The objective function is non-convex

- Moreover, coordinate Descent doesn’t converge to global minimum on non-convex functions.

Objective Function:

$$J(S, \mu)= \sum_{i=1}^{k} \sum_{\mathbf{x} \in S_{i}} \| \mathbf{x} -\mu_i \|^{2}$$

Optimization Objective:

$$\min _{\mu} \min _{S} \sum_{i=1}^{k} \sum_{\mathbf{x} \in S_{i}}\left\|\mathbf{x} -\mu_{i}\right\|^{2}$$

Coordinate Descent:

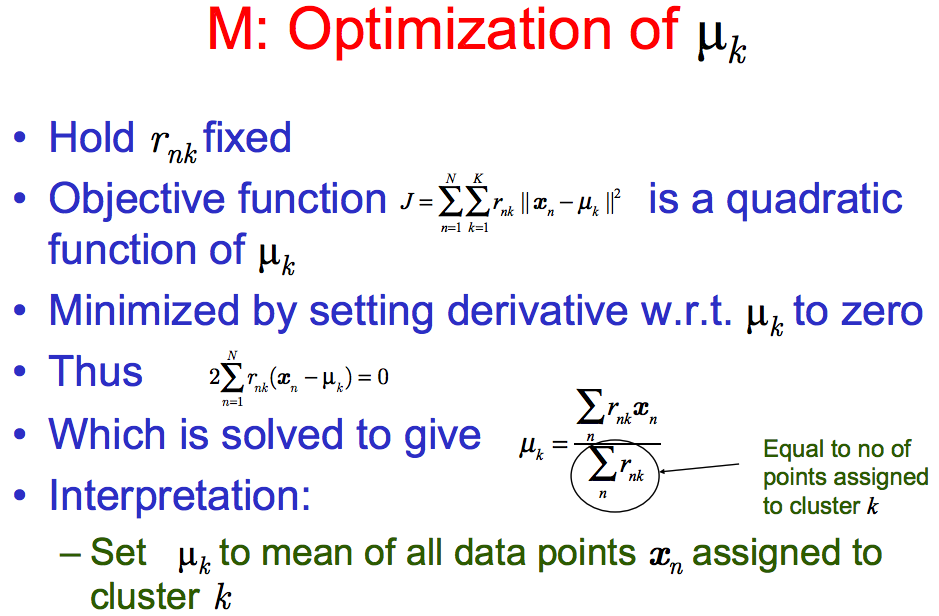

- Fix \(S = \hat{S}\), optimize \(\mu\):

$$\begin{aligned} & \min _{\mu} \sum_{i=1}^{k} \sum_{\mathbf{x} \in \hat{S}_{i}}\left\|\mu_{i}-x_{j}\right\|^{2}\\ =& \sum_{i=1}^{k} \min _{\mu_i} \sum_{\mathbf{x} \in \hat{S}_{i}}\left\|\mathbf{x} - \mu_{i}\right\|^{2} \end{aligned}$$

- MLE:

$$\min _{\mu_i} \sum_{\mathbf{x} \in \hat{S}_{i}}\left\|\mathbf{x} - \mu_{i}\right\|^{2}$$

\(\implies\)

$${\displaystyle \hat{\mu_i} = \dfrac{\sum_{\mathbf{x} \in \hat{S}_ {i}} \mathbf{x}}{\vert\hat{S}_ i\vert}}$$

- MLE:

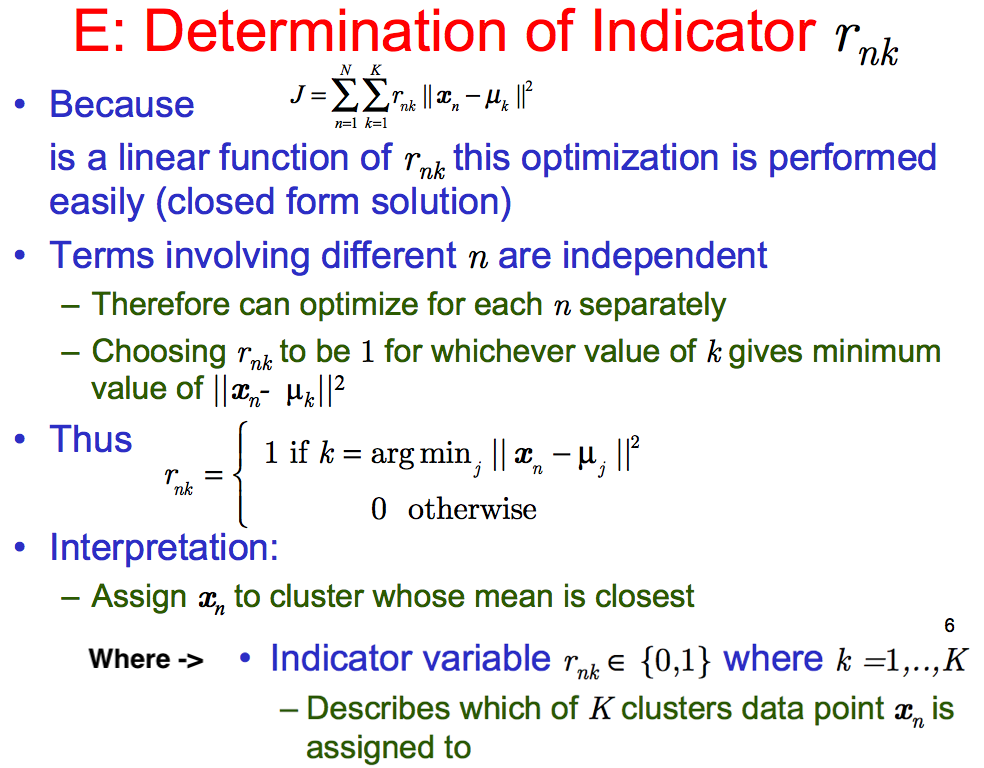

- Fix \(\mu_i = \hat{\mu_i}, \forall i\), optimize \(S\)1:

$$\arg \min _{S} \sum_{i=1}^{k} \sum_{\mathbf{x} \in S_{i}}\left\|\mathbf{x} - \hat{\mu_{i}}\right\|^{2}$$

\(\implies\)

$$S_{i}^{(t)}=\left\{x_{p} :\left\|x_{p}-m_{i}^{(t)}\right\|^{2} \leq\left\|x_{p}-m_{j}^{(t)}\right\|^{2} \forall j, 1 \leq j \leq k\right\}$$

- MLE:

- MLE:

- Choosing the number of clusters \(k\):

- Elbow Method

- Silhouette function

- BIC: Bayesian Information Criterion (A much better method)

-

The optimization here is an \(\arg \min\) not a \(\min\), since we are optimizing for \(i\) over \(S_i\). ↩