Gradient-Based Optimization

- Define Gradient Methods:

Gradient Methods are algorithms for solving (optimization) minimization problems of the form:$$\min_{x \in \mathbb{R}^{n}} f(x)$$

by doing local search (~ hill-climbing) with the search directions defined by the gradient of the function at the current point.

- Give examples of Gradient-Based Algorithms:

- Gradient Descent: minimizes arbitrary differentiable functions

- Conjugate Gradient: minimizes sparse linear systems w/ symmetric & PD matrices

- Coordinate Descent: minimizes functions of two variables

- What is Gradient Descent:

GD is a first order, iterative algorithm to minimize an objective function \(J(\theta)\) parametrized by a models parameters \(\theta \in \mathbb{R}^d\) by updating the parameters in the opposite direction of the gradient of the objective function \(\nabla_{\theta} J(\theta)\) w.r.t. to the parameters. -

Explain it intuitively:

1) Local Search from a starting location on a hill

2) Feel around how a small movement/step around your location would change the height of the surrounding hill (is the ground higher or lower)

3) Make the movement/step consistent as a small fixed step along some direction

4) Measure the steepness of the hill at the new location in the chosen direction

5) Do so by Approximating the steepness with some local information

6) Measure the change in steepness from your current point to the new point

6) Find the direction that decreases the steepness the most

\(\iff\)1) Local Search from an initial point \(x_0\) on a function

2) Explore the value of the function at different small nudges around \(x_0\)

3) Make the nudges consistent as a small fixed step \(\delta\) along a normalized direction \(\hat{\boldsymbol{u}}\)

4) Evaluate the function at the new location \(x_0 + \delta \hat{\boldsymbol{u}}\)

5) Do so by Approximating the function w/ first-order information (Taylor expansion)

6) Measure the change in value of the objective \(\Delta f\), from current point to the new point

7) Find the direction \(\hat{\boldsymbol{u}}\) that minimizes the function the most - Give its derivation:

1) Start at \(\boldsymbol{x} = x_0\)

2) We would like to know how would a small change in \(\boldsymbol{x}\), namely \(\Delta \boldsymbol{x}\) would affect the value of the function \(f(x)\). This will allow us to evaluate the function:$$f(\mathbf{x}+\Delta \mathbf{x})$$

to find the direction that makes \(f\) decrease the fastest

3) Let’s set up \(\Delta \boldsymbol{x}\), the change in \(\boldsymbol{x}\), as a fixed step \(\delta\) along some normalized direction \(\hat{\boldsymbol{u}}\):$$\Delta \boldsymbol{x} = \delta \hat{\boldsymbol{u}}$$

4) Evaluate the function at the new location:

$$f(\mathbf{x}+\Delta \mathbf{x}) = f(\mathbf{x}+\delta \hat{\boldsymbol{u}})$$

5) Using the first-order approximation:

$$\begin{aligned} f(\mathbf{x}+\delta \hat{\boldsymbol{u}}) &\approx f({\boldsymbol{x}}_0) + \left(({\boldsymbol{x}}_0 - \delta \hat{\boldsymbol{u}}) - {\boldsymbol{x}}_0 \right)^T \nabla_{\boldsymbol{x}} f({\boldsymbol{x}}_0) \\ &\approx f(x_0) + \delta \hat{\boldsymbol{u}}^T \nabla_x f(x_0) \end{aligned}$$

6) The change in \(f\) is:

$$\begin{aligned} \Delta f &= f(\boldsymbol{x}_ 0 + \Delta \boldsymbol{x}) - f(\boldsymbol{x}_ 0) \\ &= f(\boldsymbol{x}_ 0 + \delta \hat{\boldsymbol{u}}) - f(\boldsymbol{x}_ 0)\\ &= \delta \nabla_x f(\boldsymbol{x}_ 0)^T\hat{\boldsymbol{u}} + \mathcal{O}(\delta^2) \\ &= \delta \nabla_x f(\boldsymbol{x}_ 0)^T\hat{\boldsymbol{u}} \\ &\geq -\delta\|\nabla f(\boldsymbol{x}_ 0)\|_ 2 \end{aligned}$$

where

$$\nabla_x f(\boldsymbol{x}_ 0)^T\hat{\boldsymbol{u}} \in \left[-\|\nabla f(\boldsymbol{x}_ 0)\|_ 2, \|\nabla f(\boldsymbol{x}_ 0)\|_ 2\right]$$

since \(\hat{\boldsymbol{u}}\) is a unit vector; either aligned with \(\nabla_x f(\boldsymbol{x}_ 0)\) or in the opposite direction; it contributes nothing to the magnitude of the dot product.

7) So, the \(\hat{\boldsymbol{u}}\) that changes the above inequality to equality, achieves the largest negative value (moves the most downhill). That vector \(\hat{\boldsymbol{u}}\) is, then, the one in the negative direction of \(\nabla_x f(\boldsymbol{x}_ 0)\); the opposite direction of the gradient.

\(\implies\)$$\hat{\boldsymbol{u}} = - \dfrac{\nabla_x f(x)}{\|\nabla_x f(x)\|_ 2}$$

Finally, The method of steepest/gradient descent proposes a new point to decrease the value of \(f\):

$$\boldsymbol{x}^{\prime}=\boldsymbol{x}-\epsilon \nabla_{\boldsymbol{x}} f(\boldsymbol{x})$$

where \(\epsilon\) is the learning rate, defined as:

$$\epsilon = \dfrac{\delta}{\left\|\nabla_{x} f(x)\right\|_ {2}}$$

- What is the learning rate?

Learning rate is a hyper-parameter that controls how much we are adjusting the weights of our network with respect the loss gradient; defined as:$$\epsilon = \dfrac{\delta}{\left\|\nabla_{x} f(x)\right\|_ {2}}$$

- Where does it come from?

The learning rate comes from a modification of the step-size in the GD derivation.

We get the learning rate by employing a simple idea:

We have a fixed step-size \(\delta\) that dictated how much we should be moving in the direction of steepest descent. However, we would like to keep the step-size from being too small or overshooting. The idea is to make the step-size proportional to the magnitude of the gradient (i.e. some constant multiplied by the magnitude of the gradient):$$\delta = \epsilon \left\|\nabla_{x} f(x)\right\|_ {2}$$

If we do so, we get a nice cancellation as follows:

$$\begin{aligned}\Delta \boldsymbol{x} &= \delta \hat{\boldsymbol{u}} \\ &= -\delta \dfrac{\nabla_x f(x)}{\|\nabla_x f(x)\|_ 2} \\ &= - \epsilon \left\|\nabla_{x} f(x)\right\|_ {2} \dfrac{\nabla_x f(x)}{\|\nabla_x f(x)\|_ 2} \\ &= - \dfrac{\epsilon \left\|\nabla_{x} f(x)\right\|_ {2}}{\|\nabla_x f(x)\|_ 2} \nabla_x f(x) \\ &= - \epsilon \nabla_x f(x) \end{aligned}$$

where now we have a fixed learning rate instead of a fixed step-size.

- How does it relate to the step-size?

[Answer above] - We go from having a fixed step-size to [blank]:

a fixed learning rate.

- What is its range?

\([0.0001, 0.4]\) - How do we choose the learning rate?

- Choose a starting lr from the range \([0.0001, 0.4]\) and adjust using cross-validation (lr is a HP).

- Do

- Set it to a small constant

- Smooth Functions (non-NN):

- Line Search: evaluate \(f\left(\boldsymbol{x}-\epsilon \nabla_{\boldsymbol{x}} f(\boldsymbol{x})\right)\) for several values of \(\epsilon\) and choose the one that results in the smallest objective value.

E.g. Secant Method, Newton-Raphson Method (may need Hessian, hard for large dims) - Trust Region Method

- Line Search: evaluate \(f\left(\boldsymbol{x}-\epsilon \nabla_{\boldsymbol{x}} f(\boldsymbol{x})\right)\) for several values of \(\epsilon\) and choose the one that results in the smallest objective value.

- Non-Smooth Functions (non-NN):

- Direct Line Search

E.g. golden section search

- Direct Line Search

- Grid Search: is simply an exhaustive searching through a manually specified subset of the hyperparameter space of a learning algorithm. It is guided by some performance metric, typically measured by cross-validation on the training set or evaluation on a held-out validation set.

- Population-Based Training (PBT): is an elegant implementation of using a genetic algorithm for hyper-parameter choice.

- Bayesian Optimization: is a global optimization method for noisy black-box functions.

- Use larger lr and use a learning rate schedule

- Compare Line Search vs Trust Region:

Trust-region methods are in some sense dual to line-search methods: trust-region methods first choose a step size (the size of the trust region) and then a step direction, while line-search methods first choose a step direction and then a step size. - Learning Rate Schedule:

- Define:

A learning rate schedule changes the learning rate during learning and is most often changed between epochs/iterations. This is mainly done with two parameters: decay and momentum. - List Types:

- Time-based learning schedules alter the learning rate depending on the learning rate of the previous time iteration. Factoring in the decay the mathematical formula for the learning rate is:

$${\displaystyle \eta_{n+1}={\frac {\eta_{n}}{1+dn}}}$$

where \(\eta\) is the learning rate, \(d\) is a decay parameter and \(n\) is the iteration step.

- Step-based learning schedules changes the learning rate according to some pre defined steps:

$${\displaystyle \eta_{n}=\eta_{0}d^{floor({\frac {1+n}{r}})}}$$

where \({\displaystyle \eta_{n}}\) is the learning rate at iteration \(n\), \(\eta_{0}\) is the initial learning rate, \(d\) is how much the learning rate should change at each drop (0.5 corresponds to a halving) and \(r\) corresponds to the droprate, or how often the rate should be dropped (\(10\) corresponds to a drop every \(10\) iterations). The floor function here drops the value of its input to \(0\) for all values smaller than \(1\).

- Exponential learning schedules are similar to step-based but instead of steps a decreasing exponential function is used. The mathematical formula for factoring in the decay is:

$$ {\displaystyle \eta_{n}=\eta_{0}e^{-dn}}$$

where \(d\) is a decay parameter.

- Time-based learning schedules alter the learning rate depending on the learning rate of the previous time iteration. Factoring in the decay the mathematical formula for the learning rate is:

- Define:

- Where does it come from?

-

Describe the convergence of the algorithm:

Gradient Descent converges when every element of the gradient is zero, or very close to zero within some threshold.With certain assumptions on \(f\) (convex, \(\nabla f\) lipschitz) and particular choices of \(\epsilon\) (chosen via line-search etc.), convergence to a local minimum can be guaranteed.

Moreover, if \(f\) is convex, all local minima are global minimia, so convergence is to the global minimum. - How does GD relate to Euler?

Gradient descent can be viewed as applying Euler’s method for solving ordinary differential equations \({\displaystyle x'(t)=-\nabla f(x(t))}\) to a gradient flow. - List the variants of GD:

- Batch Gradient Descent

- Stochastic GD

- Mini-Batch GD

- How do they differ? Why?:

There are three variants of gradient descent, which differ in the amount of data used to compute the gradient. The amount of data imposes a trade-off between the accuracy of the parameter updates and the time it takes to perform the update.

- BGD:

Batch Gradient Descent AKA Vanilla Gradient Descent, computes the gradient of the objective wrt. the parameters \(\theta\) for the entire dataset:$$\theta=\theta-\epsilon \cdot \nabla_{\theta} J(\theta)$$

- Properties:

- Since we need to compute the gradient for the entire dataset for each update, this approach can be very slow and is intractable for datasets that can’t fit in memory.

- Moreover, batch-GD doesn’t allow for an online learning approach.

- Properties:

- SGD:

SGD performs a parameter update for each data-point:$$\theta=\theta-\epsilon \cdot \nabla_{\theta} J\left(\theta ; x^{(i)} ; y^{(i)}\right)$$

- Properties:

- SGD exhibits a lot of fluctuation and has a lot of variance in the parameter updates. However, although, SGD can potentially move in the wrong direction due to limited information; in-practice, if we slowly decrease the learning-rate, it shows the same convergence behavior as batch gradient descent, almost certainly converging to a local or the global minimum for non-convex and convex optimization respectively.

- Moreover, the fluctuations it exhibits enables it to jump to new and potentially better local minima.

- How should we handle the lr in this case? Why?

We should reduce the lr after every epoch:

This is due to the fact that the random sampling of batches acts as a source of noise which might make SGD keep oscillating around the minima without actually reaching it. - What conditions guarantee convergence of SGD?

The following conditions guarantee convergence under convexity conditions for SGD:$$\begin{array}{l}{\sum_{k=1}^{\infty} \epsilon_{k}=\infty, \quad \text { and }} \\ {\sum_{k=1}^{\infty} \epsilon_{k}^{2}<\infty}\end{array}$$

- Properties:

- M-BGD:

A hybrid approach that perform updates for a, pre-specified, mini-batch of \(n\) training examples:$$\theta=\theta-\epsilon \cdot \nabla_{\theta} J\left(\theta ; x^{(i : i+n)} ; y^{(i : i+n)}\right)$$

- What advantages does it have?

- Reduce the variance of the parameter updates \(\rightarrow\) more stable convergence

- Makes use of matrix-vector highly optimized libraries

- What advantages does it have?

- Explain the different kinds of gradient-descent optimization procedures:

- Batch Gradient Descent AKA Vanilla Gradient Descent, computes the gradient of the objective wrt. the parameters \(\theta\) for the entire dataset:

$$\theta=\theta-\epsilon \cdot \nabla_{\theta} J(\theta)$$

- SGD performs a parameter update for each data-point:

$$\theta=\theta-\epsilon \cdot \nabla_{\theta} J\left(\theta ; x^{(i)} ; y^{(i)}\right)$$

- Mini-batch Gradient Descent a hybrid approach that perform updates for a, pre-specified, mini-batch of \(n\) training examples:

$$\theta=\theta-\epsilon \cdot \nabla_{\theta} J\left(\theta ; x^{(i : i+n)} ; y^{(i : i+n)}\right)$$

- Batch Gradient Descent AKA Vanilla Gradient Descent, computes the gradient of the objective wrt. the parameters \(\theta\) for the entire dataset:

- State the difference between SGD and GD?

Gradient Descent’s cost-function iterates over ALL training samples.

Stochastic Gradient Descent’s cost-function only accounts for ONE training sample, chosen at random. - When would you use GD over SDG, and vice-versa?

GD theoretically minimizes the error function better than SGD. However, SGD converges much faster once the dataset becomes large.

That means GD is preferable for small datasets while SGD is preferable for larger ones.

-

What is the problem of vanilla approaches to GD?

All the variants described above, however, do not guarantee “good” convergence due to some challenges.- List the challenges that account for the problem above:

- Choosing a proper learning rate is usually difficult:

A learning rate that is too small leads to painfully slow convergence, while a learning rate that is too large can hinder convergence and cause the loss function to fluctuate around the minimum or even to diverge. - Inability to adapt to the specific characteristics of the dataset:

Learning rate schedules try to adjust the learning rate during training by e.g. annealing, i.e. reducing the learning rate according to a pre-defined schedule or when the change in objective between epochs falls below a threshold. These schedules and thresholds, however, have to be defined in advance and are thus unable to adapt to a dataset’s characteristics. - The learning rate is fixed for all parameter updates:

If our data is sparse and our features have very different frequencies, we might not want to update all of them to the same extent, but perform a larger update for rarely occurring features. - Another key challenge of minimizing highly non-convex error functions common for neural networks is avoiding getting trapped in their numerous suboptimal local minima. Dauphin et al. argue that the difficulty arises in fact not from local minima but from saddle points, i.e. points where one dimension slopes up and another slopes down. These saddle points are usually surrounded by a plateau of the same error, which makes it notoriously hard for SGD to escape, as the gradient is close to zero in all dimensions.

- Choosing a proper learning rate is usually difficult:

- List the challenges that account for the problem above:

- List the different strategies for optimizing GD:

- Adapt our updates to the slope of our error function

- Adapt our updates to the importance of each individual parameter

- List the different variants for optimizing GD:

- Adapt our updates to the slope of our error function:

- Momentum

- Nesterov Accelerated Gradient

- Adapt our updates to the importance of each individual parameter:

- Adagrad

- Adadelta

- RMSprop

- Adam

- AdaMax

- Nadam

- AMSGrad

- Adapt our updates to the slope of our error function:

- Momentum:

- Motivation:

SGD has trouble navigating ravines (i.e. areas where the surface curves much more steeply in one dimension than in another) which are common around local optima.

In these scenarios, SGD oscillates across the slopes of the ravine while only making hesitant progress along the bottom towards the local optimum. - Definitions/Algorithm:

Momentum is a method that helps accelerate SGD in the relevant direction and dampens oscillations (image^). It does this by adding a fraction \(\gamma\) of the update vector of the past time step to the current update vector:$$\begin{aligned} v_{t} &=\gamma v_{t-1}+\eta \nabla_{\theta} J(\theta) \\ \theta &=\theta-v_{t} \end{aligned}$$

-

Intuition:

Essentially, when using momentum, we push a ball down a hill. The ball accumulates momentum as it rolls downhill, becoming faster and faster on the way (until it reaches its terminal velocity if there is air resistance, i.e. \(\gamma < 1\)). The same thing happens to our parameter updates: The momentum term increases for dimensions whose gradients point in the same directions and reduces updates for dimensions whose gradients change directions. As a result, we gain faster convergence and reduced oscillation.In this case we think of the equation as:

$$v_{t} =\underbrace{\gamma}_{\text{friction }} \: \underbrace{v_{t-1}}_{\text{velocity}}+\eta \underbrace{\nabla_{\theta} J(\theta)}_ {\text{acceleration}}$$

- Parameter Settings:

The momentum term \(\gamma\) is usually set to \(0.9\) or a similar value, and \(v_0 = 0\).

- Motivation:

- Nesterov Accelerated Gradient (Momentum):

- Motivation:

Momentum is good, however, a ball that rolls down a hill, blindly following the slope, is highly unsatisfactory. We’d like to have a smarter ball, a ball that has a notion of where it is going so that it knows to slow down before the hill slopes up again. - Definitions/Algorithm:

NAG is a way to five our momentum term this kind of prescience. Since we know that we will use the momentum term \(\gamma v_{t-1}\) to move the parameters \(\theta\), we can compute a rough approximation of the next position of the parameters with \(\theta - \gamma v_{t-1}\) (w/o the gradient). This allows us to, effectively, look ahead by calculating the gradient not wrt. our current parameters \(\theta\) but wrt. the approximate future position of our parameters:$$\begin{aligned} v_{t} &=\gamma v_{t-1}+\eta \nabla_{\theta} J\left(\theta-\gamma v_{t-1}\right) \\ \theta &=\theta-v_{t} \end{aligned}$$

- Intuition:

While Momentum first computes the current gradient (small blue vector) and then takes a big jump in the direction of the updated accumulated gradient (big blue vector), NAG first makes a big jump in the direction of the previous accumulated gradient (brown vector), measures the gradient and then makes a correction (red vector), which results in the complete NAG update (green vector). This anticipatory update prevents us from going too fast and results in increased responsiveness, which has significantly increased the performance of RNNs on a number of tasks. - Parameter Settings:

\(\gamma = 0.9\), - Successful Applications:

This really helps the optimization of recurrent neural networks

- Motivation:

- Adagrad

- Motivation:

Now that we are able to adapt our updates to the slope of our error function and speed up SGD in turn, we would also like to adapt our updates to each individual parameter to perform larger or smaller updates depending on their importance. - Definitions/Algorithm:

Adagrad is an algorithm for gradient-based optimization that does just this: It adapts the learning rate to the parameters, performing smaller updates (i.e. low learning rates) for parameters associated with frequently occurring features, and larger updates (i.e. high learning rates) for parameters associated with infrequent features. - Intuition:

Adagrad uses a different learning rate for every parameter \(\theta_i\) at every time step \(t\), so, we first show Adagrad’s per-parameter update.

Adagrad per-parameter update:

The SGD update for every parameter \(\theta_i\) at each time step \(t\) is:$$\theta_{t+1, i}=\theta_{t, i}-\eta \cdot g_{t, i}$$

where \(g_{t, i}=\nabla_{\theta} J\left(\theta_{t, i}\right.\) is the partial derivative of the objective function w.r.t. to the parameter \(\theta_i\) at time step \(t\), and \(g_{t}\) is the gradient at time-step \(t\).

In its update rule, Adagrad modifies the general learning rate \(\eta\) at each time step \(t\) for every parameter \(\theta_i\) based on the past gradients that have been computed for \(\theta_i\):

$$\theta_{t+1, i}=\theta_{t, i}-\frac{\eta}{\sqrt{G_{t, i i}+\epsilon}} \cdot g_{t, i}$$

\(G_t \in \mathbb{R}^{d \times d}\) here is a diagonal matrix where each diagonal element \(i, i\) is the sum of the squares of the gradients wrt \(\theta_i\) up to time step \(t\)[^12], while \(\epsilon\) is a smoothing term that avoids division by zero (\(\approx 1e - 8\)).

As \(G_t\) contains the sum of the squares of the past gradients w.r.t. to all parameters \(\theta\) along its diagonal, we can now vectorize our implementation by performing a matrix-vector product \(\odot\) between \(G_t\) and \(g_t\):

$$\theta_{t+1}=\theta_{t}-\frac{\eta}{\sqrt{G_{t}+\epsilon}} \odot g_{t}$$

- Parameter Settings:

For the lr, Most implementations use a default value of \(0.01\) and leave it at that. - Successful Application:

Well-suited for dealing with sparse data (because it adapts the lr of each parameter wrt feature frequency) - Properties:

- Well-suited for dealing with sparse data (because it adapts the lr of each parameter wrt feature frequency)

- Eliminates need for manual tuning of lr:

One of Adagrad’s main benefits is that it eliminates the need to manually tune the learning rate. Most implementations use a default value of \(0.01\) and leave it at that. - Weakness \(\rightarrow\) Accumulation of the squared gradients in the denominator:

Since every added term is positive, the accumulated sum keeps growing during training. This in turn causes the learning rate to shrink and eventually become infinitesimally small, at which point the algorithm is no longer able to acquire additional knowledge. - Without the sqrt, the algorithm performs much worse

- Motivation:

- Adadelta

- Motivation:

Adagrad has a weakness where it suffers from aggressive, monotonically decreasing lr by accumulation of the squared gradients in the denominator. The following algorithm aims to resolve this flow. - Definitions/Algorithm:

- Intuition:

- Parameter Settings:

We set \(\gamma\) to a similar value as the momentum term, around \(0.9\). - Properties:

- Eliminates need for lr completely:

With Adadelta, we do not even need to set a default learning rate, as it has been eliminated from the update rule.

- Eliminates need for lr completely:

- Motivation:

- RMSprop

- Motivation:

RMSprop and Adadelta have both been developed independently around the same time stemming from the need to resolve Adagrad’s radically diminishing learning rates. - Definitions/Algorithm:

RMSprop is an unpublished, adaptive learning rate method proposed by Geoff Hinton in Lecture 6e of his Coursera Class. - Intuition:

RMSprop in fact is identical to the first update vector of Adadelta that we derived above:$$\begin{align} \begin{split} E[g^2]_t &= 0.9 E[g^2]_{t-1} + 0.1 g^2_t \\ \theta_{t+1} &= \theta_{t} - \dfrac{\eta}{\sqrt{E[g^2]_t + \epsilon}} g_{t} \end{split} \end{align}$$

RMSprop as well divides the learning rate by an exponentially decaying average of squared gradients.

- Parameter Settings:

Hinton suggests \(\gamma\) to be set to \(0.9\), while a good default value for the learning rate \(\eta\) is \(0.001\). - Properties:

Works well with RNNs.

- Motivation:

- Adam

- Motivation:

Adding momentum to Adadelta/RMSprop. - Definitions/Algorithm:

Adaptive Moment Estimation (Adam) is another method that computes adaptive learning rates for each parameter. In addition to storing an exponentially decaying average of past squared gradients \(v_t\) like Adadelta and RMSprop, Adam also keeps an exponentially decaying average of past gradients \(m_t\), similar to momentum. - Intuition:

Adam VS Momentum:

Whereas momentum can be seen as a ball running down a slope, Adam behaves like a heavy ball with friction, which thus prefers flat minima in the error surface. - Parameter Settings:

The authors propose default values of \(0.9\) for \(\beta_1\), \(0.999\) for \(\beta_2\), and \(10^{ −8}\) for \(\epsilon\). - Properties:

- They show empirically that Adam works well in practice and compares favorably to other adaptive learning-method algorithms.

- Kingma et al. show that its bias-correction helps Adam slightly outperform RMSprop towards the end of optimization as gradients become sparser. Insofar, Adam might be the best overall choice.

- Motivation:

- Which methods have trouble with saddle points?

SGD, Momentum, and NAG find it difficulty to break symmetry, although the two latter eventually manage to escape the saddle point, while Adagrad, RMSprop, and Adadelta quickly head down the negative slope. - How should you choose your optimizer?

- As we can see, the adaptive learning-rate methods, i.e. Adagrad, Adadelta, RMSprop, and Adam are most suitable and provide the best convergence for these scenarios.

- Sparse Input Data:

If your input data is sparse, then you likely achieve the best results using one of the adaptive learning-rate methods. An additional benefit is that you won’t need to tune the learning rate but likely achieve the best results with the default value.

- Summarize the different variants listed above. How do they compare to each other?

In summary, RMSprop is an extension of Adagrad that deals with its radically diminishing learning rates. It is identical to Adadelta, except that Adadelta uses the RMS of parameter updates in the numerator update rule. Adam, finally, adds bias-correction and momentum to RMSprop. Insofar, RMSprop, Adadelta, and Adam are very similar algorithms that do well in similar circumstances. - What’s a common choice in many research papers?

Interestingly, many recent papers use vanilla SGD without momentum and a simple learning rate annealing schedule. - List additional strategies for optimizing SGD:

- Shuffling and Curriculum Learning

- Batch Normalization

- Early Stopping

- Gradient Noise

Maximum Margin Classifiers

- Define Margin Classifiers:

A margin classifier is a classifier which is able to give an associated distance from the decision boundary for each example. - What is a Margin for a linear classifier?

The margin of a linear classifier is the distance from the decision boundary to the nearest sample point. -

Give the motivation for margin classifiers:

Non-margin classifiers (e.g. Centroid, Perceptron, LR) will converge to a correct classifier on linearly separable data; however, the classifier they converge to is not unique nor the best. - Define the notion of the “best” possible classifier

We assume that if we can maximize the distance between the data points to be classified and the hyperplane that classifies them, then we have reached a boundary that allows for the “best-fit”, i.e. allows for the most room for error. - How can we achieve the “best” classifier?

We enforce a constraint that achieves a classifier that has a maximum-margin. - What unique vector is orthogonal to the hp? Prove it:

The weight vector \(\mathbf{w}\) is orthogonal to the separating-plane/decision-boundary, defined by \(\mathbf{w}^T\mathbf{x} + b = 0\), in the \(\mathcal{X}\) space; Reason:

Since if you take any two points \(\mathbf{x}^\prime\) and \(\mathbf{x}^{\prime \prime}\) on the plane, and create the vector \(\left(\mathbf{x}^{\prime}-\mathbf{x}^{\prime \prime}\right)\) parallel to the plane by subtracting the two points, then the following equations must hold:$$\mathbf{w}^{\top} \mathbf{x}^{\prime}+b=0 \wedge \mathbf{w}^{\top} \mathbf{x}^{\prime \prime}+b=0 \implies \mathbf{w}^{\top}\left(\mathbf{x}^{\prime}-\mathbf{x}^{\prime \prime}\right)=0$$

- What do we mean by “signed distance”? Derive its formula:

The signed distance is the minimum distance from a point to a hyperplane. We solve for the signed distance to achieve the following formula for it: \(d = \dfrac{\| w \cdot x_0 + b \|}{\|w\|},\)

where we have an n-dimensional hyperplane: \(w \cdot x + b = 0\) and a point \(\mathbf{x}_ n\).

Derivation:- Suppose we have an affine hyperplane defined by \(w \cdot x + b\) and a point \(\mathbf{x}_ n\).

- Suppose that \(\mathbf{x} \in \mathbf{R}^n\) is a point satisfying \(w \cdot \mathbf{x} + b = 0\), i.e. it is a point on the plane.

- We construct the vector \(\mathbf{x}_ n−\mathbf{x}\) which points from \(\mathbf{x}\) to \(\mathbf{x}_ n\), and then, project (scalar projection==signed distance) it onto the unique vector perpendicular to the plane, i.e. \(w\),

$$d=| \text{comp}_{w} (\mathbf{x}_ n-\mathbf{x})| = \left| \frac{(\mathbf{x}_ n-\mathbf{x})\cdot w}{\|w\|} \right| = \frac{|\mathbf{x}_ n \cdot w - \mathbf{x} \cdot w|}{\|w\|}.$$

- Since \(\mathbf{x}\) is a vector on the plane, it must satisfy \(w\cdot \mathbf{x}=-b\) so we get

$$d=| \text{comp}_{w} (\mathbf{x}_ n-\mathbf{x})| = \frac{|\mathbf{x}_ n \cdot w +b|}{\|w\|}$$

Thus, we conclude that if \(\|w\| = 1\) then the signed distance from a datapoint \(X_i\) to the hyperplane is \(\|wX_i + b\|\).

- Given the formula for signed distance, calculate the “distance of the point closest to the hyperplane”:

Let \(X_n\) be the point that is closest to the plane, and let \(\hat{w} = \dfrac{w}{\|w\|}\).

Take any point \(X\) on the plane, and let \(\vec{v}\) be the vector \(\vec{v} = X_n - X\).

Now, the distance, \(d\) is equal to$$\begin{align} d & \ = \vert\hat{w}^{\top}\vec{v}\vert \\ & \ = \vert\hat{w}^{\top}(X_n - X)\vert \\ & \ = \vert\hat{w}^{\top}X_n - \hat{w}^{\top}X)\vert \\ & \ = \dfrac{1}{\|w\|}\vert w^{\top}X_n + b - w^{\top}X) - b\vert , & \text{we add and subtract the bias } b\\ & \ = \dfrac{1}{\|w\|}\vert (w^{\top}X_n + b) - (w^{\top}X + b)\vert \\ & \ = \dfrac{1}{\|w\|}\vert (w^{\top}X_n + b) - (0)\vert , & \text{from the eq. of the plane on a point on the plane} \\ & \ = \dfrac{\vert (w^{\top}X_n + b)\vert}{\|w\|} \end{align} $$

- Use geometric properties of the hp to Simplify the expression for the distance of the closest point to the hp, above

First, we notice that for any given plane \(w^Tx = 0\), the equations, \(\gamma * w^Tx = 0\), where \(\gamma \in \mathbf{R}\) is a scalar, basically characterize the same plane and not many planes.

This is because \(w^Tx = 0 \iff \gamma * w^Tx = \gamma * 0 \iff \gamma * w^Tx = 0\).

The above implies that any model that takes input \(w\) and produces a margin, will have to be Scale Invariant.

To get around this and simplify the analysis, I am going to consider all the representations of the same plane, and I am going to pick one where we normalize (re-scale) the weight \(w\) such that the signed distance (distance to the point closest to the margin) is equal to one:$$|w^Tx_n| > 0 \rightarrow |w^Tx_n| = 1$$

, where \(x_n\) is the point closest to the plane.

We constraint the hyperplane by normalizing \(w\) to this equation \(|w^Tx_i| = 1\) or with added bias, \(|w^Tx_i + b| = 1\).

\(\implies\)$$\begin{align} d & \ = \dfrac{\vert (w^{\top}X_n + b)\vert}{\|w\|} \\ & \ = \dfrac{\vert (1)\vert}{\|w\|} , & \text{from the constraint on the distance of the closest point} \\ & \ = \dfrac{1}{\|w\|} \end{align} $$

- Characterize the margin, mathematically:

we can characterize the margin, with its size, as the distance, \(\frac{1}{\|\mathbf{w}\|}\), between the hyperplane/boundary and the closest point to the plane \(\mathbf{x}_ n\), in both directions (multiply by 2) \(= \frac{2}{\|\mathbf{w}\|}\) ; given the condition we specified earlier \(\left|\mathbf{w}^{\top} \mathbf{x}_ {n} + b\right|=1\) for the closest point \(\mathbf{x}_ n\). - Characterize the “Slab Existence”:

The analysis done above allows us to conclusively prove that there exists a slab of width \(\dfrac{2}{\|w\|}\) containing no sample points where the hyperplane runs through (bisects) its center. - Formulate the optimization problem of maximizing the margin wrt analysis above:

We formulate the optimization problem of maximizing the margin by maximizing the distance, subject to the condition on how we derived the distance:$$\max_{\mathbf{w}} \dfrac{2}{\|\mathbf{w}\|} \:\:\: : \:\: \min _{n=1,2, \ldots, N}\left|\mathbf{w}^{\top} \mathbf{x}_{n}+b\right|=1$$

- Reformulate the optimization problem above to a more “friendly” version (wrt optimization -> put in standard form):

We can reformulate by (1) Flipping and Minimizing, (2) Taking a square since it’s monotonic and convex, and (3) noticing that \(\left|\mathbf{w}^{T} \mathbf{x}_ {n}+b\right|=y_{n}\left(\mathbf{w}^{T} \mathbf{x}_ {n}+b\right)\) (since the signal and label must agree, their product will always be positive) and the \(\min\) operator can be replaced by ensuring that for all the points the condition \(y_{n}\left(\mathbf{w}^{\top} \mathbf{x}_ {n}+b\right) \geq 1\) holds proof (by contradiction) as:$$\min_w \dfrac{1}{2} \mathbf{w}^T\mathbf{w} \:\:\: : \:\: y_{n}\left(\mathbf{w}^{\top} \mathbf{x}_ {n}+b\right) \geq 1 \:\: \forall i \in [1,N]$$

Now when we solve the “friendly” equation above, we will get the separating plane with the best possible margin (best=biggest).

- Give the final (standard) formulation of the “Optimization problem for maximum margin classifiers”:

$$\min_w \dfrac{1}{2} \mathbf{w}^T\mathbf{w} \:\:\: : \:\: y_{n}\left(\mathbf{w}^{\top} \mathbf{x}_ {n}+b\right) \geq 1 \:\: \forall i \in [1,N]$$

- What kind of formulation is it (wrt optimization)? What are the parameters?

The above problem is a Quadratic Program, in \(d + 1\)-dimensions and \(n\)-constraints, in standard form.

- Give the final (standard) formulation of the “Optimization problem for maximum margin classifiers”:

Hard-Margin SVMs

- Define:

- SVMs:

1.*Support Vector Machines** (SVMs) are supervised learning models with associated learning algorithms that analyze data used for classification and regression analysis.

The SVM is a Maximum Margin Classifier that aims to find the “maximum-margin hyperplane” that divides the group of points \({\displaystyle {\vec {x}}_{i}} {\vec {x}}_{i}\) for which \({\displaystyle y_{i}=1}\) from the group of points for which \({\displaystyle y_{i}=-1}\). - Support Vectors:

1.*Support Vectors** are the data-points that lie exactly on the margin (i.e. on the boundary of the slab).

They satisfy \(\|w^TX' + b\| = 1, \forall\) support vectors \(X'\) - Hard-Margin SVM:

The Hard-Margin SVM is just a maximum-margin classifier with features and kernels (discussed later).

- SVMs:

- Define the following wrt hard-margin SVM:

- Goal:

Find weights ‘\(w\)’ and scalar ‘\(b\)’ that correctly classifies the data-points and, moreover, does so in the “best” possible way. - Procedure:

(1) Use a linear classifier (2) But, Maximize the Margin (3) Do so by Minimizing \(\|w\|\) - Decision Function:

$${\displaystyle f(x)={\begin{cases}1&{\text{if }}\ w\cdot X_i+\alpha>0\\0&{\text{otherwise}}\end{cases}}}$$

- Constraints:

$$y_i(wX_i + b) \geq 1, \forall i \in [1,n]$$

- The Optimization Problem:

Find weights ‘\(w\)’ and scalar ‘\(b\)’ that minimize$$ \dfrac{1}{2} w^Tw$$

Subject to

$$y_i(wX_i + b) \geq 1, \forall i \in [1,n]$$

Formally,

$$\min_w \dfrac{1}{2}w^Tw \:\:\: : \:\: y_i(wX_i + b) \geq 1, \forall i \in [1,n]$$

- The Optimization Method:

The SVM optimization problem reduces to a Quadratic Program.

- Goal:

- Elaborate on the generalization analysis:

We notice that, geometrically, the hyperplane (the maximum margin classifier) is completely characterized by the support vectors (the vectors that lie on the margin).

A very important conclusion arises.

The maximum margin classifier (SVM) depends only on the number of support vectors and not on the dimension of the problem.

This implies that the computation doesn’t scale up with the dimension and, also implies, that the kernel trick works very well. - List the properties:

- In addition to performing linear classification, SVMs can efficiently perform a non-linear classification using what is called the kernel trick, implicitly mapping their inputs into high-dimensional feature spaces.

- The hyperplane is determined solely by its support vectors.

- The SVM always converges on linearly separable data.

- The Hard-Margin SVM fails if the data is not linearly separable.

- The Hard-Margin SVM is quite sensitive to outliers

- Give the solution to the optimization problem for H-M SVM:

To solve the above problem, we need something that deals with inequality constraints; thus, we use the KKT method for solving a Lagrnagian under inequality constraints.

The Lagrange Formulation:- Formulate the Lagrangian:

- Take each inequality constraint and put them in the zero-form (equality with Zero)

- Multiply each inequality by a Lagrange Multiplier \(\alpha_n\)

- Add them to the objective function \(\min_w \dfrac{1}{2} \mathbf{w}^T\mathbf{w}\)

The sign will be \(-\) (negative) simply because the inequality is \(\geq 0\)

$$\min_{w, b} \max_{\alpha_n} \mathcal{L}(\mathbf{w}, b, \boldsymbol{\alpha}) = \dfrac{1}{2} \mathbf{w}^T\mathbf{w} -\sum_{n=1}^{N} \alpha_{n}\left(y_{n}\left(\mathbf{w}^{\top} \mathbf{x}_ {n}+b\right)-1\right) \:\:\: : \:\: \alpha_n \geq 0$$

- Optimize the objective independently, for each of the unconstrained variables:

- Gradient w.r.t. \(\mathbf{w}\):

$$\nabla_{\mathrm{w}} \mathcal{L}=\mathrm{w}-\sum_{n=1}^{N} \alpha_{n} y_{n} \mathrm{x}_ {n}=0 \\ \implies \\ \mathbf{w}=\sum_{n=1}^{N} \alpha_{n} y_{n} \mathbf{x}_ {n}$$

- Derivative w.r.t. \(b\):

$$\frac{\partial \mathcal{L}}{\partial b}=-\sum_{n=1}^{N} \alpha_{n} y_{n}=0 \\ \implies \\ \sum_{n=1}^{N} \alpha_{n} y_{n}=0$$

- Gradient w.r.t. \(\mathbf{w}\):

- Get the Dual Formulation w.r.t. the (tricky) constrained variable \(\alpha_n\):

- Substitute with the above conditions in the original lagrangian (such that the optimization w.r.t. \(\alpha_n\) will become free of \(\mathbf{w}\) and \(b\):

$$\mathcal{L}(\boldsymbol{\alpha})=\sum_{n=1}^{N} \alpha_{n}-\frac{1}{2} \sum_{n=1}^{N} \sum_{m=1}^{N} y_{n} y_{m} \alpha_{n} \alpha_{m} \mathbf{x}_{n}^{\mathrm{T}} \mathbf{x}_{m}$$

- Notice that the first constraint \(\mathbf{w}=\sum_{n=1}^{N} \alpha_{n} y_{n} \mathbf{x}_ {n}\) has-no-effect/doesn’t-constraint \(\alpha_n\) so it’s a vacuous constraint. However, not the second constraint \(\sum_{n=1}^{N} \alpha_{n} y_{n}=0\).

- Set the optimization objective and the constraints, a quadratic function in \(\alpha_n\):

$$\max_{\alpha} \mathcal{L}(\boldsymbol{\alpha})=\sum_{n=1}^{N} \alpha_{n}-\frac{1}{2} \sum_{n=1}^{N} \sum_{m=1}^{N} y_{n} y_{m} \alpha_{n} \alpha_{m} \mathbf{x}_{n}^{\mathrm{T}} \mathbf{x}_{m} \\ \:\:\:\:\:\:\:\:\:\: : \:\: \alpha_n \geq 0 \:\: \forall \: n= 1, \ldots, N \:\: \wedge \:\: \sum_{n=1}^{N} \alpha_{n} y_{n}=0$$

- Substitute with the above conditions in the original lagrangian (such that the optimization w.r.t. \(\alpha_n\) will become free of \(\mathbf{w}\) and \(b\):

- Set the problem as a Quadratic Programming problem:

- Change the maximization to minimization by flipping the signs:

$$\min _{\alpha} \frac{1}{2} \sum_{n=1}^{N} \sum_{m=1}^{N} y_{n} y_{m} \alpha_{n} \alpha_{m} \mathbf{x}_{0}^{\mathrm{T}} \mathbf{x}_{m}-\sum_{n=1}^{N} \alpha_{n}$$

- Isolate the Coefficients from the \(\alpha_n\)s and set in matrix-form:

$$\min _{\alpha} \frac{1}{2} \alpha^{\top} \underbrace{\begin{bmatrix} y_{1} y_{1} \mathbf{x}_{1}^{\top} \mathbf{x}_{1} & y_{1} y_{2} \mathbf{x}_{1}^{\top} \mathbf{x}_{2} & \ldots & y_{1} y_{N} \mathbf{x}_{1}^{\top} \mathbf{x}_{N} \\ y_{2} y_{1} \mathbf{x}_{2}^{\top} \mathbf{x}_{1} & y_{2} y_{2} \mathbf{x}_{2}^{\top} \mathbf{x}_{2} & \ldots & y_{2} y_{N} \mathbf{x}_{2}^{\top} \mathbf{x}_{N} \\ \ldots & \ldots & \ldots & \ldots \\ y_{N} y_{1} \mathbf{x}_{N}^{\top} \mathbf{x}_{1} & y_{N} y_{2} \mathbf{x}_{N}^{\top} \mathbf{x}_{2} & \ldots & y_{N} y_{N} \mathbf{x}_{N}^{\top} \mathbf{x}_{N} \end{bmatrix}}_{\text{quadratic coefficients}} \alpha+\underbrace{\left(-1^{\top}\right)}_ {\text {linear }} \alpha \\ \:\:\:\:\:\:\:\:\:\: : \:\: \underbrace{\mathbf{y}^{\top} \boldsymbol{\alpha}=0}_{\text {linear constraint }} \:\: \wedge \:\: \underbrace{0}_{\text {lower bounds }} \leq \alpha \leq \underbrace{\infty}_{\text {upper bounds }} $$

The Quadratic Programming Package asks you for the Quadratic Term (Matrix) and the Linear Term, and for the Linear Constraint and the Range of \(\alpha_n\)s; and then, gives you back an \(\mathbf{\alpha}\).

Equivalently:

$$\min _{\alpha} \frac{1}{2} \boldsymbol{\alpha}^{\mathrm{T}} \mathrm{Q} \boldsymbol{\alpha}-\mathbf{1}^{\mathrm{T}} \boldsymbol{\alpha} \quad \text {subject to } \quad \mathbf{y}^{\mathrm{T}} \boldsymbol{\alpha}=0 ; \quad \boldsymbol{\alpha} \geq \mathbf{0}$$

- Change the maximization to minimization by flipping the signs:

- What method does it require to be solved:

To solve the above problem, we need something that deals with inequality constraints; thus, we use the KKT method for solving a Lagrnagian under inequality constraints. - Formulate the Lagrangian:

- Take each inequality constraint and put them in the zero-form (equality with Zero)

- Multiply each inequality by a Lagrange Multiplier \(\alpha_n\)

- Add them to the objective function \(\min_w \dfrac{1}{2} \mathbf{w}^T\mathbf{w}\)

The sign will be \(-\) (negative) simply because the inequality is \(\geq 0\)

$$\min_{w, b} \max_{\alpha_n} \mathcal{L}(\mathbf{w}, b, \boldsymbol{\alpha}) = \dfrac{1}{2} \mathbf{w}^T\mathbf{w} -\sum_{n=1}^{N} \alpha_{n}\left(y_{n}\left(\mathbf{w}^{\top} \mathbf{x}_ {n}+b\right)-1\right) \:\:\: : \:\: \alpha_n \geq 0$$

- Optimize the objective for each variable:

- Gradient w.r.t. \(\mathbf{w}\):

$$\nabla_{\mathrm{w}} \mathcal{L}=\mathrm{w}-\sum_{n=1}^{N} \alpha_{n} y_{n} \mathrm{x}_ {n}=0 \\ \implies \\ \mathbf{w}=\sum_{n=1}^{N} \alpha_{n} y_{n} \mathbf{x}_ {n}$$

- Derivative w.r.t. \(b\):

$$\frac{\partial \mathcal{L}}{\partial b}=-\sum_{n=1}^{N} \alpha_{n} y_{n}=0 \\ \implies \\ \sum_{n=1}^{N} \alpha_{n} y_{n}=0$$

- Gradient w.r.t. \(\mathbf{w}\):

- Get the Dual Formulation w.r.t. the (tricky) constrained variable \(\alpha_n\):

- Substitute with the above conditions in the original lagrangian (such that the optimization w.r.t. \(\alpha_n\) will become free of \(\mathbf{w}\) and \(b\):

$$\mathcal{L}(\boldsymbol{\alpha})=\sum_{n=1}^{N} \alpha_{n}-\frac{1}{2} \sum_{n=1}^{N} \sum_{m=1}^{N} y_{n} y_{m} \alpha_{n} \alpha_{m} \mathbf{x}_{n}^{\mathrm{T}} \mathbf{x}_{m}$$

- Notice that the first constraint \(\mathbf{w}=\sum_{n=1}^{N} \alpha_{n} y_{n} \mathbf{x}_ {n}\) has-no-effect/doesn’t-constraint \(\alpha_n\) so it’s a vacuous constraint. However, not the second constraint \(\sum_{n=1}^{N} \alpha_{n} y_{n}=0\).

- Set the optimization objective and the constraints, a quadratic function in \(\alpha_n\):

$$\max_{\alpha} \mathcal{L}(\boldsymbol{\alpha})=\sum_{n=1}^{N} \alpha_{n}-\frac{1}{2} \sum_{n=1}^{N} \sum_{m=1}^{N} y_{n} y_{m} \alpha_{n} \alpha_{m} \mathbf{x}_{n}^{\mathrm{T}} \mathbf{x}_{m} \\ \:\:\:\:\:\:\:\:\:\: : \:\: \alpha_n \geq 0 \:\: \forall \: n= 1, \ldots, N \:\: \wedge \:\: \sum_{n=1}^{N} \alpha_{n} y_{n}=0$$

- Substitute with the above conditions in the original lagrangian (such that the optimization w.r.t. \(\alpha_n\) will become free of \(\mathbf{w}\) and \(b\):

- Set the problem as a Quadratic Programming problem:

- Change the maximization to minimization by flipping the signs:

$$\min _{\alpha} \frac{1}{2} \sum_{n=1}^{N} \sum_{m=1}^{N} y_{n} y_{m} \alpha_{n} \alpha_{m} \mathbf{x}_{0}^{\mathrm{T}} \mathbf{x}_{m}-\sum_{n=1}^{N} \alpha_{n}$$

- Isolate the Coefficients from the \(\alpha_n\)s and set in matrix-form:

$$\min _{\alpha} \frac{1}{2} \alpha^{\top} \underbrace{\begin{bmatrix} y_{1} y_{1} \mathbf{x}_{1}^{\top} \mathbf{x}_{1} & y_{1} y_{2} \mathbf{x}_{1}^{\top} \mathbf{x}_{2} & \ldots & y_{1} y_{N} \mathbf{x}_{1}^{\top} \mathbf{x}_{N} \\ y_{2} y_{1} \mathbf{x}_{2}^{\top} \mathbf{x}_{1} & y_{2} y_{2} \mathbf{x}_{2}^{\top} \mathbf{x}_{2} & \ldots & y_{2} y_{N} \mathbf{x}_{2}^{\top} \mathbf{x}_{N} \\ \ldots & \ldots & \ldots & \ldots \\ y_{N} y_{1} \mathbf{x}_{N}^{\top} \mathbf{x}_{1} & y_{N} y_{2} \mathbf{x}_{N}^{\top} \mathbf{x}_{2} & \ldots & y_{N} y_{N} \mathbf{x}_{N}^{\top} \mathbf{x}_{N} \end{bmatrix}}_{\text{quadratic coefficients}} \alpha+\underbrace{\left(-1^{\top}\right)}_ {\text {linear }} \alpha \\ \:\:\:\:\:\:\:\:\:\: : \:\: \underbrace{\mathbf{y}^{\top} \boldsymbol{\alpha}=0}_{\text {linear constraint }} \:\: \wedge \:\: \underbrace{0}_{\text {lower bounds }} \leq \alpha \leq \underbrace{\infty}_ {\text {upper bounds }} $$

- Change the maximization to minimization by flipping the signs:

- What are the inputs and outputs to the Quadratic Program Package?

The Quadratic Programming Package asks you for the Quadratic Term (Matrix) and the Linear Term, and for the Linear Constraint and the Range of \(\alpha_n\)s; and then, gives you back an \(\mathbf{\alpha}\). - Give the final form of the optimization problem in standard form:

$$\min_{\alpha} \frac{1}{2} \boldsymbol{\alpha}^{\mathrm{T}} \mathrm{Q} \boldsymbol{\alpha}-\mathbf{1}^{\mathrm{T}} \boldsymbol{\alpha} \quad \text {subject to } \quad \mathbf{y}^{\mathrm{T}} \boldsymbol{\alpha}=0 ; \quad \boldsymbol{\alpha} \geq \mathbf{0}$$

- Formulate the Lagrangian:

Soft-Margin SVM

- Motivate the soft-margin SVM:

The Hard-Margin SVM faces a few issues:- The Hard-Margin SVM fails if the data is not linearly separable.

- The Hard-Margin SVM is quite sensitive to outliers

The Soft-Margin SVM aims to fix/reconcile these problems.

- What is the main idea behind it?

Allow some points to violate the margin, by introducing slack variables. - Define the following wrt soft-margin SVM:

- Goal:

Find weights ‘\(w\)’ and scalar ‘\(b\)’ that correctly classifies the data-points and, moreover, does so in the “best” possible way, but allow some points to violate the margin, by introducing slack variables. - Procedure:

(1) Use a linear classifier

(2) But, Maximize the Margin

(3) Do so by Minimizing \(\|w\|\)

(4) But allow some points to penetrate the margin - Decision Function:

- Constraints:

$$y_i(wX_i + b) \geq 1 - \zeta_i, \forall i \in [1,n]$$

where the \(\zeta_i\)s are slack variables.

We, also, enforce the non-negativity constraint on the slack variables:$$\zeta_i \geq 0, \:\:\: \forall i \in [1, n]$$

- Why is there a non-negativity constraint?

The non-negativity constraint forces the slack variables to be zero for all points that do not violate the original constraint:

i.e. are not inside the slab.

- Why is there a non-negativity constraint?

- Objective/Cost Function:

$$ R(w) = \dfrac{1}{2} w^Tw + C \sum_{i=1}^n \zeta_i$$

- The Optimization Problem:

Find weights ‘\(w\)’, scalar ‘\(b\)’, and \(\zeta_i\)s that minimize$$ \dfrac{1}{2} w^Tw + C \sum_{i=1}^n \zeta_i$$

Subject to

$$y_i(wX_i + b) \geq 1 - \zeta_i, \zeta_i \geq 0, \forall i \in [1,n]$$

Formally,

$$\min_w \dfrac{1}{2}w^Tw \:\:\: : \:\: y_i(wX_i + b) \geq 1 - \zeta_i, \:\: \zeta_i \geq 0, \forall i \in [1,n]$$

- The Optimization Method:

The SVM optimization problem reduces to a Quadratic Program in \(d + n + 1\)-dimensions and \(2n\)-constraints. - Properties:

- The Soft-Margin SVM will converge on non-linearly separable data.

- Goal:

-

Specify the effects of the regularization hyperparameter \(C\):

Small C Large C Desire Maximizing Margin = \(\dfrac{1}{|w|}\) keep most slack variables zero or small Danger underfitting (High Misclassification) overfitting (awesome training, awful test) outliers less sensitive very sensitive boundary more “flat” more sinuous - Describe the effect wrt over/under fitting:

Increase \(C\) hparam in SVM = causes overfitting

- Describe the effect wrt over/under fitting:

- How do we choose \(C\)?

We choose ‘\(C\)’ with cross-validation. - Give an equivalent formulation in the standard form objective for function estimation (what should it minimize?)

In function estimation we prefer the standard-form objective to minimize (and trade-off); the loss + penalty form.

We introduce a loss function to moderate the use of the slack variables (i.e. to avoid abusing the slack variables), namely, Hinge Loss:$${\displaystyle \max \left(0, 1-y_{i}({\vec {w}}\cdot {\vec {x}}_ {i}-b)\right)}$$

The motivation: We motivate it by comparing it to the traditional \(0-1\) Loss function.

Notice that the \(0-1\) loss is actually non-convex. It has an infinite slope at \(0\). On the other hand, the hinge loss is actually convex.Analysis wrt maximum margin:

This function is zero if the constraint, \(y_{i}({\vec {w}}\cdot {\vec {x}}_ {i}-b)\geq 1\), is satisfied, in other words, if \({\displaystyle {\vec {x}}_{i}} {\vec {x}}_{i}\) lies on the correct side of the margin.

For data on the wrong side of the margin, the function’s value is proportional to the distance from the margin.Modified Objective Function:

$$R(w) = \dfrac{\lambda}{2} w^Tw + \sum_{i=1}^n {\displaystyle \max \left(0, 1-y_{i}({\vec {w}}\cdot {\vec {x}}_ {i}-b)\right)}$$

Proof of equivalence:

$$\begin{align} y_if\left(x_i\right) & \ \geq 1-\zeta_i, & \text{from 1st constraint } \\ \implies \zeta_i & \ \geq 1-y_if\left(x_i\right) \\ \zeta_i & \ \geq 1-y_if\left(x_i\right) \geq 0, & \text{from 2nd positivity constraint on} \zeta_i \\ \iff \zeta_i & \ \geq \max \{0, 1-y_if\left(x_i\right)\} \\ \zeta_i & \ = \max \{0, 1-y_if\left(x_i\right)\}, & \text{minimizing means } \zeta_i \text{reach lower bound}\\ \implies R(w) & \ = \dfrac{\lambda}{2} w^Tw + \sum_{i=1}^n {\displaystyle \max \left(0, 1-y_{i}({\vec {w}}\cdot {\vec {x}}_ {i}-b)\right)}, & \text{plugging in and multplying } \lambda = \dfrac{1}{C} \end{align}$$

The (reformulated) Optimization Problem:

$$\min_{w, b}\dfrac{\lambda}{2} w^Tw + \sum_{i=1}^n {\displaystyle \max \left(0, 1-y_{i}({\vec {w}}\cdot {\vec {x}}_ {i}-b)\right)}$$

Loss Functions

- Define:

-

Loss Functions - Abstractly and Mathematically:

Abstractly, a loss function or cost function is a function that maps an event or values of one or more variables onto a real number, intuitively, representing some “cost” associated with the event.Formally, a loss function is a function \(L :(\hat{y}, y) \in \mathbb{R} \times Y \longmapsto L(\hat{y}, y) \in \mathbb{R}\) that takes as inputs the predicted value \(\hat{y}\) corresponding to the real data value \(y\) and outputs how different they are.

- Distance-Based Loss Functions:

A Loss function \(L(\hat{y}, y)\) is called distance-based if it:- Only depends on the residual:

$$L(\hat{y}, y) = \psi(y-\hat{y}) \:\: \text{for some } \psi : \mathbb{R} \longmapsto \mathbb{R}$$

- Loss is \(0\) when residual is \(0\):

$$\psi(0) = 0$$

- What are they used for?

Regression. - Describe an important property of dist-based losses:

Translation Invariance:

Distance-based losses are translation-invariant:$$L(\hat{y}+a, y+a) = L(\hat{y}, y)$$

- Only depends on the residual:

- Relative Error - What does it lack?

Relative-Error \(\dfrac{\hat{y}-y}{y}\) is a more natural loss but it is NOT translation-invariant.

-

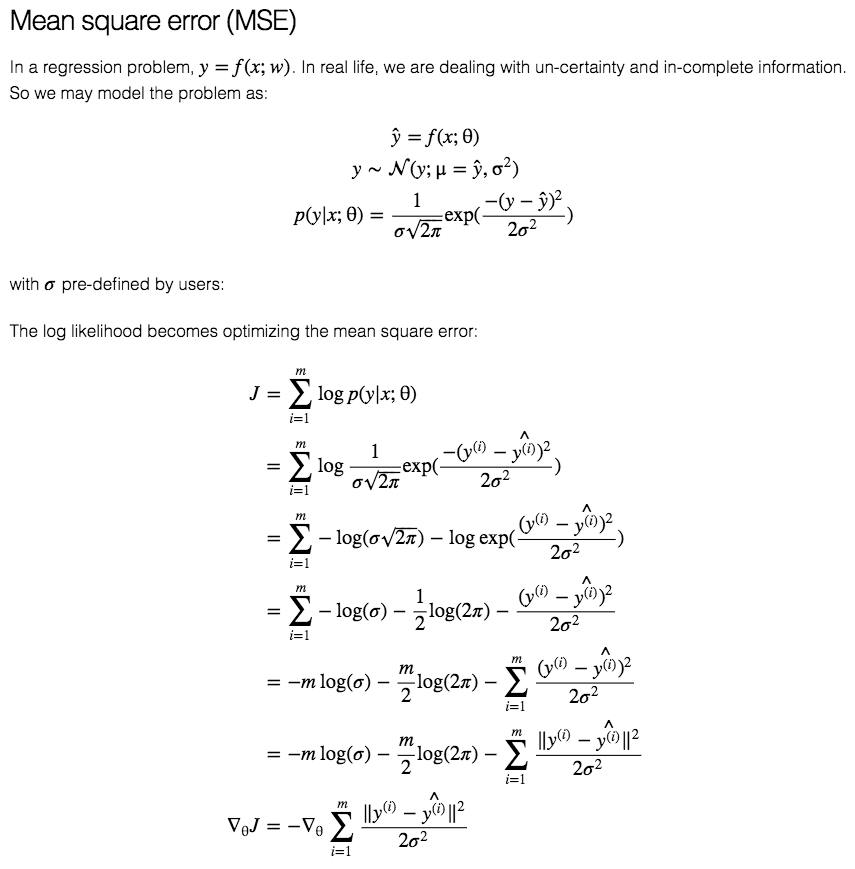

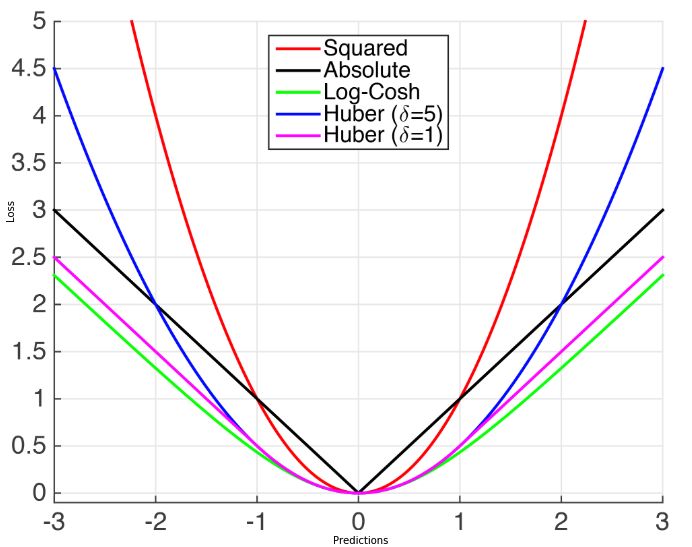

- List 3 Regression Loss Functions

- MSE

- MAE

- Huber

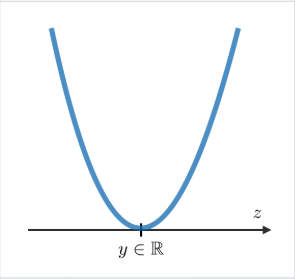

- MSE

- What does it minimize:

The MSE minimizes the sum of squared differences between the predicted values and the target values. - Formula:

$$L(\hat{y}, y) = \dfrac{1}{n} \sum_{i=1}^{n}\left(y_{i}-\hat{y}_ {i}\right)^{2}$$

- Graph:

- Derivation:

- What does it minimize:

- MAE

- What does it minimize:

The MAE minimizes the sum of absolute differences between the predicted values and the target values. - Formula:

$$L(\hat{y}, y) = \dfrac{1}{n} \sum_{i=1}^{n}\vert y_{i}-\hat{y}_ {i}\vert$$

- Graph:

- Derivation:

- List properties:

- Solution may be Non-unique

- Robustness to outliers

- Unstable Solutions:

The instability property of the method of least absolute deviations means that, for a small horizontal adjustment of a datum, the regression line may jump a large amount. The method has continuous solutions for some data configurations; however, by moving a datum a small amount, one could “jump past” a configuration which has multiple solutions that span a region. After passing this region of solutions, the least absolute deviations line has a slope that may differ greatly from that of the previous line. In contrast, the least squares solutions is stable in that, for any small adjustment of a data point, the regression line will always move only slightly; that is, the regression parameters are continuous functions of the data. - Data-points “Latching” ref:

- Unique Solution:

If there are \(k\) features (including the constant), then at least one optimal regression surface will pass through \(k\) of the data points; unless there are multiple solutions. - Multiple Solutions:

The region of valid least absolute deviations solutions will be bounded by at least \(k\) lines, each of which passes through at least \(k\) data points.

- Unique Solution:

- What does it minimize:

- Huber Loss

- AKA:

Smooth Mean Absolute Error - What does it minimize:

- Formula:

$$L(\hat{y}, y) = \left\{\begin{array}{cc}{\frac{1}{2}(y-\hat{y})^{2}} & {\text {if }|(y-\hat{y})|<\delta} \\ {\delta(y-\hat{y})-\frac{1}{2} \delta} & {\text {otherwise }}\end{array}\right.$$

- Graph:

- List properties:

- It’s less sensitive to outliers than the MSE as it treats error as square only inside an interval.

- AKA:

-

Analyze MSE vs MAE ref:

| MSE | MAE | | Sensitive to outliers | Robust to outliers | | Differentiable Everywhere | Non-Differentiable at \(0\) | | Stable Solutions | Unstable Solutions | | Unique Solution | Possibly multiple solutions |- Statistical Efficiency:

- “For normal observations MSE is about \(12\%\) more efficient than MAE” - Fisher

- \(1\%\) Error is enough to make MAE more efficient

- 2/1000 bad observations, make the median more efficient than the mean

- Subgradient methods are slower than gradient descent

- you get a lot better convergence rate guarantees for MSE

- Statistical Efficiency:

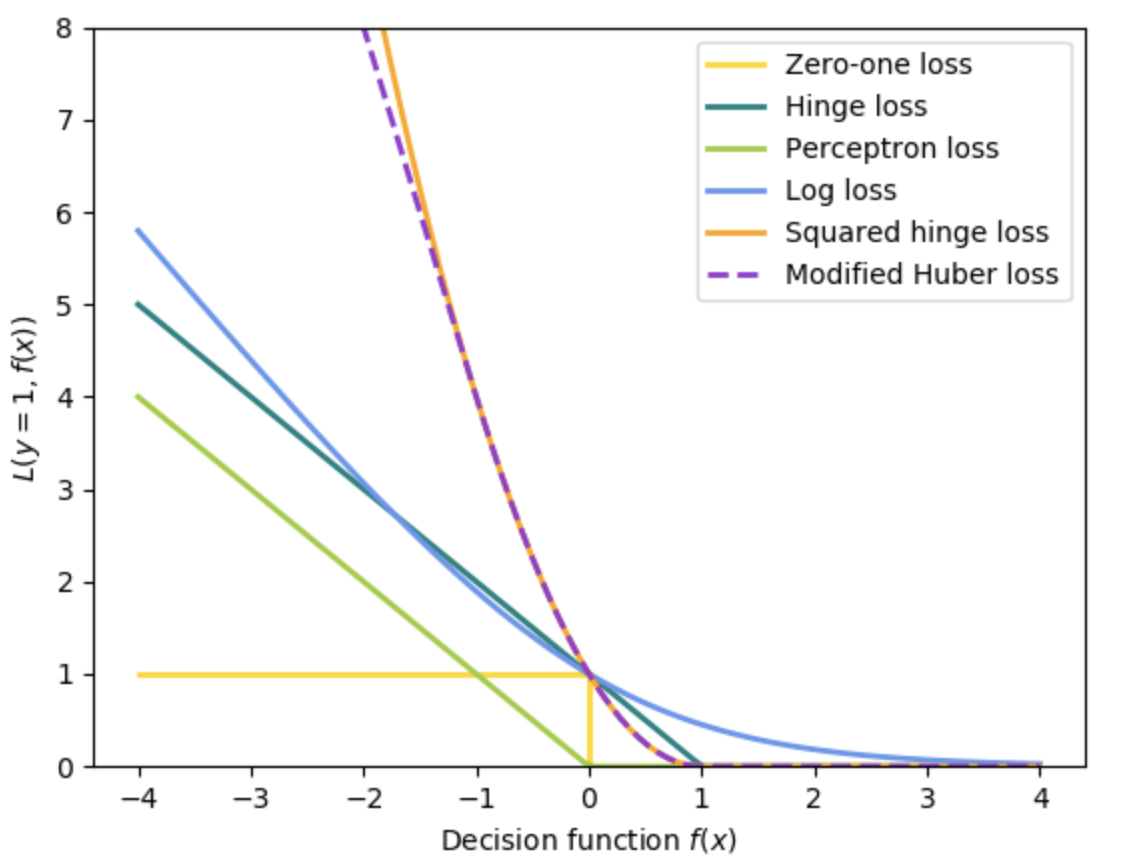

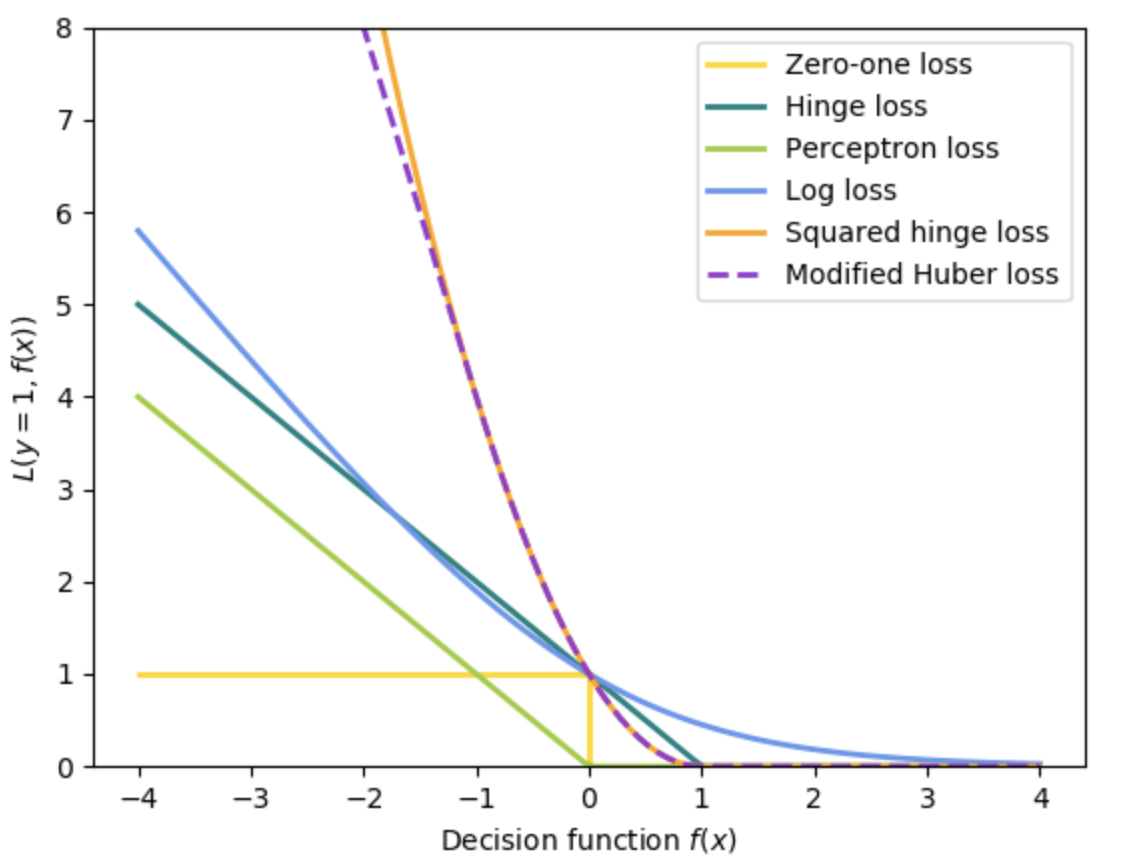

- List 7 Classification Loss Functions

- \(0-1\) Loss

- Square Loss

- Hinge Loss

- Logistic Loss

- Cross-Entropy

- Exponential Loss

- Perceptron Loss

- \(0-1\) loss

- What does it minimize:

It measures accuracy, and minimizes mis-classification error/rate. - Formula:

$$L(\hat{y}, y) = I(\hat{y} \neq y) = \left\{\begin{array}{ll}{0} & {\hat{y}=y} \\ {1} & {\hat{y} \neq y}\end{array}\right.$$

- Graph:

- What does it minimize:

- MSE

- Formula:

$$L(\hat{y}, y) = (1-y \hat{y})^{2}$$

- Graph:

- Derivation (for classification) - give assumptions:

We can write the loss in terms of the margin \(m = y\hat{y}\):

\(L(\hat{y}, y)=(y - \hat{y})^{2}=(1-y\hat{y})^{2}=(1-m)^{2}\)Since \(y \in {-1,1} \implies y^2 = 1\)

- Properties:

- Convex

- Smooth

- Sensitive to outliers: Penalizes outliers excessively

- ^Slower Convergence Rate (wrt sample complexity) than logistic or hinge loss

- Functions which yield high values of \(f({\vec {x}})\) for some \(x\in X\) will perform poorly with the square loss function, since high values of \(yf({\vec {x}})\) will be penalized severely, regardless of whether the signs of \(y\) and \(f({\vec {x}})\) match.

- Formula:

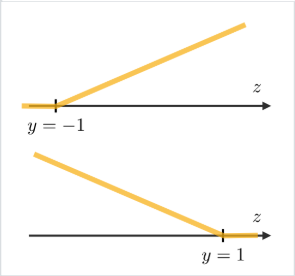

- Hinge Loss

- What does it minimize:

It minimizes missclassification wrt penetrating a margin \(\rightarrow\) maximizes a margin. - Formula:

$$L(\hat{y}, y) = \max (0,1-y \hat{y})=|1-y \hat{y}|_ {+}$$

- Graph:

- Properties:

- Continuous, Convex, Non-Differentiable

- The hinge loss provides a relatively tight, convex upper bound on the \(0–1\) indicator function

- Describe the properties of the Hinge loss and why it is used?

- Hinge loss upper bounds 0-1 loss

- It is the tightest convex upper bound on the 0/1 loss

- Minimizing 0-1 loss is NP-hard in the worst-case

- What does it minimize:

- Logistic Loss

- AKA:

Log-Loss, Logarithmic Loss - What does it minimize:

Minimizes the Kullback-Leibler divergence between the empirical distribution and the predicted distribution. - Formula:

$$L(\hat{y}, y) = \log{\left(1+e^{-y \hat{y}}\right)}$$

- Graph:

- Derivation:

We get the likelihood of the dataset \(\mathcal{D}=\left(\mathbf{x}_{1}, y_{1}\right), \ldots,\left(\mathbf{x}_{N}, y_{N}\right)\):$$\prod_{n=1}^{N} P\left(y_{n} | \mathbf{x}_{n}\right) =\prod_{n=1}^{N} \theta\left(y_{n} \mathbf{w}^{\mathrm{T}} \mathbf{x}_ {n}\right)$$

- Maximize:

$$\prod_{n=1}^{N} \theta\left(y_{n} \mathbf{w}^{\top} \mathbf{x}_ {n}\right)$$

- Take the natural log to avoid products:

$$\ln \left(\prod_{n=1}^{N} \theta\left(y_{n} \mathbf{w}^{\top} \mathbf{x}_ {n}\right)\right)$$

Motivation:

- The inner quantity is non-negative and non-zero.

- The natural log is monotonically increasing (its max, is the max of its argument)

- Take the average (still monotonic):

$$\frac{1}{N} \ln \left(\prod_{n=1}^{N} \theta\left(y_{n} \mathbf{w}^{\top} \mathbf{x}_ {n}\right)\right)$$

- Take the negative and Minimize:

$$-\frac{1}{N} \ln \left(\prod_{n=1}^{N} \theta\left(y_{n} \mathbf{w}^{\top} \mathbf{x}_ {n}\right)\right)$$

- Simplify:

$$=\frac{1}{N} \sum_{n=1}^{N} \ln \left(\frac{1}{\theta\left(y_{n} \mathbf{w}^{\tau} \mathbf{x}_ {n}\right)}\right)$$

- Substitute \(\left[\theta(s)=\frac{1}{1+e^{-s}}\right]\):

$$\frac{1}{N} \sum_{n=1}^{N} \underbrace{\ln \left(1+e^{-y_{n} \mathbf{w}^{\top} \mathbf{x}_{n}}\right)}_{e\left(h\left(\mathbf{x}_{n}\right), y_{n}\right)}$$

- Use this as the Cross-Entropy Error Measure:

$$E_{\mathrm{in}}(\mathrm{w})=\frac{1}{N} \sum_{n=1}^{N} \underbrace{\ln \left(1+e^{-y_{n} \mathrm{w}^{\top} \mathbf{x}_{n}}\right)}_{\mathrm{e}\left(h\left(\mathrm{x}_{n}\right), y_{n}\right)}$$

- Maximize:

- Properties:

- Convex

- Grows linearly for negative values which make it less sensitive to outliers

- The logistic loss function does not assign zero penalty to any points. Instead, functions that correctly classify points with high confidence (i.e., with high values of \({\displaystyle \vert f({\vec {x}})\vert }\)) are penalized less. This structure leads the logistic loss function to be sensitive to outliers in the data.

- AKA:

- Cross-Entropy

- What does it minimize:

It minimizes the Kullback-Leibler divergence between the empirical distribution and the predicted distribution. - Formula:

$$L(\hat{y}, y) = -\sum_{i} y_i \log \left(\hat{y}_ {i}\right)$$

- Binary Cross-Entropy:

$$L(\hat{y}, y) = -\left[y \log \hat{y}+\left(1-y\right) \log \left(1-\hat{y}_ {n}\right)\right]$$

- Graph:

- CE and Negative-Log-Probability:

The Cross-Entropy is equal to the Negative-Log-Probability (of predicting the true class) in the case that the true distribution that we are trying to match is peaked at a single point and is identically zero everywhere else; this is usually the case in ML when we are using a one-hot encoded vector with one class \(y = [0 \: 0 \: \ldots \: 0 \: 1 \: 0 \: \ldots \: 0]\) peaked at the \(j\)-th position

\(\implies\)$$L(\hat{y}, y) = -\sum_{i} y_i \log \left(\hat{y}_ {i}\right) = - \log (\hat{y}_ {j})$$

- CE and Log-Loss:

Given \(p \in\{y, 1-y\}\) and \(q \in\{\hat{y}, 1-\hat{y}\}\):$$H(p,q)=-\sum_{x }p(x)\,\log q(x) = -y \log \hat{y}-(1-y) \log (1-\hat{y}) = L(\hat{y}, y)$$

-

Derivation:

Given:

- \(\hat{y} = \sigma(yf(x))\),

- \(y \in \{-1, 1\}\),

- \(\hat{y}' = \sigma(f(x))\),

- \[y' = (1+y)/2 = \left\{\begin{array}{ll}{1} & {\text {for }} y' = 1 \\ {0} & {\text {for }} y = -1\end{array}\right. \in \{0, 1\}\]

- We start with the modified binary cross-entropy

\(\begin{aligned} -y' \log \hat{y}'-(1-y') \log (1-\hat{y}') &= \left\{\begin{array}{ll}{-\log\hat{y}'} & {\text {for }} y' = 1 \\ {-\log(1-\hat{y}')} & {\text {for }} y' = 0\end{array}\right. \\ \\ &= \left\{\begin{array}{ll}{-\log\sigma(f(x))} & {\text {for }} y' = 1 \\ {-\log(1-\sigma(f(x)))} & {\text {for }} y' = 0\end{array}\right. \\ \\ &= \left\{\begin{array}{ll}{-\log\sigma(1\times f(x))} & {\text {for }} y' = 1 \\ {-\log(\sigma((-1)\times f(x)))} & {\text {for }} y' = 0\end{array}\right. \\ \\ &= \left\{\begin{array}{ll}{-\log\sigma(yf(x))} & {\text {for }} y' = 1 \\ {-\log(\sigma(yf(x)))} & {\text {for }} y' = 0\end{array}\right. \\ \\ &= \left\{\begin{array}{ll}{-\log\hat{y}} & {\text {for }} y' = 1 \\ {-\log\hat{y}} & {\text {for }} y' = 0\end{array}\right. \\ \\ &= -\log\hat{y} \\ \\ &= \log\left[\dfrac{1}{\hat{y}}\right] \\ \\ &= \log\left[\hat{y}^{-1}\right] \\ \\ &= \log\left[\sigma(yf(x))^{-1}\right] \\ \\ &= \log\left[ \left(\dfrac{1}{1+e^{-yf(x)}}\right)^{-1}\right] \\ \\ &= \log \left(1+e^{-yf(x)}\right)\end{aligned}\)

-

- CE and KL-Div:

When comparing a distribution \({\displaystyle q}\) against a fixed reference distribution \({\displaystyle p}\), cross entropy and KL divergence are identical up to an additive constant (since \({\displaystyle p}\) is fixed): both take on their minimal values when \({\displaystyle p=q}\), which is \({\displaystyle 0}\) for KL divergence, and \({\displaystyle \mathrm {H} (p)}\) for cross entropy.Basically, minimizing either will result in the same solution.

- What does it minimize:

- Exponential Loss

- Formula:

$$L(\hat{y}, y) = e^{-\beta y \hat{y}}$$

- Properties:

- Convex

- Grows Exponentially for negative values making it more sensitive to outliers

- It penalizes incorrect predictions more than Hinge loss and has a larger gradient.

- Used in AdaBoost algorithm

- Formula:

- Perceptron Loss

- Formula:

$${\displaystyle L(z, y_i) = {\begin{cases}0&{\text{if }}\ y_i\cdot z_i \geq 0\\-y_i z&{\text{otherwise}}\end{cases}}}$$

- Formula:

- Analysis

- Logistic vs Hinge Loss:

Logistic loss diverges faster than hinge loss (image). So, in general, it will be more sensitive to outliers. Reference. Bad info? - Cross-Entropy vs MSE:

Basically, CE > MSE because the gradient of MSE \(z(1-z)\) leads to saturation when then output \(z\) of a neuron is near \(0\) or \(1\) making the gradient very small and, thus, slowing down training.

CE > Class-Loss because Class-Loss is binary and doesn’t take into account “how well” are we actually approximating the probabilities as opposed to just having the target class be slightly higher than the rest (e.g. \([c_1=0.3, c_2=0.3, c_3=0.4]\)).

- Logistic vs Hinge Loss:

Information Theory

-

What is Information Theory? In the context of ML?

Information theory is a branch of applied mathematics that revolves around quantifying how much information is present in a signal.In the context of machine learning, we can also apply information theory to continuous variables where some of these message length interpretations do not apply, instead, we mostly use a few key ideas from information theory to characterize probability distributions or to quantify similarity between probability distributions.

-

Describe the Intuition for Information Theory. Intuitively, how does the theory quantify information (list)?

The basic intuition behind information theory is that learning that an unlikely event has occurred is more informative than learning that a likely event has occurred. A message saying “the sun rose this morning” is so uninformative as to be unnecessary to send, but a message saying “there was a solar eclipse this morning” is very informative.Thus, information theory quantifies information in a way that formalizes this intuition:

- Likely events should have low information content - in the extreme case, guaranteed events have no information at all

- Less likely events should have higher information content

- Independent events should have additive information. For example, finding out that a tossed coin has come up as heads twice should convey twice as much information as finding out that a tossed coin has come up as heads once.

- Measuring Information - Definitions and Formulas:

- In Shannons Theory, how do we quantify “transmitting 1 bit of information”?

To transmit \(1\) bit of information means to divide the recipients Uncertainty by a factor of \(2\). - What is the amount of information transmitted?

The amount of information transmitted is the logarithm (base \(2\)) of the uncertainty reduction factor. - What is the uncertainty reduction factor?

It is the inverse of the probability of the event being communicated. - What is the amount of information in an event \(x\)?

The amount of information in an event \(\mathbf{x} = x\), called the Self-Information is:$$I(x) = \log (1/p(x)) = -\log(p(x))$$

- In Shannons Theory, how do we quantify “transmitting 1 bit of information”?

- Define the Self-Information - Give the formula:

The Self-Information or surprisal is a synonym for the surprise when a random variable is sampled.

The Self-Information of an event \(\mathrm{x} = x\):$$I(x) = - \log P(x)$$

- What is it defined with respect to?

Self-information deals only with a single outcome.

- What is it defined with respect to?

-

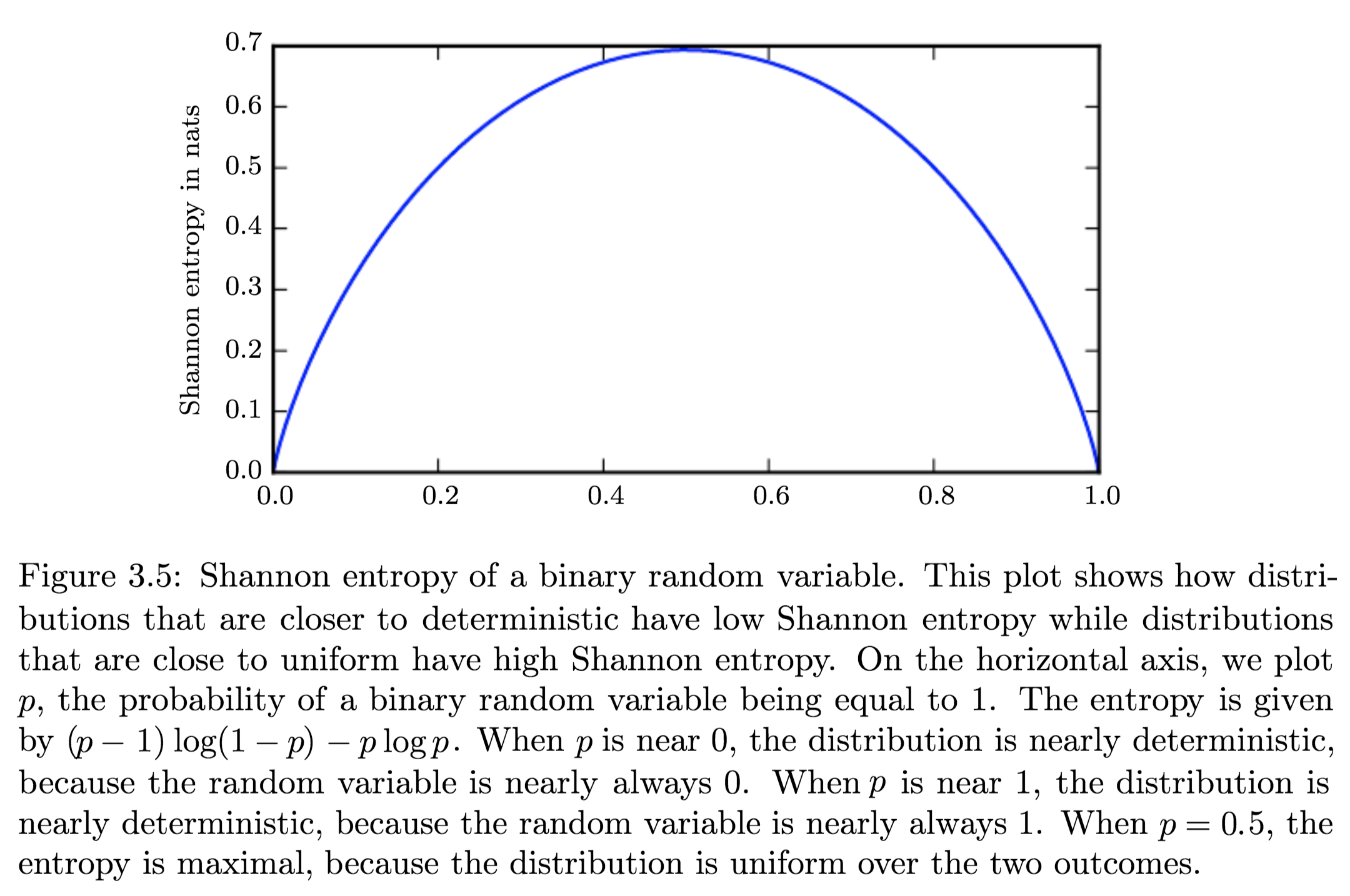

Define Shannon Entropy - What is it used for?

Shannon Entropy is defined as the average amount of information produced by a stochastic source of data.

Equivalently, the amount of information that you get from one sample drawn from a given probability distribution \(p\).To quantify the amount of uncertainty in an entire probability distribution, we use Shannon Entropy.

$$H(x) = {\displaystyle \operatorname {E}_{x \sim P} [I(x)]} = - {\displaystyle \operatorname {E}_{x \sim P} [\log P(X)] = -\sum_{i=1}^{n} p\left(x_{i}\right) \log p\left(x_{i}\right)}$$

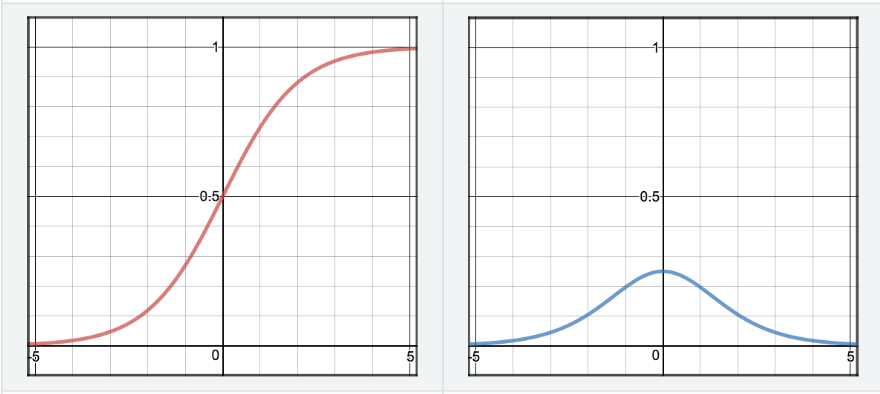

- Describe how Shannon Entropy relate to distributions with a graph:

- Describe how Shannon Entropy relate to distributions with a graph:

- Define Differential Entropy:

Differential Entropy is Shannons entropy of a continuous random variable \(x\) - How does entropy characterize distributions?

Distributions that are nearly deterministic (where the outcome is nearly certain) have low entropy; distributions that are closer to uniform have high entropy. -

Define Relative Entropy - Give it’s formula:

The Kullback–Leibler divergence (Relative Entropy) is a measure of how one probability distribution diverges from a second, expected probability distribution.Mathematically:

$${\displaystyle D_{\text{KL}}(P\parallel Q)=\operatorname{E}_{x \sim P} \left[\log \dfrac{P(x)}{Q(x)}\right]=\operatorname{E}_{x \sim P} \left[\log P(x) - \log Q(x)\right]}$$

- Discrete:

$${\displaystyle D_{\text{KL}}(P\parallel Q)=\sum_{i}P(i)\log \left({\frac {P(i)}{Q(i)}}\right)}$$

- Continuous:

$${\displaystyle D_{\text{KL}}(P\parallel Q)=\int_{-\infty }^{\infty }p(x)\log \left({\frac {p(x)}{q(x)}}\right)\,dx,}$$

- Give an interpretation:

- Discrete variables:

It is the extra amount of information needed to send a message containing symbols drawn from probability distribution \(P\), when we use a code that was designed to minimize the length of messages drawn from probability distribution \(Q\).

- Discrete variables:

- List the properties:

- Non-Negativity:

\({\displaystyle D_{\mathrm {KL} }(P\|Q) \geq 0}\) - \({\displaystyle D_{\mathrm {KL} }(P\|Q) = 0 \iff}\) \(P\) and \(Q\) are:

- Discrete Variables:

the same distribution - Continuous Variables:

equal “almost everywhere”

- Discrete Variables:

- Additivity of Independent Distributions:

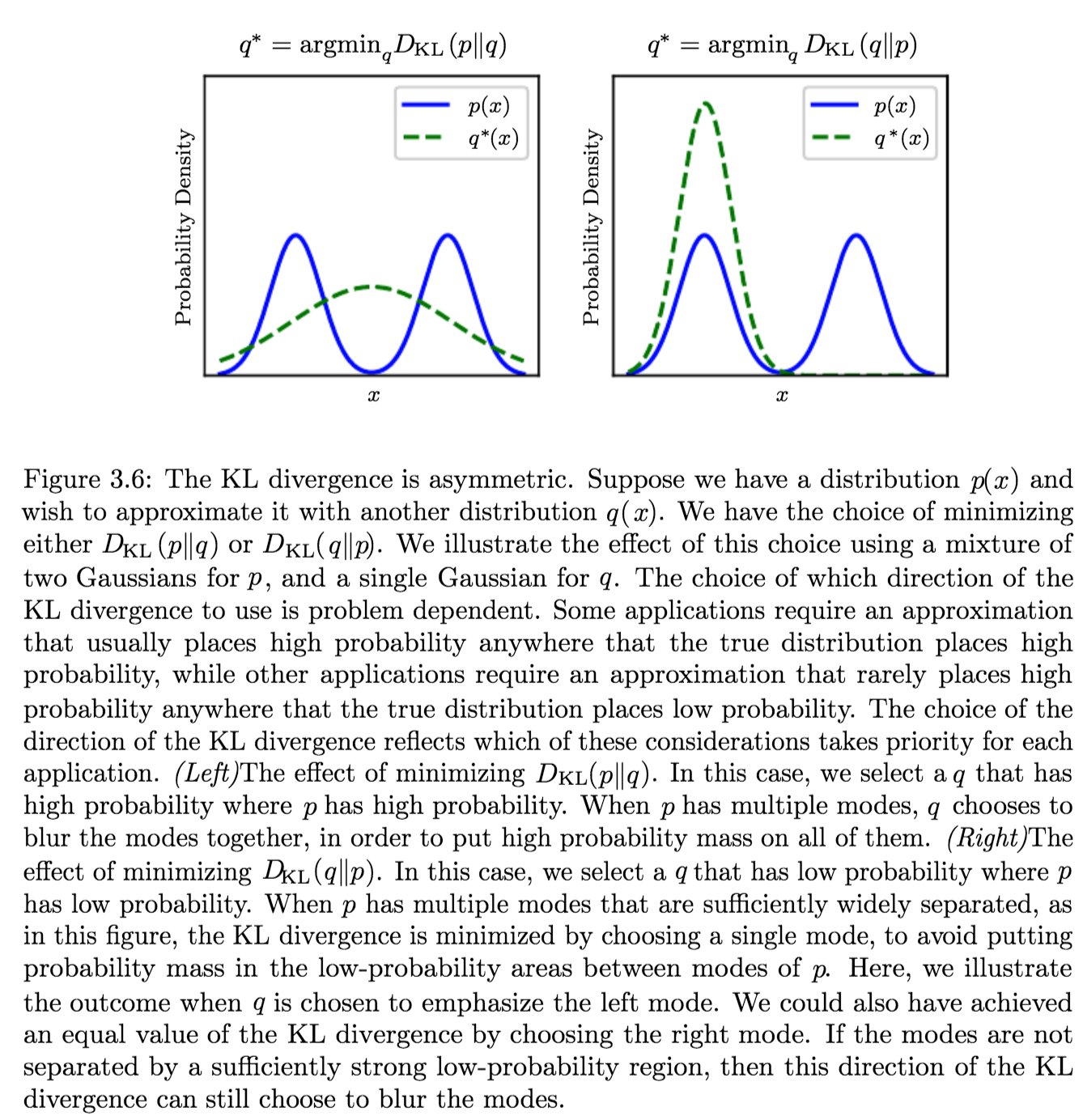

\({\displaystyle D_{\text{KL}}(P\parallel Q)=D_{\text{KL}}(P_{1}\parallel Q_{1})+D_{\text{KL}}(P_{2}\parallel Q_{2}).}\) - \({\displaystyle D_{\mathrm {KL} }(P\|Q) \neq D_{\mathrm {KL} }(Q\|P)}\)

This asymmetry means that there are important consequences to the choice of the ordering

- Convexity in the pair of PMFs \((p, q)\) (i.e. \({\displaystyle (p_{1},q_{1})}\) and \({\displaystyle (p_{2},q_{2})}\) are two pairs of PMFs):

\({\displaystyle D_{\text{KL}}(\lambda p_{1}+(1-\lambda )p_{2}\parallel \lambda q_{1}+(1-\lambda )q_{2})\leq \lambda D_{\text{KL}}(p_{1}\parallel q_{1})+(1-\lambda )D_{\text{KL}}(p_{2}\parallel q_{2}){\text{ for }}0\leq \lambda \leq 1.}\)

- Non-Negativity:

- Describe it as a distance:

Because the KL divergence is non-negative and measures the difference between two distributions, it is often conceptualized as measuring some sort of distance between these distributions.

However, it is not a true distance measure because it is not symmetric.KL-div is, however, a Quasi-Metric, since it satisfies all the properties of a distance-metric except symmetry

- List the applications of relative entropy:

Characterizing:- Relative (Shannon) entropy in information systems

- Randomness in continuous time-series

- Information gain when comparing statistical models of inference

- How does the direction of minimization affect the optimization:

Suppose we have a distribution \(p(x)\) and we wish to approximate it with another distribution \(q(x)\).

We have a choice of minimizing either:- \({\displaystyle D_{\text{KL}}(p\|q)} \implies q^\ast = \operatorname {arg\,min}_q {\displaystyle D_{\text{KL}}(p\|q)}\)

Produces an approximation that usually places high probability anywhere that the true distribution places high probability. - \({\displaystyle D_{\text{KL}}(q\|p)} \implies q^\ast \operatorname {arg\,min}_q {\displaystyle D_{\text{KL}}(q\|p)}\)

Produces an approximation that rarely places high probability anywhere that the true distribution places low probability.which are different due to the asymmetry of the KL-divergence

- \({\displaystyle D_{\text{KL}}(p\|q)} \implies q^\ast = \operatorname {arg\,min}_q {\displaystyle D_{\text{KL}}(p\|q)}\)

- Define Cross Entropy - Give it’s formula:

The Cross Entropy between two probability distributions \({\displaystyle p}\) and \({\displaystyle q}\) over the same underlying set of events measures the average number of bits needed to identify an event drawn from the set, if a coding scheme is used that is optimized for an “unnatural” probability distribution \({\displaystyle q}\), rather than the “true” distribution \({\displaystyle p}\).$$H(p,q) = \operatorname{E}_{p}[-\log q]= H(p) + D_{\mathrm{KL}}(p\|q) =-\sum_{x }p(x)\,\log q(x)$$

- What does it measure?

The average number of bits that need to be transmitted using a different probability distribution \(q\) (for encoding) than the “true” distribution \(p\), to convey the information in \(p\). - How does it relate to relative entropy?

It is similar to KL-Div but with an additional quantity - the entropy of \(p\). - When are they equivalent?

Minimizing the cross-entropy with respect to \(Q\) is equivalent to minimizing the KL divergence, because \(Q\) does not participate in the omitted term.

- What does it measure?

Recommendation Systems

- Describe the different algorithms for recommendation systems:

Ensemble Learning

- What are the two paradigms of ensemble methods?

- Parallel

- Sequential

- Random Forest VS GBM?

The fundamental difference is, random forest uses bagging technique to make predictions. GBM uses boosting techniques to make predictions.

Data Processing and Analysis

- What are 3 data preprocessing techniques to handle outliers?

- Winsorizing/Winsorization (cap at threshold).

- Transform to reduce skew (using Box-Cox or similar).

- Remove outliers if you’re certain they are anomalies or measurement errors.

- Describe the strategies to dimensionality reduction?

- Feature Selection

- Feature Projection/Extraction

- What are 3 ways of reducing dimensionality?

- Removing Collinear Features

- Performing PCA, ICA, etc.

- Feature Engineering

- AutoEncoder

- Non-negative matrix factorization (NMF)

- LDA

- MSD

- List methods for Feature Selection

- Variance Threshold: normalize first (variance depends on scale)

- Correlation Threshold: remove the one with larger mean absolute correlation with other features.

- Genetic Algorithms

- Stepwise Search: bad performance, regularization much better, it’s a greedy algorithm (can’t account for future effects of each change)

- LASSO, Elastic-Net

- List methods for Feature Extraction

- PCA, ICA, CCA

- AutoEncoders

- LDA: LDA is a supervised linear transformation technique since the dependent variable (or the class label) is considered in the model. It Extracts the k new independent variables that maximize the separation between the classes of the dependent variable.

- Linear discriminant analysis is used to find a linear combination of features that characterizes or separates two or more classes (or levels) of a categorical variable.

- Unlike PCA, LDA extracts the k new independent variables that maximize the separation between the classes of the dependent variable. LDA is a supervised linear transformation technique since the dependent variable (or the class label) is considered in the model.

- Latent Semantic Analysis

- Isomap

- How to detect correlation of “categorical variables”?

- Chi-Squared test: it is a statistical test applied to the groups of categorical features to evaluate the likelihood of correlation or association between them using their frequency distribution.

- Feature Importance

- Use linear regression and select variables based on \(p\) values

- Use Random Forest, Xgboost and plot variable importance chart

- Lasso

- Measure information gain for the available set of features and select top \(n\) features accordingly.

- Use Forward Selection, Backward Selection, Stepwise Selection

- Remove the correlated variables prior to selecting important variables

- In linear models, feature importance can be calculated by the scale of the coefficients

- In tree-based methods (such as random forest), important features are likely to appear closer to the root of the tree. We can get a feature’s importance for random forest by computing the averaging depth at which it appears across all trees in the forest

- Capturing the correlation between continuous and categorical variable? If yes, how?

Yes, we can use ANCOVA (analysis of covariance) technique to capture association between continuous and categorical variables. - What cross validation technique would you use on time series data set?

Forward chaining strategy with k folds. - How to deal with missing features? (Imputation?)

- Assign a unique category to missing values, who knows the missing values might decipher some trend.

- Remove them blatantly

- we can sensibly check their distribution with the target variable, and if found any pattern we’ll keep those missing values and assign them a new category while removing others.

- Do you suggest that treating a categorical variable as continuous variable would result in a better predictive model?

For better predictions, categorical variable can be considered as a continuous variable only when the variable is ordinal in nature. - What are collinearity and multicollinearity?

- Collinearity occurs when two predictor variables (e.g., \(x_1\) and \(x_2\)) in a multiple regression have some correlation.

- Multicollinearity occurs when more than two predictor variables (e.g., \(x_1, x_2, \text{ and } x_3\)) are inter-correlated.

ML/Statistical Models

- What are parametric models?

Parametric models are those with a finite number of parameters. To predict new data, you only need to know the parameters of the model. Examples include linear regression, logistic regression, and linear SVMs. - What is a classifier?

A function that maps…

K-NN

PCA

- What is PCA?

It is a statistical procedure that uses an orthogonal transformation to convert a set of observations of possibly correlated variables into a set of values of linearly uncorrelated variables called principal components. - What is the Goal of PCA?

Given points \(\mathbf{x}_ i \in \mathbf{R}^d\), find k-directions that capture most of the variation. - List the applications of PCA:

- Find a small basis for representing variations in complex things.

e.g. faces, genes.

- Reducing the number of dimensions makes some computations cheaper.